Since the start of 2025, one topic has dominated conversations with technology leaders: agentic AI. Many companies now say they have “entered the agentic era,” and most are trying to understand what that actually means for their teams and systems.

The enthusiasm isn’t driven by hype. It comes from a practical question almost every enterprise leader has faced: if AI can understand what you’re asking for, why can’t it take the next step?

Most organizations reached a point where chatbots could explain and automation could execute, but nothing could do both with context. That gap is what brought agentic AI into focus.

As you explore this space, a few questions appear quickly:

- What qualifies a system as an agent?

- How is this different from the AI approaches used over the past decade?

- What conditions must be in place before agents can operate with confidence?

These questions matter because the term “agent” has spread faster than its definition. Before looking at use cases or adoption stages, it helps to anchor the concept in clear, straightforward terms. And the best place to start is with the word at the center of it all: agent.

Where the term “agent” comes from

Before looking at the technology, it helps to understand the language. “Agent” did not begin in computing. The word has existed for centuries and has always carried the idea of action.

It comes from the Latin agens, meaning “one who acts.” When it entered English in the late 1400s, it described a person who acted on behalf of someone else. By the 16th century, it referred to natural forces that produced an effect. By the 20th century, it appeared in biology, economics and early computing to describe an independent actor inside a system.

Across all of these contexts, the core idea remained consistent: an agent does something. It moves a situation forward.

That evolution mirrors what we now see with AI. As teams began expecting systems to understand a goal and take the next step, the word “agent” shifted from a metaphor to a practical description of what this new class of systems is designed to do.

Understanding the evolution of AI before the agentic era

To understand what makes the agentic era different, it helps to look at how AI evolved over the years. Each stage added a little more capability and moved us closer to what we now expect from an agent. Most of us have lived through these phases without realising it.

1. Rule-based systems (1980s to early 2000s)

This was the era of predictable automation. If you called a bank and heard “Press 1 for balance, Press 2 for support,” that was rule-based AI. The same applied to early spam filters that blocked emails containing certain words, or online tax calculators that followed fixed formulas. These systems did exactly what they were told, nothing more, nothing less.

2. Intent-based chatbots (2010 to 2018)

Then came chatbots on telecom, airline and e-commerce websites. Ask about your bill, flight status or delivery, and they would send you a ready-made reply. These systems recognised intent, which made interactions smoother, but they still couldn’t take action. They couldn’t update an order, open a ticket or complete a task. Their role was to reply, not to act.

3. Conversational AI (2016 to 2022)

As chatbots matured, conversational AI emerged. Assistants could hold more natural, multi-turn conversations and retain basic context. Troubleshooting improved and guidance felt less mechanical. But the limitation was that the intelligence stayed at the conversation layer. So, while they made interactions easier, humans still carried the real work behind the scenes.

4. Generative AI (2018 to 2023)

Generative AI marked a different kind of shift. Machines could now produce content, not just recognise patterns. Large language models could read, understand and generate text in ways that felt intuitive, so you could drop in a document, ask a question or share a rough idea and get something useful back.

The GPT wave made this mainstream. AI became part of everyday work. Students used it for assignments. Teams used it for drafts and quick clarity. In many ways, it democratized access to AI and helped people be more productive with certain tasks.

But its role stopped at helping you think through a task, not completing it. It could outline steps, but not run them. It could write an email, but not send it. The responsibility for action still sat with people.

So even though this phase changed how we approached work, it didn’t change who moved the work forward. And that led to the next question:

If AI understands what we want, why can’t it help act on it?

That question opened the door to the agentic era.

The arrival of Agentic AI (2024–present)

By 2024, many organizations had reached the same realization. AI could understand intent far better than before, and automation could execute repeatable steps, but these capabilities lived in separate parts of the workflow. The work moved only when a person connected them.

This gap pushed agentic AI into focus.

Instead of stopping at interpretation, teams began testing small AI components that could read a goal, understand the surrounding context and carry out simple actions inside their systems. These early agents were not designed to run entire processes. They handled the routine steps that often slow work down, such as preparing information, validating details, routing items or triggering follow-ups. Even that level of support changed how teams thought about where AI fits in day-to-day operations.

Raj Koneru has emphasised in several recent keynotes that AI becomes meaningful only when it helps the work progress. It’s not enough for a system to understand the request; it should be able to move the task forward. That idea reinforced what many teams were already starting to see in their own environments, and it pushed the conversation toward agents in a more practical way.

By late 2024, agentic AI had already moved beyond theory. Early agents were quietly taking on routine tasks across service, HR, IT and operations, which changed the nature of the discussion. Instead of asking whether this approach makes sense, teams were deciding where it belongs, how much responsibility it should have and what level of oversight feels right. In that sense, the agentic era didn’t arrive through a single breakthrough. It took shape as soon as organisations began expecting AI to participate in the work, not just describe it.

What do we mean by an “agent”?

At Reimagine NYC, Raj Koneru addressed a question nearly every enterprise team now asks: what exactly is an agent? He pointed out that there is still no single, official definition. The idea is new, and the industry is shaping it through real implementations rather than theory.

What he offered instead was a practical way to think about it. In his view, an agent brings together understanding, planning and reasoning in a way that feels like a small, context-aware “brain” inside the workflow. It should understand what the user wants, make sense of the surrounding data and decide the next step with enough confidence to keep the work moving.

That leads to a simpler, enterprise-ready definition:

- It understands what someone is trying to achieve

- It interprets the context around the request

- It decides what should happen next

- It takes action inside your systems to move the task forward

Earlier tools handled fragments of this: chatbots responded, automation executed, generative models interpreted. Agents combine these behaviours so progress doesn’t pause at every step that needs human intervention.

The simplest way to think about an agent is this:

It understands the goal and helps carry the work, not just describe it.
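The four behaviours in the definition above can be sketched as four small functions chained together. This is only an illustration under simple assumptions: the names (`Request`, `understand_goal`, `interpret_context`, `decide_next_step`, `take_action`), the password-reset scenario and the keyword matching are hypothetical stand-ins, and a real agent would typically use a language model and live enterprise systems in their place.

```python
from dataclasses import dataclass


@dataclass
class Request:
    user: str
    text: str


def understand_goal(request: Request) -> str:
    """Understand what someone is trying to achieve (toy keyword match)."""
    return "reset_password" if "password" in request.text.lower() else "unknown"


def interpret_context(request: Request, directory: dict) -> dict:
    """Interpret the context around the request (here, a user directory lookup)."""
    return directory.get(request.user, {"verified": False})


def decide_next_step(goal: str, context: dict) -> str:
    """Decide what should happen next."""
    if goal == "reset_password" and context["verified"]:
        return "send_reset_link"
    return "hand_to_human"


def take_action(step: str, request: Request, outbox: list) -> str:
    """Take action inside a system (an in-memory outbox stands in for one)."""
    if step == "send_reset_link":
        outbox.append(f"reset link sent to {request.user}")
        return "done"
    return "escalated"


def handle(request: Request, directory: dict, outbox: list) -> str:
    """Chain the four behaviours so the request does not stall between them."""
    goal = understand_goal(request)
    context = interpret_context(request, directory)
    step = decide_next_step(goal, context)
    return take_action(step, request, outbox)
```

Calling `handle(Request("asha", "I forgot my password"), {"asha": {"verified": True}}, outbox)` runs all four steps in one pass, while an unverified user or an unrecognised request is handed to a person instead.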

What is Agentic AI?

By now, the idea should already make sense. Agentic AI isn’t a sudden leap or a new label. It’s the stage where several AI capabilities finally come together in a way that allows work to move forward.

At its core, agentic AI refers to AI systems that can understand a goal, decide what needs to happen next, and take action across systems to progress that task with limited human involvement.

What is new here is not intelligence itself, but continuity. Rather than stopping once it has given a response or taken a single action, the system stays with the problem, handling routine actions and bridging transitions until something genuinely calls for human judgment. This assistance across and between tasks, rather than at one place or one moment, is what characterises AI in its agentic phase.

Agentish vs Agentic: Understanding the difference

Before going further, it helps to slow down on one thing, because this is where a lot of confusion creeps in. The word agent is being used very broadly right now, even though the systems behind it don’t all behave the same way.

What most teams start with are systems that are agentish or agent-like. They can understand a request, respond intelligently, and sometimes take action. They are useful and make things easier, but they still need to be told what to do, step by step. Once they respond or complete a task, the work comes back to the person.

As teams get more comfortable, expectations change. At some point, people stop asking for better responses and start asking a different question: can this system actually carry the work forward?

That’s where agentic systems feel different. They understand the goal, work out what needs to happen next, and keep going across steps. They stay with the task instead of stopping after a single interaction. They only pause when something genuinely needs human judgment.

This also makes one thing clear. We didn’t suddenly arrive at the agentic AI era. What we’re seeing now is the result of a steady evolution. Many teams started with agentish systems that could assist at individual steps, respond intelligently, or trigger simple actions. Over time, expectations grew. Those agentish capabilities began giving way to more agentic systems that can carry work forward, stay with a task across steps, and take on real responsibility inside workflows. As that shift happens, the focus naturally moves from responses to behaviour, from isolated interactions to how these systems operate day after day in real environments.
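The difference between agentish and agentic behaviour can be shown with a small control-flow sketch. Everything here is an assumption made for illustration: the step functions, the `needs_human` signal and the 500 threshold are hypothetical, not taken from any product.

```python
def new_task(amount: float) -> dict:
    """Create a toy task; 'amount' drives whether validation needs a person."""
    return {"amount": amount, "history": [], "status": "open"}


def collect_details(task: dict) -> str:
    task["details"] = "collected"
    return "ok"


def validate(task: dict) -> str:
    # Hypothetical rule: larger amounts need human judgment.
    return "ok" if task["amount"] <= 500 else "needs_human"


def update_record(task: dict) -> str:
    task["record"] = "updated"
    return "ok"


STEPS = [
    ("collect_details", collect_details),
    ("validate", validate),
    ("update_record", update_record),
]


def agentish(task: dict, steps: list) -> dict:
    """Agent-like: perform one step, then hand the work back to the person."""
    name, run = steps[0]
    run(task)
    task["history"].append(name)
    return task  # the remaining steps wait for a human to ask for them


def agentic(task: dict, steps: list) -> dict:
    """Agentic: stay with the task across steps, pausing only for human judgment."""
    for name, run in steps:
        outcome = run(task)
        task["history"].append(name)
        if outcome == "needs_human":
            task["status"] = "waiting_for_human"
            return task
    task["status"] = "complete"
    return task
```

With `new_task(120)`, `agentish` stops after the first step and leaves the task open, while `agentic` works through all three steps. With `new_task(900)`, `agentic` pauses at validation and never touches the record.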

Why agentic AI represents a new class of technology

Agentic AI feels different because it changes the role AI plays inside the workflow. For years, AI helped teams understand information, summarise it or frame the next step, but the work still moved only when a person stepped in. That quiet dependency shaped most AI deployments, even when they delivered value.

Agents shift that pattern. They interpret what someone is trying to achieve, work out the next logical step, gather the required information and move the task forward without constant direction. When teams experience this, it stands out immediately. It is not about making AI more impressive. It is about allowing it to participate in the work instead of stopping at interpretation.

This becomes particularly clear in organisations that experimented early. Small actions that normally need manual attention, such as updating a field, checking a status or preparing the next step, begin to happen on their own. None of them is dramatic in isolation, but together they are enough to change how people think about where AI belongs in daily operations.

That is why agentic AI feels like a new class of technology. It does not replace people or overhaul entire processes. It simply takes on parts of the work in a steady, predictable way. And once teams see that behaviour, they understand why this cannot be grouped with earlier waves of AI. It changes how work progresses, which is ultimately what places it in a different category altogether.

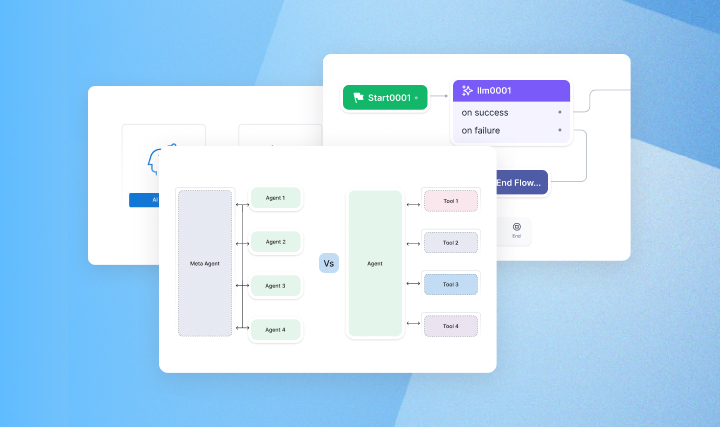

Types of agents in the agentic AI landscape

As organisations begin working with agentic AI, it becomes clear that not all agents serve the same purpose. Each type plays a specific role in how work gets done, and together they form the building blocks for more advanced systems. The categories below reflect that progression and help teams understand what kind of responsibility an agent can take on.

1. Reactive agents

These are straightforward agents that respond to direct prompts. They complete simple, single-step tasks such as retrieving information, generating quick drafts or answering routine questions. They give teams an immediate sense of value without requiring major changes to existing workflows.

2. Contextual agents

These agents remember what happened earlier in the interaction. They keep track of intent, recent history and relevant details so people do not have to repeat themselves. This makes the experience smoother and creates a more natural flow in service, HR or IT scenarios where users return with similar needs.

3. Tool-using agents

Tool-using agents can call APIs, connect to enterprise systems and complete multi-step activities. They understand what the user is trying to achieve and take action inside systems rather than stopping at explanation. This is often the first moment when organisations truly experience what “agentic” means because the agent is now participating in the workflow.
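One common way to picture a tool-using agent is as a registry of named functions plus a plan that calls them in order. The sketch below assumes that shape. The `tool` decorator, the `ORDERS` store and both tool names are hypothetical, and in practice the plan would usually come from a model rather than being written by hand.

```python
from typing import Callable

# Registry of tools the agent is allowed to call (hypothetical names).
TOOLS: dict[str, Callable[..., dict]] = {}

# In-memory stand-in for an enterprise order system.
ORDERS = {"A100": {"status": "processing", "address": "12 Old Road"}}


def tool(name: str):
    """Decorator that registers a function under a tool name."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register


@tool("get_order")
def get_order(order_id: str) -> dict:
    return dict(ORDERS[order_id])  # return a copy as a snapshot


@tool("update_address")
def update_address(order_id: str, address: str) -> dict:
    ORDERS[order_id]["address"] = address
    return dict(ORDERS[order_id])


def run_plan(plan: list[tuple[str, dict]]) -> list[dict]:
    """Execute a multi-step plan, one registered tool call per step."""
    results = []
    for name, args in plan:
        if name not in TOOLS:
            raise KeyError(f"unknown tool: {name}")
        results.append(TOOLS[name](**args))
    return results
```

A plan such as `[("get_order", {"order_id": "A100"}), ("update_address", {"order_id": "A100", "address": "7 New Street"})]` reads the order and then changes it, which is the step from explaining to acting that the paragraph above describes.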

4. Collaborative multi-agent systems

In these systems, several agents work together and each one handles a specific part of the task. One may gather information, another may validate it and another may complete an operational step. This mirrors how human teams divide work and makes it easier for organisations to handle complex or cross-functional processes.
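The division of labour described above (one agent gathers, one validates, one completes the operational step) can be sketched as a simple hand-off chain. The onboarding scenario, the `Case` structure and the required fields are assumptions chosen for illustration. Real multi-agent systems often add an orchestrator, retries and parallelism that this sketch leaves out.

```python
from dataclasses import dataclass, field


@dataclass
class Case:
    employee: str
    data: dict = field(default_factory=dict)
    issues: list = field(default_factory=list)
    log: list = field(default_factory=list)


def gathering_agent(case: Case, hr_records: dict) -> Case:
    """Collects whatever the HR system knows about the employee."""
    case.data = dict(hr_records.get(case.employee, {}))
    case.log.append("gathered")
    return case


def validating_agent(case: Case) -> Case:
    """Checks that the fields the next step depends on are present."""
    for required in ("start_date", "manager"):
        if required not in case.data:
            case.issues.append(f"missing {required}")
    case.log.append("validated")
    return case


def operations_agent(case: Case) -> Case:
    """Completes the operational step, or escalates if validation found issues."""
    if case.issues:
        case.log.append("escalated to human")
    else:
        case.log.append("accounts provisioned")
    return case


def run_onboarding(employee: str, hr_records: dict) -> Case:
    """Pass one case through the three agents in sequence."""
    case = Case(employee)
    case = gathering_agent(case, hr_records)
    case = validating_agent(case)
    return operations_agent(case)
```

Each agent only knows its own part of the task and the shared `Case`, which is what keeps the responsibilities separable.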

5. Autonomous subsystems

These agents manage a defined portion of a workflow with minimal supervision. They handle predictable, high-volume tasks such as onboarding checks, triage, procurement validations or ticket categorisation. Their value comes from consistent execution and a noticeable reduction in manual effort.

6. Organisational intelligence layer

At this stage, agents operate across functions with shared memory, shared rules and shared context. They support HR, finance, IT, operations and customer experience in a unified way. This layer becomes part of the organisation’s operational backbone and keeps work moving with more consistency and visibility.

Six stages of agentic AI adoption inside the enterprise

Most organisations begin their agentic AI journey long before the first agent goes live. The early work is usually about understanding what agents can realistically take on, where they fit inside existing workflows and what needs to be in place for them to operate safely. Over time, this exploration settles into a predictable pattern.

These six stages reflect how enterprises usually progress from interest to real operational outcomes.

Stage 1: Build understanding and identify the right opportunities

The early stage is all about clarity. Teams look for tasks that are repetitive, rules-driven or slowed down by manual coordination. They also review whether their data, APIs and processes are stable enough for agent-led work. By the end of this stage, most organisations have a small group of workflows where agents can make a real difference, along with a practical sense of what needs to be ready before they move ahead.

Stage 2: Prepare the environment for agentic work

Once the opportunities are clear, the focus shifts to creating the right conditions for agents to operate safely. This includes defining permissions, setting guardrails and understanding how exceptions will be handled. Teams also put monitoring and reliable data access in place. The outcome is a controlled environment where early agents can participate in the work without disrupting day-to-day operations.
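Permissions, guardrails and exception handling can be made concrete with a small check that runs before any agent action. This is a minimal sketch under assumed rules: the `Guardrails` fields, the action names and the refund limit are hypothetical examples, not a recommended policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Guardrails:
    allowed_actions: frozenset  # permissions: what the agent may do at all
    max_refund: float           # guardrail: hypothetical limit on one action


def check(action: str, amount: float, rails: Guardrails) -> str:
    """Return 'allow', 'escalate' or 'deny' for a proposed agent action."""
    if action not in rails.allowed_actions:
        return "deny"       # outside the agent's permissions
    if action == "issue_refund" and amount > rails.max_refund:
        return "escalate"   # permitted, but this case is an exception for a person
    return "allow"
```

For example, with `Guardrails(frozenset({"read_ticket", "issue_refund"}), max_refund=100.0)`, a small refund is allowed, a large one is escalated, and any action not on the list is denied outright.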

Stage 3: Deploy early agents for real tasks

This is the first point where agents touch real workflows. Organisations introduce them to small, contained tasks to observe how they behave inside actual systems. The intention here is learning, not scale. Leaders watch how accurately the agents work, where they hesitate and when they escalate. These early insights usually shape what gets refined and what becomes a strong candidate for expansion.

Stage 4: Expand into multi-step and cross-functional work

After early pilots, organisations start to see where agents can help work move across multiple steps or systems. Agents begin coordinating actions, carrying context forward and reducing the back-and-forth that slows down progress. This stage often reveals the first signs of broader operational impact, where workflows feel lighter and teams begin recognising the value of connected agent behaviour.

Stage 5: Allow agents to own a defined portion of the workflow

At this point, agents take on more responsibility. They manage a clear portion of a workflow, handle routine decisions and ask for human input only when something falls outside predictable patterns. Because the boundaries are well-defined, organisations see improvements in consistency, turnaround times and overall workload reduction. This is often the stage that builds real trust.

Stage 6: Integrate agents into enterprise-wide operations

In the final stage, agents stop operating in isolated pockets. They support work across HR, finance, IT, operations and service with shared context and unified policies. Leaders gain visibility into how agents make decisions, and teams begin to see them as part of the organisation’s normal rhythm of work. At this point, agents contribute in a steady and predictable way, and the enterprise shifts from experimentation to a blended operating model.

Where agentic AI makes a practical impact

To understand the impact of agentic AI, it helps to compare how work happens today with how it moves when agents are involved.

How work actually happens today

In most enterprises, completing a task means coordinating across systems, people, and handoffs. An employee receives a request, gathers the information, confirms the details, decides the next step, updates the systems, and notifies others. The steps are simple, yet the coordination takes time. Automation helps only when the path is fixed. The moment a task needs interpretation or meets an exception, a person takes over. Generative AI (GenAI) improved how information is handled, but execution still remained with humans.

How agentic AI creates impact

With agentic AI in place, the flow of work feels different. The agent takes in the request, understands what the person is trying to get done, and gathers the information it needs without waiting for someone to guide each step. It moves through the task the way a well-informed colleague would, completing what it can and keeping everything updated as it goes. When the situation calls for human judgment, it pauses and hands the task back with the context already in place.

The change is easy to notice. The agent does not stop at understanding the task. It helps move the work forward. Routine steps that once depended on someone checking, following up, or switching between systems begin to progress on their own. People stay focused on decisions that need their expertise, and the overall workflow becomes smoother and more predictable.

Three areas where agentic AI creates impact

Agentic AI delivers the most practical value in three areas where work naturally slows down: the day-to-day tasks inside teams, the interactions that support customers and employees, and the multi-step processes that cut across departments. These areas reflect how enterprises actually operate, so even small improvements create meaningful lifts in speed and consistency.

Work

Much of the work inside an organization involves preparing information, validating details, routing requests, and keeping tasks moving. These steps are important, yet they take time away from activities that require real judgment. Agents handle this coordination smoothly. They collect what is needed, complete the predictable steps, and progress the task so employees can focus on the parts that rely on their expertise.

Service

Service interactions depend on continuity. When context is lost or simple requests need escalation, the experience slows down. Agents maintain context throughout the interaction and complete straightforward tasks in the same flow. For situations that need human attention, the agent gathers the details, organizes the information, and hands the case over in a ready state. This creates faster resolutions for routine issues and better-prepared teams for complex ones.

Process

Enterprise processes often involve many steps, systems, and checkpoints. Breaks in that chain create delays, inconsistencies, and rework. Agents keep these processes on track by following the established logic, validating each step, and ensuring the right information moves forward. In workflows like onboarding, claims, procurement, and order management, this leads to smoother progress and fewer manual interventions.

Where agentic AI delivers impact across industries

Agentic AI tends to make the most difference in places where work depends on gathering information, applying known rules, and moving tasks across systems. Every industry has these patterns, which is why the early impact shows up in similar parts of the workflow, even though the actual tasks are different.

Financial services

Teams use agents to support account servicing, loan processing, compliance activities, and onboarding. Agents prepare the information, validate requirements, and keep the work moving so people can focus on decisions that need expertise.

Retail and e-commerce

Order updates, returns, customer questions, and inventory tasks often slow down due to volume. Agents help by tracking status, confirming details, and taking the small actions that keep operations running smoothly.

Telecommunications

Service activation, billing reviews, troubleshooting, and plan updates all follow predictable sequences. Agents complete those steps and pass on anything that needs human attention with clear context.

Insurance

Claims intake, policy servicing, underwriting, and first notice of loss involve gathering information and checking it against existing rules. Agents do this consistently and help the workflow continue without unnecessary delays.

Human resources

Onboarding, benefits, employee questions, and offboarding all require coordination across multiple systems. Agents take on the routine steps so HR teams can focus on people rather than paperwork.

IT operations

Incident handling, access requests, change coordination, and service desk tasks have well-defined starting points. Agents classify issues, run initial checks, and complete routine actions that help keep IT environments responsive.

What defines a strong agentic AI use case

Not everything is a good use case for agentic AI. It may feel like agents can take on anything, but that is rarely the case. Some workflows are a natural fit and others are better left with people. The difference usually becomes clear once you look at how the work is shaped and how much of it an agent can handle without constant interpretation from a human.

A few characteristics tend to show up in the workflows that succeed with agents.

Clear logic: The work follows a pattern that makes sense even when the details change. If the reasoning behind each step is stable, the agent can learn it and carry it out reliably.

System reach: The task depends on information and actions that live inside systems the agent can access. When the data is available and the systems are connected, the agent can move through the steps without friction.

Meaningful frequency: Some tasks seem small on their own, but they happen every day across teams. These repeated workflows are often where agents create the clearest value.

Data-driven context: The agent can understand the situation from the information already present in your systems. When the context is visible, the agent knows what needs to happen next.

Manageable exceptions: Most cases follow the expected path, while the unusual ones can go to a person. This balance allows agents to handle a large share of the work without taking away the parts that genuinely require judgment.

Where human-led work still makes more sense: There will always be tasks that rely on interpretation, creativity, or navigating ambiguity. These are better handled by people, with agents supporting the parts that can be standardized.
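The five characteristics above can be turned into a simple screening checklist. The sketch below is one possible reading, not a formal method: the `WorkflowProfile` fields mirror the list, and treating a workflow as a strong candidate only when all five hold is an assumption made for illustration.

```python
from dataclasses import dataclass, fields


@dataclass
class WorkflowProfile:
    clear_logic: bool            # the reasoning behind each step is stable
    system_reach: bool           # data and actions live in systems the agent can access
    meaningful_frequency: bool   # the task recurs often enough to matter
    data_driven_context: bool    # the situation is visible in existing data
    manageable_exceptions: bool  # unusual cases can be routed to a person


def missing_characteristics(profile: WorkflowProfile) -> list:
    """List the characteristics a workflow does not yet meet."""
    return [f.name for f in fields(profile) if not getattr(profile, f.name)]


def is_strong_candidate(profile: WorkflowProfile) -> bool:
    """Assumption: a strong candidate meets all five characteristics."""
    return not missing_characteristics(profile)
```

The list returned by `missing_characteristics` is often more useful than the yes or no answer, because it shows what would need to change before an agent is introduced.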

Where agentic AI is not practical

As organisations start working with agentic AI, it helps to be clear about where agents should not take responsibility. The constraints are rarely about model capability. They are almost always about the environment the agent is placed into. Agents operate well when workflows are stable, information is available and rules are defined. They struggle when those foundations are not there.

A few boundaries become evident very early in real deployments.

1. Lack of a proper workflow sequence

Agents can handle variability, but they still need a basic, repeatable pattern to operate with confidence. When teams follow different sequences, when steps change from case to case or when no one can clearly describe how a task is meant to move, the agent has nothing stable to reason with. In these situations, humans remain better suited because they can interpret ambiguity, reset direction and make sense of work that does not follow any consistent path.

2. Lack of clean, connected data

Agents make decisions based on the information available to them. When data is scattered across systems, inconsistent, outdated or not accessible through reliable APIs, the agent cannot form an accurate picture of what needs to happen next. It hesitates, escalates or produces incomplete outcomes. In many early deployments, this becomes the first barrier teams encounter. Until the underlying data pathways are stable and connected, humans remain better equipped to interpret fragmented information and move the work forward.

3. Low-frequency or highly specialised tasks

Agents deliver the most value in high-volume, repeatable work where decision patterns can be modelled with confidence. Tasks that occur infrequently or rely on deep tacit expertise do not provide enough signal for an agent to operate reliably. Training and maintaining an agent for these cases adds complexity without meaningful operational benefit. It is more practical for humans to continue handling this category of work.

4. Decisions requiring human judgment

Some decisions cannot be reduced to rules or historical patterns. Clinical interpretation, financial approvals, legal considerations and sensitive employee matters require human judgment, accountability and contextual understanding. Agents can prepare information and reduce the surrounding manual effort, but they cannot take responsibility for the outcome. In these scenarios, human oversight is not optional but essential.

5. Absence of governance foundations

Agents need clarity on how they should operate. If permissions, escalation paths, monitoring and auditability are not defined, the agent cannot be introduced into the workflow with confidence. It isn’t about the agent making mistakes. It’s about the organisation not having a clear way to supervise or intervene when required. Until these guardrails are in place, the responsibility is better left with people.

The economics of Agentic AI

Agentic AI doesn’t follow the cost patterns we used for earlier automation models. Most teams begin by asking what it costs to build an agent, but that turns out to be the smallest part of the investment. The real economics emerge when the agent starts carrying work inside a live workflow. That’s when organisations see what it takes to support the agent properly, how quickly it begins contributing and where the investment actually makes sense.

Agentic AI build costs: What organisations actually pay for

The build phase is usually predictable. You set up the platform, define the reasoning layer and outline how the agent should handle the initial workflow. It’s structured, controlled work. The more meaningful considerations begin when the agent needs to connect to enterprise systems and operate inside a live process rather than sit as a standalone automation.

Agentic AI integration costs: Where real investment occurs

Most of the investment surrounds the agent, not the agent itself. When organisations connect agents to real workflows, a few areas consistently shape the economics, such as:

System integrations: Agents need stable, secure access to CRMs, ERPs, EHRs, ticketing tools and internal APIs. This often forms the bulk of the early effort.

Data readiness: Agents don’t need perfect data, but they do need consistent access to the specific information the workflow depends on.

Governance: Permissions, monitoring, auditability and escalation rules allow the agent to take responsibility safely.

Adoption: Teams need time to understand the agent’s behaviour and align their own steps. Adoption has a major influence on how quickly value shows up.

Agentic AI operational costs: What it takes to run an agent

Once an agent is deployed, it needs steady but minimal oversight. Enterprise systems evolve, new exceptions appear and workflows shift over time, so the agent requires periodic adjustments to stay reliable. The ongoing cost is low compared to human effort, but it is still a planned part of operating an agent in a live environment.

Agentic AI value timeline: How quickly ROI becomes visible

The return from agentic AI depends on the type of agent entering the workflow. Each one takes on a different level of responsibility, so the impact becomes visible at different points in the journey. For example:

Task agents: Immediate relief in high-volume work

Task agents tend to show value first. They take over the small, repetitive actions that quietly consume large amounts of time, such as routine checks, simple validations and basic inquiries. These steps look minor on their own, but they happen constantly across the organisation. When an agent starts handling them, interruptions drop, context switching reduces and teams feel the difference almost immediately.

System-connected agents: Value builds as the workflow stabilises

Agents that act inside systems deliver a different kind of lift. Once the integrations and permissions settle, these agents begin updating records, moving cases forward and triggering the next step inside the workflow. Cycle times shorten because the work no longer pauses between systems or waits for someone to complete routine handoffs. The workflow becomes more fluid, and the improvement is noticeable across teams that handle high volumes.

Multi-agent workflows: Deeper impact as orchestration matures

When multiple agents begin working together, the nature of the workflow shifts. One may gather information, another may validate it and another may update downstream systems. This reduces bottlenecks, makes exceptions more visible and increases throughput in a way that is difficult to achieve with human capacity alone. It takes more coordination to get this stage right, but when it settles, the operational lift is substantial.

You begin to see these gains early. The long-term ROI becomes clearer when the agent is not just answering questions or running isolated steps, but participating in the workflow in a steady, reliable way.

Agentic AI hidden costs: What organisations often miss

Most teams enter agentic AI with a good sense of the visible costs: platform, compute, integrations and the initial build. The hidden costs appear later, once the agent starts working inside real workflows. Everest Group notes that these “hidden layers” are the reason many organisations revise their ROI expectations after pilots move into production.

1. Human-dependent gaps that now need fixing

Workflows often depend on people to resolve unclear steps, fill missing details or adjust rules informally. Agents cannot improvise like this. When they hit these gaps, they stop, and the organisation must formalise everything humans were doing instinctively. That means rewriting steps, defining rules and cleaning up data issues that were previously absorbed by human judgment. This refinement becomes a real cost because the workflow has to be made explicit before an agent can run it reliably.

2. Oversight and visibility that must be built deliberately

Once agents start taking actions, leaders expect clear visibility into how decisions were made. Setting up monitoring, decision traces, audit logs, permissions and escalation paths adds real operational effort. It’s essential for trust and compliance, but it rarely appears in early budgeting.
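As a rough illustration of what a decision trace involves, the sketch below records one audit entry per agent action. The field names, the `record_decision` helper and the in-memory list are assumptions made for the example. A production setup would write to durable, access-controlled storage and follow the organisation's own compliance schema.

```python
import json
from datetime import datetime, timezone


def record_decision(log: list, agent_id: str, action: str, reason: str,
                    inputs: dict, escalated: bool = False) -> dict:
    """Append one decision-trace entry to an in-memory audit log."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,      # which agent acted
        "action": action,          # what it did
        "reason": reason,          # why it did it
        "inputs": inputs,          # what it based the decision on
        "escalated": escalated,    # whether a person was pulled in
    }
    log.append(entry)
    return entry


def export_log(log: list) -> str:
    # One JSON object per line, a common format for log pipelines.
    return "\n".join(json.dumps(entry, sort_keys=True) for entry in log)
```

Even a structure this small answers the questions leaders tend to ask first: who acted, on what basis, and whether a human was involved.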

3. Workflow design that doesn’t translate into autonomous execution

Many workflows were never designed for autonomous execution. They assume a person is present to interpret context, manage exceptions, coordinate across teams or decide when to change course. The process works because humans absorb this complexity. Agents surface the gap immediately because they need a clear path, stable handoffs and predictable ownership. When those elements are missing, organisations must rethink parts of the workflow so the agent can operate reliably. That redesign effort becomes a hidden cost, and it usually appears only when the agent starts working inside the process.

Why hidden costs impact agentic AI success

These hidden layers matter because they influence how quickly an organisation moves from pilot to real operational value. When workflows need more cleanup than expected or when oversight is not yet in place, teams spend their time preparing the environment instead of letting the agent carry meaningful work. That shift in effort slows momentum, and leaders begin to question whether the deployment is progressing as planned.

Gartner’s research reflects this reality. It predicts that over 40 percent of agentic AI projects will be cancelled by the end of 2027, not because the technology falls short, but because organisations underestimate the operational work required to run agents reliably inside real processes.

The key takeaway is that agentic AI succeeds when the environment is ready for it. When it is not, the organisation spends more time fixing the workflow than benefiting from the agent. Getting these foundations in place early makes the economics stronger and helps agents take on the parts of the work that truly shift outcomes.

How Agentic AI Is Evolving Inside the Organisation

Agentic AI behaves very differently from the earlier AI systems we worked with. It doesn’t pause after a few steps or wait for someone to decide what happens next. It steps in, takes responsibility for its part of the workflow, and keeps the task moving. When you watch it do this in a real setting, the idea of a coworker doesn’t feel like an analogy anymore.

More companies are beginning to use this language openly. At AWS’s re:Invent, teams were encouraged to look at AI agents as teammates that carry meaningful parts of the workload, not utilities sitting on the edges. The idea is simple: if something is helping move work forward on its own, it is no longer acting like traditional software.

Raj puts this in a way many leaders connect with right away: “Agents behave like coworkers. They understand the goal, they take action, and they stay with the task until something meaningful happens.”

That is exactly what teams experience when they start working with these systems. They hand off the repetitive, layered steps and stay focused on decisions, exceptions and outcomes that truly need people.

Why large enterprises are preparing for the agentic workforce

At the World Economic Forum in Davos, Salesforce CEO Marc Benioff said something that caught a lot of attention. He described today's chief executives as "the last generation to manage only humans." It wasn't a prediction about the distant future. It was an observation about what's already starting to happen.

Benioff pointed to Salesforce's own support operations as an example. Agents now handle roughly half of all customer inquiries there, working alongside 9,000 human support staff. The two groups operate in the same workflows, just handling different parts of the work.

Microsoft took a similar stance at its Ignite conference when it introduced Agent 365, a platform designed to help organisations deploy and govern agents the same way they would any other workforce member. The system treats agents like onboarded team members, each with a clear identity and set of responsibilities.

These are just a few examples. Many other large enterprises recognise that agentic AI is not hype but a necessity they must prepare for.

Redefining leadership for the Agentic era

2025 showed leaders that agents are not a short-term experiment. They are becoming part of how work gets done in day-to-day operations. In 2026, more large organisations will formalise this by treating agents as part of their workforce structure: assigning responsibilities, defining ownership and ensuring they work in sync with the people around them.

This introduces a different leadership challenge. People and agents contribute differently. People bring judgment, context and decision-making. Agents bring consistency, availability and the ability to keep routine work moving. The role of leadership is to make sure both operate within a clear system so the workflow remains predictable and outcomes stay reliable.

With that in mind, leaders preparing for an agentic workforce tend to focus on a few fundamentals:

1. Establish identity and access early

Every agent needs a clear identity in the organisation’s systems. Leaders define who the agent is, what it can access and what it is accountable for. This makes its actions traceable and ensures it operates with the same transparency expected from any contributor.

2. Identify the work the agent will own

Ownership is not vague. Leaders specify the exact steps, checks or decisions the agent is responsible for. When this is explicit, teams know what the agent handles and where human involvement continues to matter.

3. Make the agent’s actions discoverable

People work better with agents when they can see what the agent did, why it did it and what it plans next. Simple discoverability—logs, reasoning visibility, decision trails—builds trust and prevents unnecessary intervention.

4. Stabilise the workflows the agent will run

Agents rely on predictable inputs and structured pathways. Leaders ensure the workflows around the agent’s responsibilities are stable enough for consistent execution and clearly defined escalation when needed.

5. Set boundaries that are easy to understand

Agents need clarity on when to proceed, when to pause and when to escalate. Leaders outline these boundaries up front so the agent operates confidently and people know when their judgment is required.

6. Build team comfort and alignment

For most organisations, the real shift is behavioural. Teams need to understand how the agent works, what to expect from it and how handoffs will feel. Leaders bring this alignment early so the blended workforce operates without confusion.

7. Integrate the agent into how the team measures work

Agents do not operate outside the team. Their output becomes part of the team’s performance. Leaders make this integration explicit so responsibility remains connected and outcomes stay unified.
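Several of these fundamentals (identity, ownership and boundaries) can be expressed as explicit configuration and not left as convention. The sketch below is one hypothetical way to do that in Python. The `AgentProfile` fields, the `max_amount` limit and the `decide` rules are illustrative assumptions, not a standard or a vendor schema.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    PROCEED = "proceed"
    PAUSE = "pause"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class AgentProfile:
    agent_id: str               # identity used in logs and access control
    owner: str                  # accountable person or team
    allowed_systems: frozenset  # what the agent may access
    owned_steps: frozenset      # the work the agent is responsible for
    max_amount: float           # illustrative boundary for autonomous action


def decide(profile: AgentProfile, step: str, system: str,
           amount: float, inputs_complete: bool) -> Decision:
    # Outside the agent's ownership or access: hand over to a person.
    if step not in profile.owned_steps or system not in profile.allowed_systems:
        return Decision.ESCALATE
    # Missing inputs: wait; do not guess.
    if not inputs_complete:
        return Decision.PAUSE
    # Above the agreed limit: human judgment is required.
    if amount > profile.max_amount:
        return Decision.ESCALATE
    return Decision.PROCEED
```

For example, an invoice-matching agent with a limit of 500 would proceed on a complete 120 invoice, pause if fields were missing, and escalate a 900 invoice or any step it does not own. Keeping these rules in one place makes the boundaries easy for people to read as well.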

What the evolution of agentic AI means for people

As agentic AI becomes part of everyday operations, the biggest change shows up in how people use their time. The routine steps that once filled a large portion of the workday start moving on their own, and attention shifts to areas where human input makes a real difference.

Where attention moves

When the predictable tasks no longer compete for time, people focus more on decisions, exceptions and conversations that require experience or context. The workday starts to feel less fragmented. Teams spend more time moving outcomes forward and less time coordinating the basics.

A more natural form of collaboration

Working with agents does not feel like supervision. People are not monitoring or correcting. They simply step in when the situation needs judgment and let the agent handle the parts that benefit from consistency. Over time, both sides settle into a rhythm: the agent keeps the process steady, and people handle the parts that cannot be standardised.

Better flow across teams

When agents prepare information, check details or handle follow-ups, the rest of the workflow becomes easier for everyone involved. Handoffs are smoother. Reviews take less time. Conversations become more meaningful because the groundwork is already done.

More space for meaningful work

As the routine load reduces, teams naturally gravitate toward tasks that require insight, creativity or problem-solving. The work feels more intentional, not because roles change, but because the distractions around the role reduce.

Clearer accountability

People know exactly where their judgment matters. Agents handle the operational flow; humans remain responsible for direction, exceptions and outcomes. This clarity reduces second-guessing and helps teams stay aligned.

Build vs Buy: A practical question in the Agentic era

At some point, every organisation working seriously with agents reaches the same fork in the road. Do we keep building this internally, or do we rely on a platform that already knows how to run agents inside an enterprise?

Building feels reasonable at first. Most large organisations have capable engineering teams, access to foundation models, and a deep understanding of how their own workflows operate. When agents are described as reasoning layered on top of models, it’s easy to assume this is something internal teams can assemble and improve over time.

In pilot phases, that thinking usually holds. Teams can build agents for specific tasks, connect them to a few systems, and see early success.

The challenge shows up when those same agents are expected to operate as part of enterprise-wide initiatives. Running continuously, interacting across multiple systems, adapting as workflows change, and operating with clear oversight introduces a very different level of complexity.

This tension shows up clearly in enterprise research as well. MIT Sloan’s study on generative AI adoption notes that while organisations can and do build early systems internally, sustaining them as a dependable, evolving capability is where most teams slow down. The effort quickly extends beyond models into integration, governance, operating discipline, and ongoing adaptation as the business changes. That’s why many enterprises end up mixing approaches, building where it makes sense, and relying on external platforms for the parts that require deeper, sustained expertise.

At this stage, leaders realise that agentic AI works only when decisions are grounded in practicality, not preference. Agentic AI touches too many layers at once for any one organisation to do everything on its own. Running agents reliably means getting orchestration, oversight, human interaction, and system integration right together, not one at a time.

That’s why organisations start looking for partners with enterprise-level experience in running agents inside real workflows. Not to replace internal teams, but to add the operating discipline that comes from doing this in production, such as setting clear boundaries for agents, putting the right oversight in place, and making sure handoffs work once volume and exceptions appear. This kind of experience reduces rework, helps teams move beyond pilots sooner, and ensures agents behave consistently when they become part of everyday operations.

How Kore.ai approaches Agentic AI implementation

Most enterprises can figure out where agents could be used. That part is fairly straightforward. The real challenge shows up when those agents have to be implemented inside live enterprise workflows. Agents aren’t plug and play. They have to work across existing systems, follow real operating rules, deal with exceptions, and stay reliable over time. This is where the work shifts from identifying use cases to making sure agents behave in a way the organisation can trust.

That’s the space Kore.ai works in, focusing on the practical details that determine whether agents fit cleanly into day-to-day operations or become another source of friction. The sections below reflect how that work typically unfolds in real enterprise environments.

Giving the agent a clear identity and place in the organisation

An agent must have defined responsibilities, access rules and boundaries before it can operate with confidence. This creates traceability and helps teams understand where the agent fits. Once this foundation is set, leaders know what the agent owns, where it escalates and how it contributes to the flow of work.

Preparing workflows so agents can operate consistently

Agents perform well when the surrounding workflow is stable. That means predictable steps, consistent data and clear handoffs. Much of the early work involves strengthening these areas so the agent can take responsibility without uncertainty. This creates a smoother path for adoption and fewer interruptions later.

Supporting the natural stages of adoption without forcing a model

Organisations expand their use of agents gradually. Early agents may handle single tasks; later ones support multi-step work that moves across teams. Kore.ai supports this progression at the organisation’s pace. Throughout the journey, leaders maintain visibility into the agent’s behaviour, decision-making and escalation points.

Avoiding the common gaps that slow adoption

Many workflows rely on human judgment, undocumented rules or informal adjustments. Agents cannot interpret these without clarity. Kore.ai helps organisations uncover where the workflow needs definition, where data needs alignment and where escalation paths should sit. Addressing these elements early reduces rework and builds trust in the system.

What success looks like in a blended workforce

Success is not measured by how many agents are deployed. It is measured by how naturally they operate within the workflow. When teams trust the agent’s actions, when oversight is simple, when exceptions are manageable and when work moves with fewer delays, the organisation begins to experience the real benefit of agentic AI. Kore.ai’s role is to help enterprises reach this point with confidence and sustain it as the agent’s responsibilities grow.

And that approach comes from repeated exposure to real enterprise environments, across industries and functions, where agents are expected to hold up regardless of the systems or teams around them.

Moving Forward with Agentic AI

If there’s one thing this evolution makes clear, it’s that work isn’t going back to the way it was. Agents will only get smarter. They’ll take on more responsibility than we imagined a year ago. And that raises a question all of us will face sooner than we think: what does our day look like when a meaningful part of the work is carried forward before we even step in?

You can almost sense the shape of it. There’s less time spent setting things up and more time moving directly into decisions. Steps that usually slow the day down begin to clear themselves. The spaces that normally fill with routine tasks start to open, leaving more room for the kind of work that genuinely needs a person.

It’s not a distant idea. You can already see hints of it in organisations trying this today. A routine task progresses without being nudged. A process that usually stretches across days reaches completion without a reminder. The small frictions we’ve learned to accept gradually ease out of the way, and once you see that happen, even once, you understand where this is headed.

As agents mature, the real consideration is not what they can accomplish, but how we want the overall shape of work to evolve around their contribution. That’s the phase we’re entering now. And it’s one worth stepping into with a sense of curiosity, not caution.
