The AI productivity paradox: why employees are moving faster than enterprises

Akhil Ganji

January 12, 2026

Introduction

It's 9:47 AM on a Tuesday. Frank, a marketing director at a Fortune 500 company, is staring at his laptop. He has 23 minutes before his next meeting and needs to summarize a 40-page competitive analysis report for an executive briefing.

He opens a new browser tab, navigates to ChatGPT or NotebookLM, uploads the report, complete with proprietary market data and strategic insights, and presses Enter.

Sound familiar?

It should.

According to recent research, 69% of cybersecurity leaders suspect or have evidence that employees are using public generative AI in the workplace.

This is shadow AI in action.

It's the well-intentioned use of consumer AI, and it's happening in every department of every enterprise. The enthusiasm for AI is a powerful force for employee productivity, but without proper enterprise guardrails, it creates a significant blind spot. Enterprises lack visibility into what data is being shared, which tools are being used, and what risks are accumulating outside their security boundary.

The challenge isn't to stop this behavior, but to turn it from a hidden risk into a strategic, secure asset with the right AI solution for work.

The great AI divide: understanding what's really different

The explosion of consumer AI tools, such as ChatGPT, Claude, and Gemini, has been nothing short of revolutionary. These tools have made AI accessible to everyone, from students writing essays to professionals drafting emails. But here's where the confusion begins: not all AI is created equal, especially when it comes to work.

Consumer AI is built primarily for personal use, such as meal planning, creative writing, updating a resume, or getting quick answers to everyday questions. These solutions are user-friendly and often available for free or at a low cost. They're trained on public data and, critically, many store the user's inputs to improve their models. They're great at answering general questions, but they have no in-depth knowledge of an enterprise's internal documents, policies, and other relevant information.

Enterprise AI, on the other hand, is fundamentally different. Yes, it's secured and compliant, but the real power lies in what it knows and what it can do. Enterprise AI connects directly to an organization's knowledge sources, including CRM systems, knowledge repositories, internal wikis, project management systems, and more.

Imagine this: Instead of spending 90 minutes manually pulling Q3 sales data from five different systems, consolidating it into a PowerPoint presentation, and formatting it for a quarterly business review, the user asks an enterprise AI solution to do it. Three minutes later, the user has a comprehensive presentation, formatted and ready. That 87-minute savings? It's not just about efficiency; it's about giving the team time to think strategically about what the data means, rather than just assembling it.

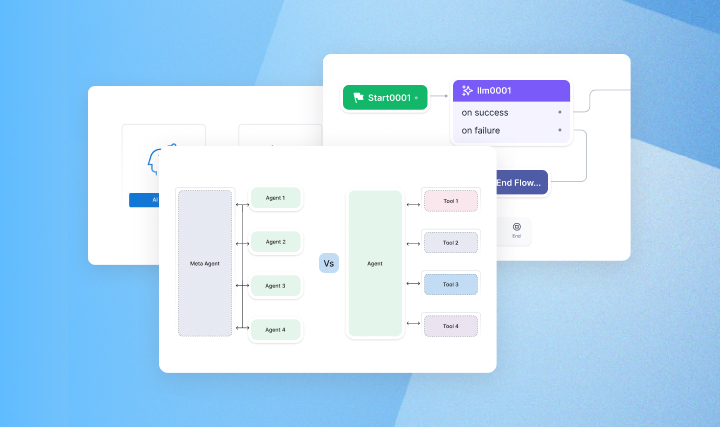

Better yet, enterprise AI can execute workflows or generate reports on a regular cadence. Where a user might ask ChatGPT for help with something on demand, enterprise AI tools also let employees schedule AI agents to run workflows at specific, recurring dates and times. Set an agent to generate a weekly competitive intelligence report every Monday at 8 AM, or to compile customer feedback sentiment analysis every Friday afternoon. Employees can walk into Monday's strategy meeting or Friday's product review already prepared, already armed with insights, ready to have the conversations that actually move the business forward.
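To make the idea of a recurring agent schedule concrete, here is a minimal Python sketch of how the next run time for a weekly job could be computed. It is an illustration only, not how any particular enterprise AI product implements scheduling: the function `next_weekly_run`, the `SCHEDULES` table, its job names, and the choice of 3 PM for "Friday afternoon" are all assumptions made for this example.

```python
from datetime import datetime, timedelta


def next_weekly_run(now: datetime, weekday: int, hour: int, minute: int = 0) -> datetime:
    """Return the first run time strictly after `now` for a weekly schedule.

    `weekday` follows datetime.weekday(): Monday is 0, Sunday is 6.
    """
    # Start from today's date at the scheduled time of day.
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    # Move forward to the requested weekday (0 to 6 days ahead).
    candidate += timedelta(days=(weekday - now.weekday()) % 7)
    # If that moment has already passed (or is right now), use next week's slot.
    if candidate <= now:
        candidate += timedelta(days=7)
    return candidate


# Hypothetical job names and times, based on the examples in the text.
SCHEDULES = {
    "weekly_competitive_intelligence_report": (0, 8),  # Monday 08:00
    "customer_feedback_sentiment_analysis": (4, 15),   # Friday 15:00 (assumed)
}


def upcoming_runs(now: datetime) -> dict:
    """Map each hypothetical job name to its next scheduled run time."""
    return {
        name: next_weekly_run(now, weekday, hour)
        for name, (weekday, hour) in SCHEDULES.items()
    }


if __name__ == "__main__":
    # Tuesday, January 13, 2026 at 9:47 AM.
    for job, run_at in upcoming_runs(datetime(2026, 1, 13, 9, 47)).items():
        print(job, "->", run_at.isoformat())
```

A real scheduler would also have to deal with time zones, missed runs, and retries; the sketch only shows the calendar arithmetic behind "every Monday at 8 AM".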

Overall, enterprise AI doesn't just chat; it acts on enterprise data.

The distinction isn't just technical; it's transformational. Consumer AI helps users work faster, while enterprise AI reimagines the nature of work itself.

The sovereignty question: who really controls the AI solution?

With consumer AI, users are operating in someone else's environment. The prompts, data, and potentially even outputs exist in a third party's infrastructure, subject to their terms, model training practices, and data retention policies. Users have no control over where the data resides, how long it's stored, or who might eventually have access to it.

Enterprise AI solutions flip this equation. They enable data sovereignty, meaning enterprise information stays within a controlled environment, whether that's a private cloud, a data center, or a specific geographic region that meets all regulatory requirements. Enterprises decide data residency, retention policies, and access controls. And crucially, the solutions provide model sovereignty: the ability to use models that are not trained on the enterprise's proprietary data unless the enterprise requires it, or even to deploy and fine-tune the enterprise's own models on its own infrastructure.

This isn't just a technical detail. For organizations operating under strict regulations, such as GDPR, HIPAA, or financial services compliance, sovereignty isn't optional; it's mandatory.

The strategic blind spot for business leaders

Research shows that workers worldwide increased their input of sensitive corporate data into AI tools by 485% from March 2023 to March 2024. Even more concerning, 73.8% of ChatGPT usage at work occurs through non-corporate accounts, which means that employees could be feeding their employers' data into public models. When enterprise data is ingested into public models, it becomes potentially accessible to other AI users through model responses, risking expensive GDPR compliance violations, regulatory fines, and breaches of customer trust.

For business leaders, shadow AI represents a critical strategic risk that is often overlooked. While it boosts individual efficiency, it simultaneously opens the enterprise to data leaks, intellectual property theft, and serious compliance violations. When employees use consumer AI, they are feeding an enterprise's crown jewels, such as customer data, financial models, and future strategies, into systems that lack enterprise-grade security and governance.

Consider what's invisible in this equation: the absence of audit trails. With consumer AI, there's no record of what data or AI agents were used, who accessed them, when, or for what purpose. IT teams and administrators cannot track data flow, monitor for sensitive information exposure, or demonstrate compliance when regulators come knocking. Consumer AI is a black box where the most valuable assets disappear without a trace.

The outputs from these tools are often inconsistent and lack the specific context of your business, leading to flawed insights and poor decision-making. This isn't just a security problem; it's a threat to your competitive advantage and a missed opportunity to build a proper, unified AI strategy that fosters employee engagement safely.

Enterprise AI solutions fundamentally address these challenges. Through comprehensive audit logs, every user action and agent interaction is captured and time-stamped, providing the transparency and accountability necessary for compliance and governance. Furthermore, the solutions typically provide context-aware retrieval-augmented generation (RAG), which grounds AI responses in the enterprise's specific knowledge base so that outputs are accurate, relevant, and reflective of your business context.
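As a rough illustration of how these two ideas fit together, the Python sketch below retrieves a passage from a tiny in-memory knowledge base, builds a grounded prompt from it, and appends a time-stamped audit record for the interaction. Everything in it is hypothetical: the `KNOWLEDGE_BASE` contents, the `AuditEvent` fields, and the keyword-overlap scoring are stand-ins chosen for brevity. Production systems generally use vector search, access controls, and tamper-resistant log storage instead.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical internal documents; a real deployment would index enterprise systems.
KNOWLEDGE_BASE = {
    "travel-policy": "Employees book travel through the internal portal and need manager approval.",
    "q3-sales-summary": "Q3 sales summary covers regional revenue and pipeline by product line.",
}


@dataclass(frozen=True)
class AuditEvent:
    """One time-stamped record of who asked what and which sources were used."""
    timestamp: str
    user: str
    action: str
    query: str
    sources: tuple


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, top_k: int = 1) -> list:
    """Rank documents by shared words with the query (a toy stand-in for vector search)."""
    query_words = tokenize(query)
    scored = [
        (len(query_words & tokenize(text)), doc_id)
        for doc_id, text in KNOWLEDGE_BASE.items()
    ]
    # Keep only documents that share at least one word, best match first.
    ranked = sorted((pair for pair in scored if pair[0] > 0), reverse=True)
    return [doc_id for _, doc_id in ranked[:top_k]]


def build_grounded_prompt(user: str, query: str, audit_log: list) -> str:
    """Retrieve context, record the interaction, and return a prompt grounded in that context."""
    sources = retrieve(query)
    audit_log.append(
        AuditEvent(
            timestamp=datetime.now(timezone.utc).isoformat(),
            user=user,
            action="retrieve",
            query=query,
            sources=tuple(sources),
        )
    )
    context = "\n".join(KNOWLEDGE_BASE[doc_id] for doc_id in sources)
    return f"Context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    log = []
    print(build_grounded_prompt("frank", "What is the travel approval policy?", log))
    print(log[0])
```

The point of the sketch is the pairing: every retrieval that shapes an answer also leaves a record of the user, the query, and the sources consulted, which is the kind of trail an auditor would ask for.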

And with fine-tuned models running in private instances, the data stays secure within the enterprise's environment and, if used for training, improves the enterprise's own model, not someone else's.

What is the path forward?

The confusion between consumer AI and enterprise AI isn't just semantic; it's a critical business risk. Every day that employees rely on unauthorized AI tools is another day your enterprise's data, intellectual property, and competitive advantage are exposed.

But here's the good news: Enterprise AI technology has matured rapidly. 92% of companies plan to increase their AI investment over the next three years, and early adopters are already seeing measurable returns. The path forward requires transitioning from reactive policy enforcement to proactive enablement, providing employees with the necessary tools while maintaining the security and governance the enterprise demands.

The question is no longer "if" but "how fast" you can bridge the gap between employee needs and enterprise requirements. Frank's 23-minute shortcut might have felt productive. But for that enterprise, it could have cost millions in breached data, regulatory fines, or lost competitive advantage. The real productivity gain comes from giving people like Frank the right tools, ones that are just as powerful as the ChatGPTs of the world, but designed for the complexity and sensitivity of enterprise work.

It's time to move beyond the public playground and build your future on an enterprise-grade foundation.

At Kore.ai, we believe that the AI revolution is here, and that AI can help enterprises reimagine employee productivity. Our question to you: are you leading the AI revolution, or is it leading you?

Learn more about AI for Work and how enterprises can leverage the solution across teams to enable secure, productive AI adoption at scale.
