AI agents are live. They're making decisions, triggering workflows, and acting on behalf of the business, right now, across thousands of enterprises. And a growing number of those organizations are quietly asking the same question: who's actually in control?

Not rhetorically. Practically.

When an agent executes a business process end-to-end, coordinates across systems at machine speed, and makes decisions in milliseconds, the governance model that worked for predictive AI simply doesn't hold. Human oversight still exists, but it can't operate at agent speed. And when something goes wrong, the trail is harder to follow, the decisions harder to explain, and the accountability harder to assign.

The stakes rose further when no-code platforms put agent-building in the hands of citizen developers: capable, well-meaning business users who have never had to think about safety architecture, data boundaries, or audit trails. The result can be agents with access to live systems and sensitive data, built without guardrails and deployed without oversight.

As agentic systems take on more responsibility, the questions organizations are asking get harder:

- How do you ensure alignment when systems optimize independently?

- How do you maintain explainability when decisions happen in milliseconds across distributed agents?

- How do you demonstrate meaningful control to regulators and auditors when constant supervision isn't operationally possible?

These aren't theoretical concerns. They're the practical challenges separating experimental deployments from agentic AI that can be trusted in production and scaled responsibly.

What the most advanced implementers are discovering is counterintuitive: governance isn't what slows agentic AI down. It's what makes it viable. When it's designed into the architecture, not bolted on after the fact, organizations move faster and with far greater confidence.

This guide is for enterprise leaders deploying, or planning to deploy, AI agents in real production environments. It breaks down what AI agent governance actually requires, why legacy approaches fall short, and how to design governance that scales with autonomy rather than constraining it, so that autonomy and accountability reinforce each other instead of trading off.

AI Agent Governance Fundamentals: What It Actually Means

When people discuss AI agent governance, the conversation often drifts into abstractions. So let's ground this clearly.

AI agent governance refers to the set of structures, both organizational and technical, that enable autonomous AI agents to operate safely, responsibly, and within defined boundaries over time. It combines policies, guardrails, monitoring, and oversight mechanisms to ensure that systems designed to act independently remain aligned with business intent, legal obligations, and societal expectations.

Put more simply: governance is how you maintain control, accountability, and trust when deploying systems that reason, decide, and act without asking permission first.

That distinction matters because agentic systems behave differently from the AI most organizations have governed in the past. In practice, effective AI agent governance is measured by how systems behave under real operational pressure, not how policies read on paper.

Why Governance Is Measured by Behavior, Not Documentation

Concretely, it comes down to three questions:

- Can you prevent violations before they happen, not just detect them after?

- Can you reconstruct why an agent made a decision six months later?

- Can you intervene meaningfully when agents operate at machine speed?

The difference between governance that works and governance that looks good on paper comes down to these operational realities.

Why Traditional AI Governance Frameworks Don't Work Anymore

If you've implemented AI governance before, the familiar pattern looks like this: train a model, validate performance, assess bias, deploy, monitor for drift. That approach worked because humans stayed in the loop. The AI generated predictions or recommendations; people made the final decisions. Accountability was clear, and governance could be periodic and reactive.

Agentic AI changes the operating model entirely.

AI agents don't just predict outcomes; they take action. They perceive their environment, reason through options, and execute decisions autonomously, often in coordination with other agents and systems, and often continuously, outside business hours, at machine speed.

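New governance challenges emerge that traditional frameworks weren't designed to address: keeping systems aligned when they optimize independently, explaining decisions made in milliseconds across distributed agents, and demonstrating meaningful control when constant supervision isn't operationally possible.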

The Three Pillars of Effective AI Agent Governance

Effective AI agent governance rests on three foundations. They are interdependent, not sequential: you cannot build trust without managing risk, and you cannot demonstrate compliance without both. Organizations that treat them as separate initiatives discover gaps when regulators or auditors ask for evidence that spans all three.

- AI risk management focuses on preventing material harm, detecting when agent behavior drifts into unsafe territory, and managing risks like cascading failures, goal misalignment, and emergent behaviors that were never explicitly programmed.

- Trust and responsible AI address the human dimension. Customers, employees, partners, and regulators need to understand when agents are making decisions, why those decisions were made, and when human oversight applies. Without trust, agentic systems remain confined to low-stakes use cases, regardless of their technical capability.

- Compliance and regulatory alignment reflect the legal reality organizations operate in. Regulations like the EU AI Act, along with state- and industry-level requirements, demand documentation, auditability, and demonstrable control, not as box-checking exercises, but as evidence of responsible operation.

Together, these pillars define whether agentic systems can be trusted, explained, and defended when scrutiny arises.

Why This Foundation Matters

Getting this right shapes everything that follows: how you evaluate AI agent platforms, what governance infrastructure you invest in, what observability you require, and how convincingly you can explain agentic systems to skeptical stakeholders.

Organizations that skip this foundation often end up with governance that looks impressive on paper but fails in practice: policies that aren't enforced, controls that don't prevent real incidents, and documentation that can't answer hard questions when something goes wrong.

Agentic AI represents a new way of building systems. The governance that supports it has to be new as well, not louder or heavier, but more deeply integrated into how these systems are designed and operated.

Why AI Agent Governance Is Non-Negotiable

If you're questioning whether AI agent governance is worth the investment, understand this: that decision has already been made for you. A combination of regulatory pressure, legal exposure, and technical reality has turned governance from a nice-to-have into a prerequisite for deploying agentic AI at scale.

- Regulation: Governance Is Now Legally Mandated

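The EU AI Act is law, with its obligations phasing in over several years. High-risk AI systems, a category that covers many agentic use cases in credit decisions, hiring, and access to essential services, face mandatory requirements around risk management, data governance, transparency, and human oversight. Penalties reach up to €35 million or 7% of worldwide annual turnover, whichever is higher, for prohibited AI practices; breaching high-risk obligations can cost up to €15 million or 3%.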

In the United States, AI regulation is emerging state by state, and sometimes city by city. California addresses algorithmic discrimination. New York City mandates bias audits for automated employment decision tools. Colorado requires consumer protections for high-risk AI decisions. Each jurisdiction creates different compliance obligations with different timelines.

If you're deploying AI agents across markets, you're not satisfying one regulation; you're navigating multiple frameworks that don't align perfectly. By 2027, some form of AI governance obligation is likely to apply in most major economies you operate in.

For context: an HR agent that screens resumes falls within the EU AI Act's high-risk employment category because it influences hiring decisions. Even a customer service agent that automatically processes refunds could qualify as high-risk if its decisions significantly affect customers' access to essential services. The definition of "high-risk" is broader than many organizations assume.

- Liability: Legal Precedents Are Being Set Now

As AI systems cause real-world harm, courts are establishing who bears responsibility. Organizations face lawsuits for algorithmic bias in lending and hiring, with settlements reaching hundreds of millions of dollars. Autonomous systems involved in accidents create contested liability questions being answered right now in litigation.

Without clear governance, documented decision rights, comprehensive audit trails, and oversight mechanisms, you're exposed to liability you can't defend against when something goes wrong.

The question isn't whether governance will be required. It's whether you'll build it proactively or reactively after a costly incident.

- Technical Reality: Agents Require Continuous Governance

AI agents aren't deterministic systems you can test once and trust forever. They're probabilistic systems that change as environments shift, data drifts, and interactions accumulate. Behavior that looks acceptable at deployment can degrade or combine with other agents in unexpected ways.

Without continuous monitoring, behavioral analysis, and rapid intervention capabilities, you're operating blind. Traditional software testing alone isn't enough for systems that learn, adapt, and act autonomously. Governance for agentic AI has to treat this reality as the default operating condition.

For most organizations, the challenge is no longer whether governance is required, but how to build it in a way that keeps pace with autonomous systems.

- The Business Case: Governance Enables Faster Deployment

Here's what organizations with mature governance frameworks discover: they deploy AI agents faster, not slower.

Why? Because governance removes the organizational paralysis that actually stalls deployment. When policies are clear, controls are proven, and risks are managed, approvals happen quickly. The first agent requires building the governance foundation. The tenth agent inherits those patterns and moves through the process in weeks instead of months.

The organizations struggling to scale agentic AI aren't over-governed. They're constrained by uncertainty: about what's safe, what's allowed, and what could fail in ways they can't explain or defend.

Governance isn't the constraint on agentic AI adoption. It's what makes sustainable scale possible.

Inside the Enterprise Mindset: The Governance Questions That Matter

When organizations evaluate AI agent platforms, a familiar set of questions tends to surface. These aren’t signs of hesitation; they reflect experience. They come from understanding what it means to deploy autonomous systems in environments where failure has real consequences.

Here are the questions serious buyers ask, along with the principles that distinguish credible governance from surface-level assurances.

- How do AI guardrails actually prevent violations, rather than just detect them?

Effective guardrails are enforced technically at runtime, not documented in policy. They block prohibited actions before execution and combine fixed constraints with adaptive controls that adjust authority based on context and risk.

- What prevents AI agents from hallucinating or acting on incorrect information?

Strong governance grounds agents in approved knowledge sources, requires validation or attribution for factual claims, and introduces confidence or verification layers before outputs are acted on in high-impact scenarios.

- How is bias monitored and addressed over time?

Fairness must be monitored continuously, not assessed once. Credible governance tracks outcomes across relevant groups in production and defines clear remediation paths when disparities emerge.

- How are multi-agent workflows governed?

Governance must operate at the system level, not just the agent level. This includes visibility into agent interactions, controls on coordination patterns, and mechanisms to stop workflows when collective behavior becomes unsafe.

- How do we ensure sensitive data is protected?

AI agents should be treated like privileged insiders. Effective governance enforces strict identity, access control, data minimization, and output filtering to prevent unauthorized exposure or leakage.

- What if agents behave in unexpected ways together?

Emergent behavior is expected in multi-agent systems. Governance focuses on detecting, bounding, and isolating problematic patterns rather than assuming perfect predictability.

These questions aren’t obstacles to adoption; they’re indicators of readiness. Platforms and governance frameworks that welcome this level of scrutiny are the ones built for real production use. In the sections that follow, we’ll translate these principles into concrete evaluation criteria buyers can use to assess AI agent platforms with confidence.

The AI Agent Governance Evaluation Framework: What Buyers Must Assess

When evaluating AI agent platforms, governance should carry as much weight as core functionality. A powerful platform that cannot be governed reliably creates risk and liability, not long-term value.

What follows is not a checklist of features, but a framework buyers can use to assess whether a platform is capable of supporting autonomous systems safely at scale.

Guardrails: Control by Design, Not Policy

Effective AI guardrails are enforced architecturally, not documented as expectations. They prevent prohibited actions before execution rather than detecting violations after the fact.

Strong platforms implement guardrails across four dimensions:

- Input controls limit what data agents can access and process, reducing exposure to adversarial attacks and prompt manipulation.

- Process controls shape how decisions are made, enforcing requirements such as confidence thresholds, reasoning steps, or multi-factor validation.

- Output controls prevent sensitive data leakage, inappropriate responses, or regulatory violations.

- Action controls restrict what agents are allowed to execute, including transaction limits, scope boundaries, and escalation triggers.
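To make the four dimensions concrete, here is a minimal Python sketch of a runtime guardrail pipeline. Every name in it (`GuardrailViolation`, `guarded_execute`, the injection pattern, the SSN redaction rule, the 0.8 confidence floor, the 500-unit refund limit) is a hypothetical illustration rather than any platform's API. The point it shows is ordering: all checks run before anything executes, so a violation is prevented rather than logged after the fact.

```python
import re
from dataclasses import dataclass


class GuardrailViolation(Exception):
    """Raised when a request is blocked before execution."""


# Hypothetical policy values, for illustration only.
BLOCKED_INPUT_PATTERNS = [re.compile(r"ignore (all )?previous instructions", re.I)]
MIN_CONFIDENCE = 0.8
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
ALLOWED_ACTIONS = {"issue_refund", "send_reply"}
REFUND_LIMIT = 500.00


@dataclass
class Decision:
    action: str
    amount: float
    confidence: float
    reply: str


def check_input(text: str) -> None:
    # Input control: reject an obvious prompt-manipulation phrasing.
    for pattern in BLOCKED_INPUT_PATTERNS:
        if pattern.search(text):
            raise GuardrailViolation("input: possible prompt injection")


def check_process(decision: Decision) -> None:
    # Process control: require a minimum confidence before acting.
    if decision.confidence < MIN_CONFIDENCE:
        raise GuardrailViolation("process: confidence below threshold")


def check_action(decision: Decision) -> None:
    # Action control: allow-list of actions plus a transaction limit.
    if decision.action not in ALLOWED_ACTIONS:
        raise GuardrailViolation(f"action: {decision.action!r} not permitted")
    if decision.action == "issue_refund" and decision.amount > REFUND_LIMIT:
        raise GuardrailViolation("action: refund exceeds limit, escalate")


def check_output(decision: Decision) -> str:
    # Output control: redact SSN-like strings instead of leaking them.
    return SSN_PATTERN.sub("[REDACTED]", decision.reply)


def guarded_execute(user_input: str, decision: Decision) -> str:
    # All checks run before any side effect happens.
    check_input(user_input)
    check_process(decision)
    check_action(decision)
    return check_output(decision)
```

A real pattern list for prompt injection would be far broader, and regex matching alone is a weak defense. The sketch only shows where each control sits in the flow.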

Observability and Monitoring: Understanding What Agents Do and Why

Traditional monitoring focuses on system health. Agent governance requires observability into behavior. Effective platforms provide continuous visibility into:

- agent actions, decisions, and data access

- behavioral anomalies and policy violations

- performance, cost, and security signals

Decision provenance is critical. Buyers should expect the ability to reconstruct what information an agent considered, what alternatives it evaluated, which policies applied, and how confident it was in its decision. Just as important are control mechanisms that allow teams to intervene quickly through rollbacks, enforcement, or shutdowns when behavior deviates.
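One way to picture decision provenance is as a structured record written at decision time and serialized so it can be replayed months later. The sketch below assumes a hypothetical `DecisionRecord` schema with fields mirroring the list above: inputs considered, alternatives evaluated, policies applied, and confidence. It is an illustration, not a specific product's log format.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    agent_id: str
    inputs_considered: list[str]      # data the agent actually looked at
    alternatives: dict[str, float]    # option -> score the agent assigned
    policies_applied: list[str]       # guardrails that were in force
    chosen: str
    confidence: float
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # Stable key order makes records easy to diff and hash.
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> DecisionRecord:
        return cls(**json.loads(raw))


def explain(record: DecisionRecord) -> str:
    """Plain-language summary for an auditor reconstructing the decision."""
    others = [opt for opt in record.alternatives if opt != record.chosen]
    return (
        f"{record.agent_id} chose {record.chosen!r} "
        f"(confidence {record.confidence:.2f}) over {others}, "
        f"under policies {record.policies_applied}"
    )
```

Because the record round-trips through JSON unchanged, the same data can feed an operator dashboard, a drift analysis, or an auditor's report.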

Evaluation and Testing: Governing Probabilistic Systems

AI agents do not behave deterministically. The same input may produce different outputs, which changes how systems must be evaluated. Instead of pass/fail testing, governance relies on statistical consistency over time. Buyers should look for:

- defined accuracy and reliability thresholds tied to decision risk

- continuous evaluation throughout the agent lifecycle

- separate assessment of individual agents and multi-agent interactions, where failures often emerge
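Statistical testing can be sketched as follows: run the same case many times, then pass the agent only if the *lower bound* of a confidence interval on its success rate clears a risk-tiered threshold. The Wilson score interval used here is a standard technique we have chosen for illustration. The tier thresholds and the `evaluate` helper are hypothetical.

```python
from __future__ import annotations

import math
from typing import Callable

# Hypothetical thresholds: higher-risk decisions demand higher reliability.
THRESHOLDS = {"low": 0.90, "medium": 0.95, "high": 0.99}


def wilson_lower_bound(successes: int, trials: int, z: float = 1.96) -> float:
    """Lower bound of the Wilson score interval (about 95% confidence at z=1.96)."""
    if trials == 0:
        return 0.0
    p = successes / trials
    denom = 1 + z**2 / trials
    centre = p + z**2 / (2 * trials)
    margin = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2))
    return (centre - margin) / denom


def evaluate(agent: Callable[[str], str], case: str, expected: str,
             risk: str, trials: int = 200) -> tuple[float, bool]:
    """Repeat one input; pass only if reliability is demonstrably high enough."""
    successes = sum(agent(case) == expected for _ in range(trials))
    lower = wilson_lower_bound(successes, trials)
    return lower, lower >= THRESHOLDS[risk]
```

A useful consequence: even a perfect 200-for-200 run only supports a lower bound of about 0.981, which clears the medium tier but not the high one. Higher-risk decisions need more trials before they can pass, which is exactly the behavior a risk-tiered evaluation should have.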

Data Security and Access Control: Treating Agents as Privileged Insiders

Agents with system access should be governed like high-privilege users. Effective governance includes:

- unique identities for each agent and clear ownership

- strict access control and data minimization

- output protections that prevent sensitive information disclosure

- defenses against AI-specific threats such as prompt injection and data poisoning

Security here is not about trust in intent, but about enforcing least privilege by design.
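Least privilege by design can be sketched as a deny-by-default check against explicit grants tied to a named owner, combined with field-level data minimization. The `AgentIdentity` type, the scope strings, and the helpers below are hypothetical examples. A production system would back them with a real identity provider rather than in-memory objects.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class AgentIdentity:
    agent_id: str           # unique per agent, never shared
    owner: str              # accountable human or team
    scopes: frozenset[str]  # explicit grants; everything else is denied


class AccessDenied(Exception):
    pass


def authorize(agent: AgentIdentity, scope: str) -> None:
    # Deny by default: only explicitly granted scopes pass.
    if scope not in agent.scopes:
        raise AccessDenied(f"{agent.agent_id} (owner {agent.owner}) lacks {scope!r}")


def minimize(record: dict, allowed_fields: set[str]) -> dict:
    # Data minimization: pass the agent only the fields its task needs.
    return {k: v for k, v in record.items() if k in allowed_fields}
```

Naming the owner in every denial message keeps accountability visible at the moment something is blocked, not just in a later review.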

Model and Tooling Supply-Chain Governance

AI agents do not operate in isolation. Their behavior depends on underlying models, external tools, plugins, and data sources that evolve independently.

Buyers should assess how platforms govern model provenance, versioning, and updates, particularly when foundation models change behavior over time. Equally important are controls over third-party tools, plugins, and open-source dependencies that expand the agent’s effective authority.

In practice, agents depend on components that change outside your control:

- Foundation models (GPT, Claude, Gemini) that providers update frequently

- Third-party APIs and tools that agents invoke

- Open-source libraries and dependencies

Critical evaluation question:

"What happens when OpenAI updates GPT-5, and my agent starts behaving differently?"

Required capabilities:

- Version pinning (lock agents to specific model versions)

- Pre-production testing of model updates

- Rollback mechanisms when updates cause issues

- Approval workflows for adding new tools to agents
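The first three capabilities can be sketched as a pinned model version that changes only through a gated promotion and can be rolled back. The `ModelPin` class and version strings such as `model-x@v1` are hypothetical placeholders, not any provider's identifiers. Note that pinning only works if the provider exposes fixed, versioned model snapshots.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelPin:
    agent_id: str
    pinned: str                                  # exact version in production
    history: List[str] = field(default_factory=list)

    def promote(self, candidate: str, eval_passed: bool,
                approved_by: Optional[str]) -> None:
        # A new version reaches production only after pre-production
        # evaluation passes and a named person approves it.
        if not eval_passed:
            raise ValueError(f"{candidate} failed pre-production evaluation")
        if not approved_by:
            raise PermissionError(f"{candidate} requires an approver")
        self.history.append(self.pinned)
        self.pinned = candidate

    def rollback(self) -> str:
        # Restore the previously pinned version if an update misbehaves.
        if not self.history:
            raise RuntimeError("no earlier version to roll back to")
        self.pinned = self.history.pop()
        return self.pinned
```

The same gate pattern extends naturally to the fourth capability: a new tool would be added to an agent only through an approval step like `promote`.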

As regulatory scrutiny increases, governance of the AI supply chain is becoming as important as governance of the agent itself.

Trust, Reliability, and Drift Management

Because agent behavior can change over time, governance must account for uncertainty. Buyers should expect mechanisms for:

- managing nondeterministic behavior through confidence thresholds and acceptable variance ranges

- detecting drift in performance, behavior, fairness, and compliance

- governing underlying models through versioning, monitoring, and controlled updates

Four types of drift that governance must detect:

- Data drift: Input distributions change (customer demographics shift)

- Concept drift: Relationships change (fraud patterns evolve)

- Performance drift: Accuracy degrades over time

- Behavioral drift: Agent strategies change in unintended ways

Without this, systems that appear reliable at deployment can quietly degrade in production.
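Data drift, the first of the four types, is often tracked with the Population Stability Index (PSI), which compares how a live sample is distributed across bins relative to a baseline. The sketch below covers data drift only. Concept, performance, and behavioral drift require outcome labels or behavioral metrics instead. The bin edges are hypothetical, and the 0.1 and 0.25 bands are a common rule of thumb, not a regulatory standard.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a live sample."""
    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * (len(edges) - 1)
        for v in values:
            # Out-of-range values are clipped into the first or last bin.
            i = min(max(bisect_right(edges, v) - 1, 0), len(counts) - 1)
            counts[i] += 1
        total = max(sum(counts), 1)
        # eps avoids log(0) for empty bins.
        return [max(c / total, eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(score: float) -> str:
    # Rule-of-thumb bands; tune per use case.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift: investigate"
    return "significant drift: alert owner"
```

Run on a schedule against a frozen baseline, a check like this turns "customer demographics shift" from an anecdote into an alert with a named owner.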

Bias and Fairness Controls

Bias can emerge after deployment through feedback loops, even when initial testing shows no issues.

Effective governance includes continuous monitoring of outcomes across relevant groups, clearly defined fairness metrics, and predefined remediation processes. The key question for buyers is not whether bias can be detected, but whether there is a clear, enforceable response when it is.

Detection without remediation is insufficient. When bias is detected, the remediation workflow should cover:

- Containment: Reduce agent autonomy immediately (force human review)

- Assessment: Understand scope (how many customers affected)

- Root cause: Identify why bias emerged (feedback loop, data drift, model issue)

- Correction: Fix underlying issue (retrain, adjust policy, update data)

- Communication: Notify affected customers and remediate harm
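The detection and containment steps can be sketched with the impact ratio behind the "four-fifths rule", a screening heuristic from US employment guidance. A group is flagged when its approval rate falls below 80% of the highest group's rate. The `monitor` helper and the `agent_state` dictionary are hypothetical. This check is only a first screen; the assessment, root-cause, correction, and communication steps remain human work.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def selection_rates(outcomes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """outcomes: (group, approved) pairs observed in production."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in outcomes:
        totals[group] += 1
        approved[group] += ok
    return {g: approved[g] / totals[g] for g in totals}


def impact_ratios(rates: Dict[str, float]) -> Dict[str, float]:
    # Each group's rate relative to the most-favored group.
    best = max(rates.values())
    return {g: (r / best if best else 1.0) for g, r in rates.items()}


def monitor(outcomes, agent_state: dict, threshold: float = 0.8) -> List[str]:
    ratios = impact_ratios(selection_rates(outcomes))
    flagged = sorted(g for g, r in ratios.items() if r < threshold)
    if flagged:
        # Containment first: force human review while assessment runs.
        agent_state["autonomy"] = "human_review_required"
        agent_state["open_incident"] = {"groups": flagged, "ratios": ratios}
    return flagged
```

Tying containment directly to detection means the agent loses autonomy the moment a disparity appears, rather than after an investigation concludes.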

Accuracy and Misinformation Prevention

Hallucinations represent governance failures, not just model limitations. For consequential actions, grounding alone is insufficient.

Additional safeguards include:

- Multi-source validation: Require corroboration from multiple sources

- Confidence thresholds: Only act when certainty exceeds threshold (e.g., 95%)

- Human review gates: Certain decisions require human confirmation regardless of confidence

- Deterministic fallback: Critical paths use rule-based logic, not LLMs

Strong platforms ground agents in approved knowledge sources, enforce validation for factual claims, and introduce additional checks or human review for high-stakes communications.
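The four safeguards can be combined into a single routing decision made before an output is acted on. The sketch below is one hypothetical ordering: deterministic paths first, then mandatory human gates, then corroboration, then confidence. The action names, the two-source minimum, and the 0.95 threshold (borrowed from the example above) are illustrative only.

```python
from enum import Enum
from typing import List


class Route(Enum):
    ACT = "act"
    HUMAN_REVIEW = "human_review"
    DETERMINISTIC = "deterministic_fallback"


# Hypothetical configuration.
RULE_BASED = {"tax_calculation"}                 # never delegated to an LLM
ALWAYS_REVIEW = {"close_account", "legal_notice"}
CONFIDENCE_THRESHOLD = 0.95
MIN_SOURCES = 2


def route(action: str, confidence: float, supporting_sources: List[str]) -> Route:
    if action in RULE_BASED:
        return Route.DETERMINISTIC   # critical path uses rule-based logic
    if action in ALWAYS_REVIEW:
        return Route.HUMAN_REVIEW    # human gate regardless of confidence
    if len(set(supporting_sources)) < MIN_SOURCES:
        return Route.HUMAN_REVIEW    # not corroborated by enough distinct sources
    if confidence < CONFIDENCE_THRESHOLD:
        return Route.HUMAN_REVIEW
    return Route.ACT
```

One caution: a confidence score a language model reports about itself is not necessarily well calibrated. Thresholds like this work best when the score comes from a validated estimator.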

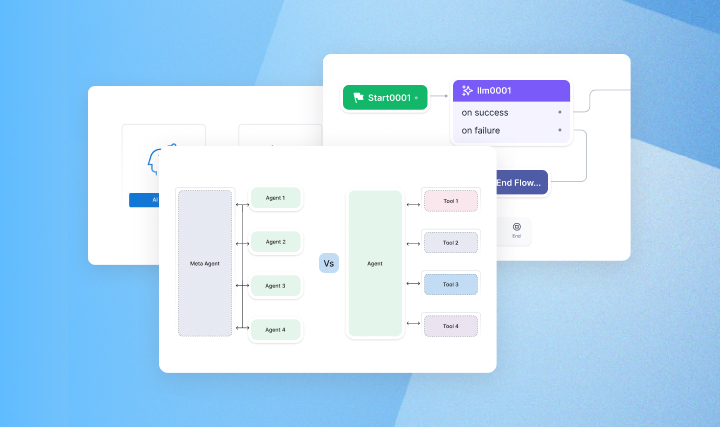

Multi-Agent Orchestration and Control

As soon as multiple agents interact, governance must operate at the system level. Buyers should look for:

- defined rules for agent-to-agent communication

- system-level monitoring for emergent or cascading behavior

- mechanisms such as circuit breakers and isolation to limit the blast radius when failures occur
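A circuit breaker for agent workflows can be sketched as a wrapper that counts failures in a sliding time window and halts further calls once a limit is hit. Classic circuit breakers reopen automatically after a cool-down. This hypothetical variant stays open until a person calls `reset`, which better fits a governance setting. The limits shown are illustrative, and the injectable `clock` exists only to make the behavior testable.

```python
import time
from typing import Callable, List


class CircuitBreaker:
    """Trips after repeated failures within a window; stays open until reset."""

    def __init__(self, max_failures: int = 3, window_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.window_s = window_s
        self.clock = clock
        self.failures: List[float] = []
        self.open = False

    def record_failure(self) -> None:
        now = self.clock()
        # Keep only failures inside the sliding window.
        self.failures = [t for t in self.failures if now - t < self.window_s]
        self.failures.append(now)
        if len(self.failures) >= self.max_failures:
            self.open = True

    def call(self, fn, *args, **kwargs):
        if self.open:
            raise RuntimeError("circuit open: workflow halted pending review")
        try:
            return fn(*args, **kwargs)
        except Exception:
            self.record_failure()
            raise

    def reset(self) -> None:
        # Manual reset, intended to follow human review.
        self.failures.clear()
        self.open = False
```

Giving each agent-to-agent link its own breaker is one way to limit blast radius: a misbehaving downstream agent trips only the links that depend on it.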

Human Oversight and Emergency Control

Autonomy creates value, but governance requires the ability to intervene.

Effective platforms support graduated autonomy, allowing different levels of human involvement based on risk. They also provide clear emergency controls, including immediate shutdowns, overrides, and escalation paths.
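Graduated autonomy and an emergency stop can be sketched together: each risk tier caps how much autonomy an agent may exercise, and a global kill switch overrides everything. The four autonomy levels, the tier mapping, and `may_execute` are hypothetical. Unknown risk tiers fall back to the most restrictive level so that the sketch fails safe.

```python
import threading
from enum import IntEnum


class Autonomy(IntEnum):
    SUGGEST_ONLY = 0       # agent proposes, a human executes
    ACT_WITH_APPROVAL = 1  # agent executes after human sign-off
    ACT_AND_NOTIFY = 2     # agent executes, a human is informed afterwards
    FULL = 3               # agent executes within guardrails


# Hypothetical mapping from risk tier to the maximum autonomy allowed.
MAX_AUTONOMY = {
    "high": Autonomy.ACT_WITH_APPROVAL,
    "medium": Autonomy.ACT_AND_NOTIFY,
    "low": Autonomy.FULL,
}

KILL_SWITCH = threading.Event()  # set by an operator to halt all agents


def may_execute(risk: str, requested: Autonomy, human_approved: bool) -> bool:
    if KILL_SWITCH.is_set():
        return False  # emergency stop overrides everything
    # Unknown tiers fall back to the most restrictive level.
    level = min(requested, MAX_AUTONOMY.get(risk, Autonomy.SUGGEST_ONLY))
    if level == Autonomy.SUGGEST_ONLY:
        return False
    if level == Autonomy.ACT_WITH_APPROVAL:
        return human_approved
    return True
```

Using `threading.Event` for the switch means any thread can trip it, and every agent sees the change on its next check.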

Regulatory Alignment and Evidence Generation

Finally, governance must stand up to scrutiny.

What should be automatically generated:

- Agent inventory (what agents exist, what they do, who owns them)

- Decision logs (complete provenance for all consequential decisions)

- Policy enforcement records (guardrail violations and how they were blocked)

- Fairness monitoring reports (demographic analysis over time)

- Incident investigation records (root cause, remediation, outcomes)

Buyers should assess whether platforms support recognized frameworks such as ISO/IEC 42001, the NIST AI Risk Management Framework, and evolving industry requirements. Just as important is automatic documentation: audit trails, policy enforcement logs, bias reports, and incident records that can be produced when regulators or auditors ask, without manual reconstruction.
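Audit trails that hold up under scrutiny are often tamper-evident. One common technique is a hash chain, where each entry commits to the hash of the entry before it, so editing any past record breaks verification from that point on. The `AuditLog` class below is a hypothetical in-memory sketch of that idea using SHA-256 from Python's standard library.

```python
import hashlib
import json


class AuditLog:
    """Append-only log; each entry commits to the hash of the previous one."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev: str, body: str) -> str:
        return hashlib.sha256((prev + body).encode()).hexdigest()

    def append(self, event: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = json.dumps(event, sort_keys=True)  # canonical form
        digest = self._digest(prev, body)
        # Store a copy so later changes to the caller's dict don't leak in.
        self.entries.append({"event": json.loads(body), "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            body = json.dumps(entry["event"], sort_keys=True)
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, body):
                return False
            prev = entry["hash"]
        return True
```

A hash chain makes tampering detectable, not impossible. Someone who can rewrite the whole log could recompute every hash, so in practice the latest hash is typically anchored somewhere the log's writers cannot alter.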

5. Governance in Practice: The Kore.ai Approach

The following illustrates how governance principles described in this guide translate into production capabilities.

5.1 Governance as Foundation, Not Feature

Most platforms bolt governance onto agents after building them. Kore.ai inverts this, providing governance infrastructure within which agents are built. This architectural choice reflects a core belief: governance enables velocity by building confidence for rapid, responsible scaling.

5.2 Kore.ai's AI Security, Compliance & Governance Capabilities

- Constitutional enforcement: Every agent operates within organizational boundaries enforced by the platform runtime, not policy documents. What agents cannot do is architecturally impossible, not just discouraged.

- Comprehensive guardrails: All four dimensions (input, process, output, and action) with actual prevention, not just detection. The adaptive policy engine adjusts constraints in real time based on customer trust, transaction characteristics, and risk indicators while maintaining constitutional boundaries.

- Native observability: Decision provenance automatically captures inputs considered, alternatives evaluated, choices made, confidence levels, and policies applied. This enables stakeholder-appropriate explanations, drift analysis, and complete decision reconstruction.

- Identity and accountability: Every agent has a unique cryptographic identity, and every action is attributed to a specific agent linked to an accountable owner. No ambiguity when issues arise.

- Multi-agent governance: System-level monitoring tracks how agents communicate, influence each other, and create feedback loops. Circuit breakers automatically disable workflows exhibiting concerning patterns.

- Immutable audit trails: Comprehensive, cryptographically verified logging of all agent activity, automatically generated, not custom-built.

5.3 The Responsible AI Framework

- Fairness: Continuous demographic monitoring detects disparate impact. Automated assessments against fairness metrics trigger investigation and remediation.

- Transparency: Multi-level explainability for users, operators, and auditors. Clear AI versus human identification.

- Privacy: Data minimization is enforced through access controls, and privacy violations are blocked at the guardrail layer.

- Human oversight: Graduated autonomy calibrates oversight to risk. Emergency controls enable immediate intervention.

5.4 Real-World Benefits

- Faster deployment: The first agent requires defining governance patterns. The tenth agent deploys 3-5x faster by inheriting proven infrastructure.

- Reduced compliance costs: Built-in audit trails and automated documentation eliminate manual work, and regulatory evidence is pre-structured.

- Enhanced trust: Demonstrable governance accelerates legal approval, security sign-off, and customer confidence.

- Regulatory readiness: Foundational governance adapts as regulations evolve. Compliance becomes an ongoing state rather than a scramble for documentation.

Governance investment front-loads complexity into reusable infrastructure, creating compounding advantages at scale.

Conclusion: From Anxiety to Confident Action

The questions organizations are asking about AI agent governance are not signs of hesitation. They are signs of maturity. As AI agents move from experimentation into production, concerns around data security, bias, reliability, and accountability become practical operational requirements rather than abstract ethical debates.

What has become clear is that AI agent governance is not what slows adoption. It is what makes adoption viable. Regulatory pressure is increasing, liability boundaries are being tested in real cases, and agentic systems are growing more complex and interconnected. In this environment, deploying autonomous AI without robust governance is no longer a calculated risk; it is an unmanaged one.

Organizations that invest in governance early discover that it removes the uncertainty that stalls progress. Clear boundaries enable faster approvals. Runtime controls build trust across legal, security, and compliance teams. Continuous monitoring and decision provenance ensure that when issues arise, they can be detected, explained, and addressed quickly.

AI agent governance is not a constraint on innovation. It is the foundation that allows autonomy to scale responsibly. As regulations tighten, systems grow more complex, and expectations increase, organizations that treat governance as core infrastructure will move faster and with greater confidence than those that treat it as an afterthought.

Now let's turn that into action. Explore how Kore.ai's AI agent platform builds governance as a foundation, not a feature, enabling you to deploy autonomous AI with the confidence that comes from comprehensive security, compliance, and control.
