Parallel Agent Processing

Parallel AI agent processing is a practical response to one of the most persistent challenges in agentic AI: latency.

Nathan Schlaffer

Cobus Greyling

January 16, 2026

Introduction

Parallel AI agent processing is a practical response to one of the most persistent challenges in agentic AI: latency. Rather than forcing a single agent to reason, plan, and act sequentially, this approach distributes work across multiple specialized agents that operate concurrently on different aspects of the same problem.

In the emerging world of agentic AI, latency has become a defining constraint. Research and early enterprise deployments show that organizations often adapt by selecting latency-tolerant use cases instead of addressing the architectural root cause. This creates a ceiling on where and how AI agents can be deployed in production.

AI agents are highly capable. They can decompose complex tasks, reason step by step, and take meaningful actions across systems. However, when every step is executed in sequence, response times routinely stretch into tens of seconds. In interactive or operational workflows, this delay quietly undermines usability, trust, and adoption.

Parallel agent processing reframes the problem. By decomposing a task into independent subtasks and executing them simultaneously across multiple agents, organizations can dramatically reduce end-to-end response time while preserving output quality.

Basic AI Agent Architecture Design Decision

Why Parallel Matters

Consider a simple but realistic example: analyzing a detailed product review. A useful outcome might include extracting key features, summarizing pros and cons, assessing sentiment, and producing a recommendation.

In a traditional sequential setup, a single orchestration agent invokes each tool or reasoning step one after the other. Each step must complete before the next begins. The result is predictable but slow, with total execution times often reaching 30 seconds or more.
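To make the cost of sequencing concrete, here is a minimal Python sketch of the sequential setup. The four step names mirror the product-review example above, but `run_agent`, `sequential_orchestrator`, and the latencies in `SIMULATED_LATENCY` are hypothetical stand-ins: each "agent" simply sleeps instead of calling a model. Because every call blocks until it returns, total time is roughly the sum of the individual step latencies.

```python
import time

# Hypothetical sub-task "agents": each stands in for an LLM call and just
# sleeps for a fixed, made-up latency (in seconds) before returning a result.
SIMULATED_LATENCY = {
    "features": 0.20,
    "pros_cons": 0.15,
    "sentiment": 0.10,
    "recommendation": 0.15,
}


def run_agent(name: str, review: str) -> str:
    """Stand-in for one agent call; blocks for its simulated latency."""
    time.sleep(SIMULATED_LATENCY[name])
    return f"{name} result for review of {len(review)} chars"


def sequential_orchestrator(review: str) -> dict[str, str]:
    """Invoke each step one after another; each waits for the previous one."""
    results = {}
    for name in SIMULATED_LATENCY:
        results[name] = run_agent(name, review)
    return results


if __name__ == "__main__":
    start = time.perf_counter()
    out = sequential_orchestrator("Great battery life, but the screen scratches easily.")
    elapsed = time.perf_counter() - start
    # Total time is roughly the sum of all step latencies (about 0.6 s here).
    print(f"{len(out)} steps in {elapsed:.2f} s")
```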

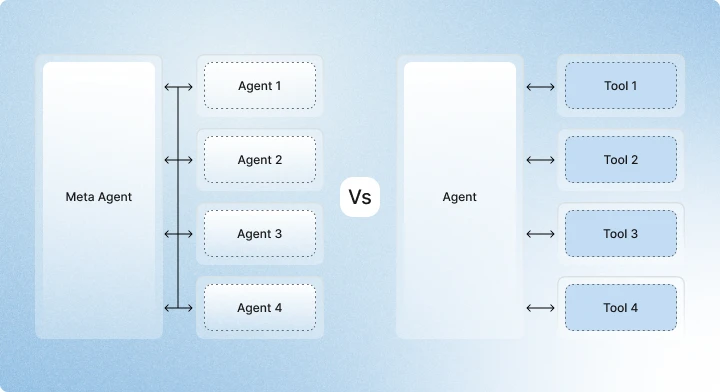

In a parallel agent architecture, each subtask is handled by a dedicated agent. One agent focuses on feature extraction. Another evaluates pros and cons. A third assesses sentiment. A fourth generates a recommendation. These agents execute concurrently.

A central orchestration agent coordinates the process by distributing work at the start and synthesizing results at the end. In benchmarks of this example, the parallel execution phase completes in just over six seconds, with consolidation adding several more, so the total runtime drops by more than half.
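The fan-out/fan-in pattern described above can be sketched with Python's `asyncio`. As in a sequential baseline, the agents here are hypothetical and simulated with `asyncio.sleep` and made-up latencies; `run_agent`, `synthesize`, and `parallel_orchestrator` are illustrative names, not a framework API. If step i takes t_i seconds and consolidation takes t_synth, sequential execution costs roughly the sum of the t_i, while this version costs roughly the largest t_i plus t_synth.

```python
import asyncio
import time

# Hypothetical specialist agents, each simulated with a made-up latency (seconds).
SIMULATED_LATENCY = {
    "features": 0.20,
    "pros_cons": 0.15,
    "sentiment": 0.10,
    "recommendation": 0.15,
}
SYNTHESIS_LATENCY = 0.05  # assumed cost of the consolidation step


async def run_agent(name: str, review: str) -> tuple[str, str]:
    """Stand-in for one specialist agent; awaits instead of blocking."""
    await asyncio.sleep(SIMULATED_LATENCY[name])
    return name, f"{name} result for review of {len(review)} chars"


async def synthesize(partials: dict[str, str]) -> str:
    """The central orchestrator merges the independent results at the end."""
    await asyncio.sleep(SYNTHESIS_LATENCY)
    return "; ".join(f"{k}: {v}" for k, v in partials.items())


async def parallel_orchestrator(review: str) -> str:
    # Fan out: start every specialist at once and wait for all of them.
    pairs = await asyncio.gather(*(run_agent(n, review) for n in SIMULATED_LATENCY))
    # Fan in: consolidate only after every branch has finished.
    return await synthesize(dict(pairs))


if __name__ == "__main__":
    start = time.perf_counter()
    report = asyncio.run(parallel_orchestrator("Great battery life, but the screen scratches easily."))
    elapsed = time.perf_counter() - start
    # Wall time is roughly max(latencies) + synthesis (about 0.25 s),
    # versus sum(latencies) (about 0.6 s) if the same steps ran in sequence.
    print(f"done in {elapsed:.2f} s")
```

With these assumed numbers the parallel run takes about 0.25 s instead of about 0.6 s. Real gains depend on how evenly the work splits across agents and on how expensive the synthesis step is.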

This is not a theoretical optimization. Experiments using modern agent frameworks clearly demonstrate overlapping execution timelines for parallel agents compared to the long, linear chains created by sequential processing. The difference is visible, measurable, and material.

Finding the Right Balance with AI Agent Configuration

When to Go Parallel

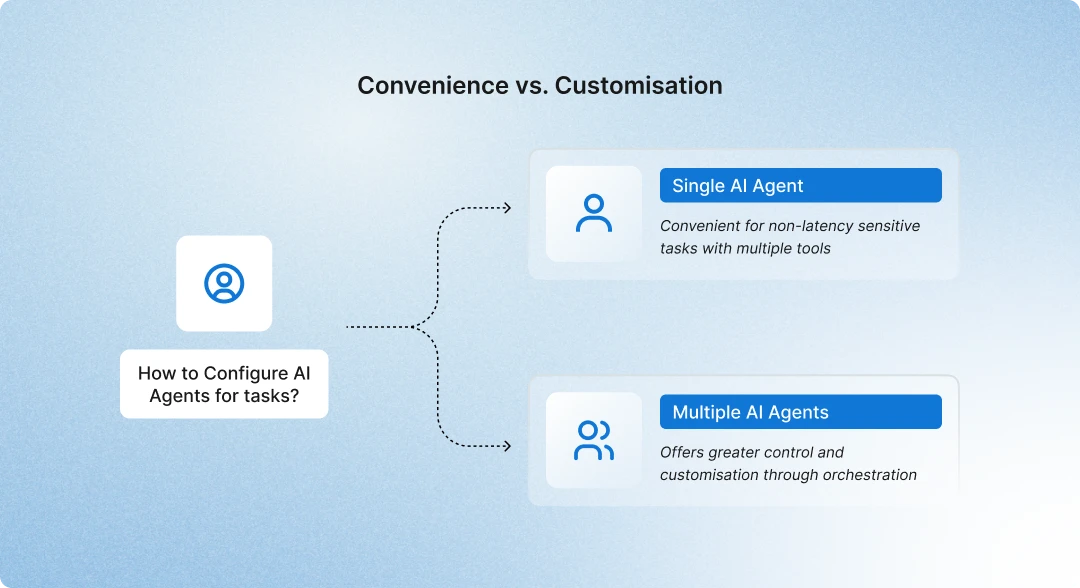

Parallel agent processing is not a universal solution. Its effectiveness depends on thoughtful upfront triage and a clear understanding of task structure.

Tasks that can be decomposed into independent subtasks are strong candidates for parallel execution. When work streams do not rely on one another, multiple agents can operate simultaneously and produce outputs that are later synthesized without conflict.

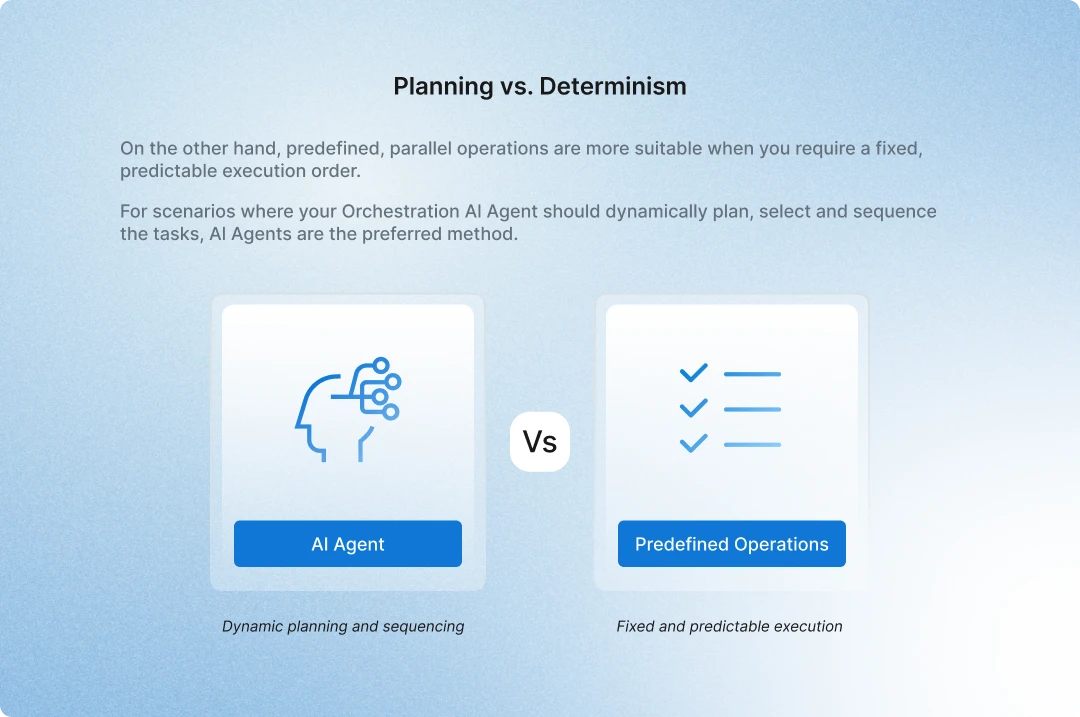

By contrast, interdependent steps require sequential execution. When one action depends on the output of another, forcing parallelism introduces coordination risk and increases the likelihood of errors.
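One way to honor both rules in a single orchestrator is to describe the work as a dependency graph and let each step start as soon as its own inputs are ready. The sketch below assumes a hypothetical `translate` step that must run before the four analyses (for example, for a review written in another language) and a `synthesis` step that needs all four results. Independent steps then overlap automatically, and dependent ones wait. The graph, `run_step`, and `run_graph` are illustrative, and the code assumes the graph has no cycles.

```python
import asyncio

# Hypothetical task graph: each step lists the steps whose output it needs.
# "translate" must finish first, the four analyses are independent of each
# other, and "synthesis" needs all four of their results.
DEPENDS_ON = {
    "translate": [],
    "features": ["translate"],
    "pros_cons": ["translate"],
    "sentiment": ["translate"],
    "recommendation": ["translate"],
    "synthesis": ["features", "pros_cons", "sentiment", "recommendation"],
}
STEP_LATENCY = 0.05  # assumed simulated latency per step, in seconds


async def run_step(name: str, inputs: dict[str, str]) -> str:
    """Stand-in for an agent call; reports which upstream outputs it received."""
    await asyncio.sleep(STEP_LATENCY)
    return f"{name}({', '.join(sorted(inputs))})"


async def run_graph(graph: dict[str, list[str]]) -> dict[str, str]:
    """Run every step as early as its dependencies allow (graph must be acyclic)."""
    tasks: dict[str, asyncio.Task] = {}

    async def run_node(name: str) -> str:
        # Block only on this step's own dependencies, then run it.
        upstream = {dep: await tasks[dep] for dep in graph[name]}
        return await run_step(name, upstream)

    # All tasks are created before any of them starts running (there is no
    # await in this loop), so every lookup in run_node finds its task.
    for name in graph:
        tasks[name] = asyncio.create_task(run_node(name))
    return {name: await task for name, task in tasks.items()}


if __name__ == "__main__":
    results = asyncio.run(run_graph(DEPENDS_ON))
    print(results["synthesis"])
    # synthesis(features, pros_cons, recommendation, sentiment)
```

Here the critical path is three steps long (translate, any one analysis, synthesis), so wall time is about three step latencies rather than the six a fully sequential chain would take.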

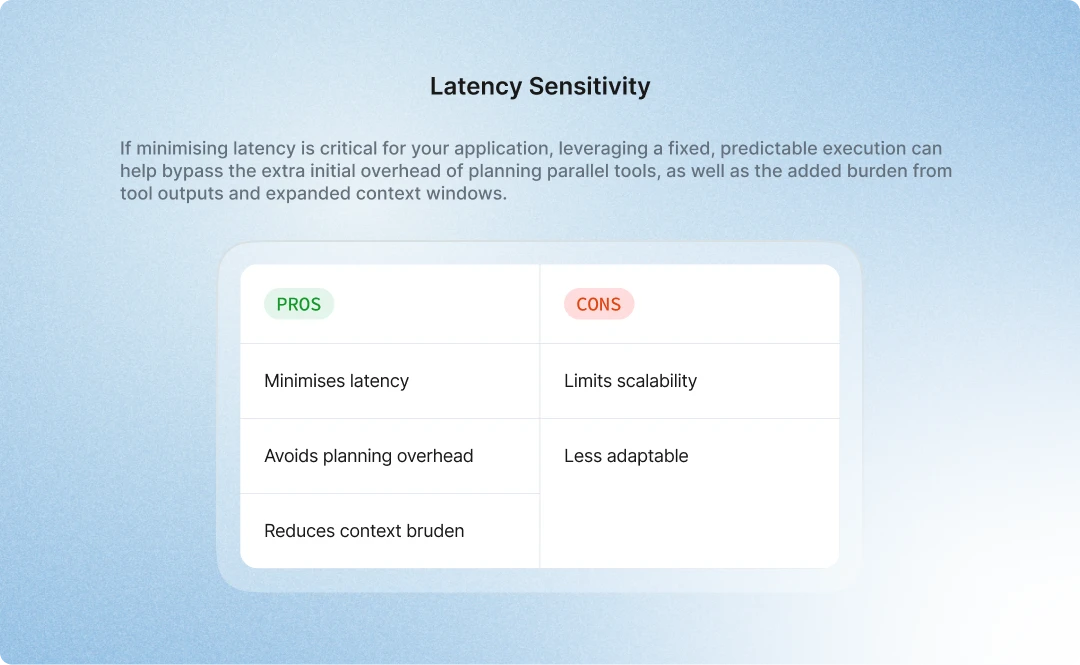

Latency requirements often determine the right approach. In scenarios where responsiveness is critical, such as real-time customer support or interactive enterprise applications, fixed and predictable parallel execution minimizes overhead. It avoids the cost of dynamic planning and prevents context windows from expanding unnecessarily.
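The context-window point can be illustrated without calling any model. In this hypothetical sketch, `FIXED_PLAN` is decided up front, so no runtime planner is needed, and each parallel branch receives only the review plus its own instruction. The contrasting `chained_prompts` assumes a single sequential agent that appends each step's simulated, fixed-length output to a running transcript, so later prompts keep growing. The names, instructions, and output length are illustrative assumptions.

```python
# Hypothetical fixed plan: instructions are chosen up front, not by a runtime planner.
FIXED_PLAN = {
    "features": "List the key product features mentioned.",
    "pros_cons": "Summarize the pros and cons.",
    "sentiment": "Classify the overall sentiment.",
    "recommendation": "Recommend whether to buy.",
}


def parallel_prompts(review: str) -> dict[str, str]:
    """Each parallel branch sees only the review and its own instruction."""
    return {name: f"{instr}\n\nReview:\n{review}" for name, instr in FIXED_PLAN.items()}


def chained_prompts(review: str, fake_output_len: int = 200) -> dict[str, str]:
    """A single sequential agent carries every earlier step's output forward."""
    transcript = f"Review:\n{review}"
    prompts = {}
    for name, instr in FIXED_PLAN.items():
        prompts[name] = f"{transcript}\n\n{instr}"
        # Simulate this step's answer being appended to the running context.
        transcript += "\n" + "x" * fake_output_len
    return prompts


if __name__ == "__main__":
    review = "Great battery life, but the screen scratches easily."
    parallel, chained = parallel_prompts(review), chained_prompts(review)
    for name in FIXED_PLAN:
        print(f"{name:15} parallel={len(parallel[name]):4} chained={len(chained[name]):4}")
```

Prompt length in characters is only a rough proxy for context size; real token counts depend on the model's tokenizer.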

For workflows that are less time sensitive, simplicity often wins. A single versatile agent equipped with multiple tools can be easier to manage and sufficient for the task at hand.

Real-World Trends in Agentic AI

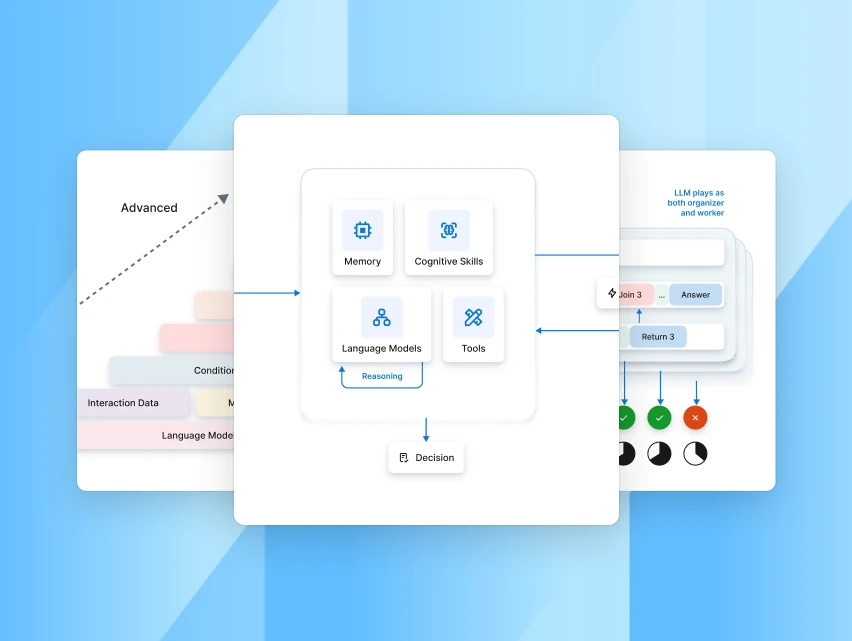

Leading AI platforms are already moving toward multi-model, parallel orchestration patterns. Rather than relying on a single monolithic agent, systems increasingly route work through pipelines of specialized agents responsible for distinct stages of execution.

OpenAI’s own architectures reflect this shift. Queries are processed through agents focused on triage, clarification, instruction building, and research, each contributing a specific capability to the overall outcome.

Efficiency is further improved through model specialization. Lightweight, cost-effective models handle early-stage tasks such as disambiguation, intent clarification, and prompt refinement. Larger and more powerful models are reserved for deep reasoning and synthesis. This modular design significantly reduces both latency and operational cost for long-running workflows.
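The staged pipeline and model specialization described in the two paragraphs above can be sketched as a simple routing table. The stage names follow the text; the tier labels `small-model` and `large-model`, the `call_model` placeholder, and the choice to send only `research` to the larger model are assumptions for illustration, not a description of OpenAI's implementation or of any vendor API.

```python
from dataclasses import dataclass

# Hypothetical tier labels; substitute whichever small and large models you use.
SMALL_MODEL = "small-model"
LARGE_MODEL = "large-model"

# Stages named in the text, each mapped to an assumed model tier: cheap, fast
# models for early-stage work, the large model only for deep research.
STAGE_MODEL = {
    "triage": SMALL_MODEL,
    "clarification": SMALL_MODEL,
    "instruction_building": SMALL_MODEL,
    "research": LARGE_MODEL,
}


@dataclass
class StageResult:
    stage: str
    model: str
    output: str


def call_model(model: str, prompt: str) -> str:
    """Placeholder for a real model call; it only echoes for illustration."""
    return f"[{model}] {prompt[:40]}"


def run_pipeline(query: str) -> list[StageResult]:
    """Pass the query through each specialized stage in order."""
    results = []
    text = query
    for stage, model in STAGE_MODEL.items():
        # Each stage's output becomes the next stage's input.
        text = call_model(model, f"{stage}: {text}")
        results.append(StageResult(stage, model, text))
    return results


if __name__ == "__main__":
    for r in run_pipeline("Compare EV battery warranties"):
        print(r.stage, r.model)
```

Moving a stage to a different tier is a one-line change to `STAGE_MODEL`, which is one reason this kind of modular design can be tuned for latency and cost.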

Many AI leaders are converging on the same conclusion. Small language models are well suited as the foundation of agentic systems. They offer lower latency, smaller memory footprints, and easier fine-tuning. Larger models are invoked selectively, only when the task demands greater depth or nuance.

The Bottom Line

Parallel agent processing fundamentally reshapes what agentic AI can deliver in production environments. By decomposing work into independent components and orchestrating multiple agents concurrently, organizations can achieve meaningful reductions in latency without compromising output quality.

As multi-agent frameworks mature and small language models continue to improve, parallel execution will become the default architectural pattern for enterprise systems. The future of agentic workflows is not a single agent attempting to do everything. It is a coordinated system of specialized agents operating together with speed, efficiency, and precision.

Looking ahead, platforms like Kore.ai are accelerating this transition by making parallel agent orchestration accessible at scale.

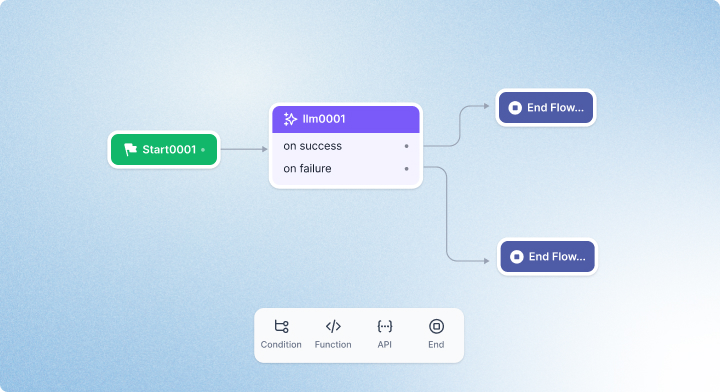

Through no-code process flow builders, visual workflow design, parallel execution branches, and built-in multi-agent orchestration, teams can create sophisticated agentic AI workflows without deep engineering expertise. This democratization of parallel agent processing enables enterprises to deploy coordinated teams of specialized agents that operate simultaneously, delivering faster and more scalable automation for complex real-world processes.

To see how this works in practice, explore the Kore.ai Agent Platform and learn how to design, orchestrate, and deploy parallel AI agents for enterprise-grade use cases.
