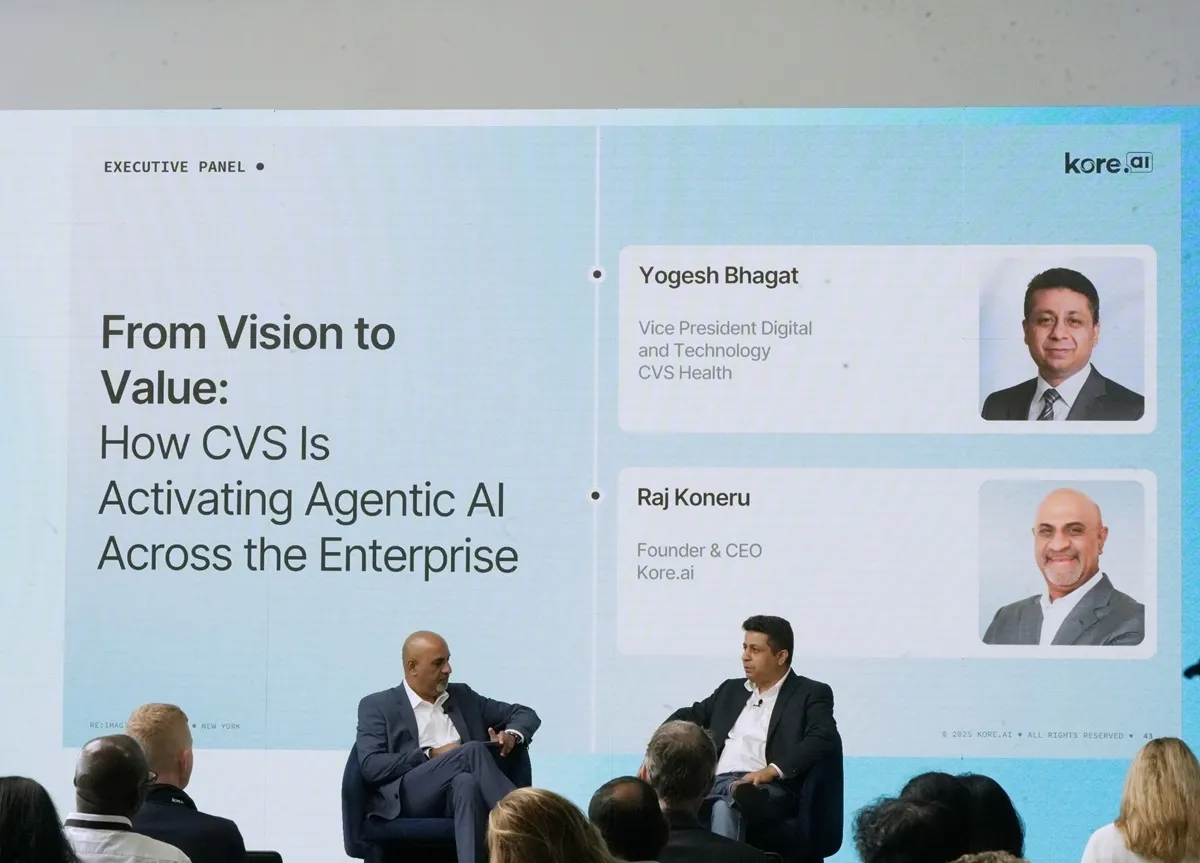

What Reimagine NYC revealed through the voices of Raj Koneru (CEO and Founder, Kore.ai), Shailesh Gavankar (Head of AI Strategy, Morgan Stanley), Yogesh Bhagat (VP Digital & Technology, CVS Health), Cathal McCarthy (Chief Strategy Officer, Kore.ai), and Cobus Greyling (Chief AI Evangelist, Kore.ai) about building for the pace of AI

“Three months is an eternity in AI.”

At Reimagine NYC in September, this line did not land like a dramatic claim. It felt more like a quiet acknowledgment of something everyone in the room was already living. And it’s true: in AI, what you build in March might already feel outdated by June, not because the work is wrong, but because the landscape keeps shifting around it. Models evolve, tools merge, and the assumptions you confidently made a few months earlier begin to loosen.

Here, the pace itself is not the problem. It is an external force no enterprise can slow down or control. The real tension comes from the way we still plan. We operate in quarters and years, while AI shifts in weeks. That mismatch is where most organisations struggle. Readiness now has less to do with anticipating the next wave and more to do with staying steady while everything around you keeps changing.

What stood out at Reimagine NYC was how clearly this shift came through. No one was chasing the next big model. The conversations were about the foundation underneath it. Leaders are starting to see that staying ahead isn’t about predicting what AI will do next, but about building systems that can absorb whatever comes next. That realisation is what shaped the five pillars of this blueprint.

Pillar 1: Architecture that can handle unpredictability

At Reimagine NYC, the Morgan Stanley conversation led by Cathal McCarthy, CSO at Kore.ai, surfaced one of the most practical ideas of the day. Shailesh Gavankar, Head of AI Strategy, explained how their teams use a Lego Blocks approach. Instead of one large, fixed system, they created a small set of five to ten pre-approved blocks that represent safe, reusable tasks. Teams can combine these blocks to build their own workflows and share them across the organisation.

Shailesh explained the mindset behind it: “Give people enough room to build, but not enough room to break anything.” That discipline turns architecture into a source of stability rather than fragility. When every piece is replaceable, introducing a new model or adjusting a workflow doesn’t trigger a rebuild. The foundation stays steady even as individual components evolve.

This is what makes adaptability practical. The system is structured to absorb movement, not crack under it.
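The composition idea can be illustrated with a minimal sketch. It is not Morgan Stanley's implementation: the registry, the block names (`normalize`, `redact_digits`, `uppercase`) and the `build_workflow` helper are all hypothetical, chosen only to show how a small approved set lets teams combine blocks freely while anything outside that set is refused.

```python
from typing import Callable, Dict, List

# A block is any safe, reusable task; here, a function from text to text.
Block = Callable[[str], str]

# Hypothetical registry of pre-approved blocks (names are illustrative only).
APPROVED_BLOCKS: Dict[str, Block] = {
    "normalize": lambda text: " ".join(text.split()),
    "redact_digits": lambda text: "".join("#" if ch.isdigit() else ch for ch in text),
    "uppercase": lambda text: text.upper(),
}


def build_workflow(block_names: List[str]) -> Block:
    """Compose approved blocks in order; refuse anything not in the registry."""
    unknown = [name for name in block_names if name not in APPROVED_BLOCKS]
    if unknown:
        raise ValueError(f"Unapproved blocks: {unknown}")
    steps = [APPROVED_BLOCKS[name] for name in block_names]

    def run(text: str) -> str:
        for step in steps:
            text = step(text)
        return text

    return run


workflow = build_workflow(["normalize", "redact_digits"])
result = workflow("  account   12345  ")
```

Because each block sits behind a name in the registry, swapping its implementation (for example, pointing it at a newer model) would not require rebuilding the workflows that use it.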

Read more: Explore agentic architecture

Pillar 2: The reality check of the autonomous era

Another theme that stood out at Reimagine NYC was how loosely the industry uses the word “autonomy.” It is spoken about as if enterprises are on the verge of letting machines run entire functions, but when you look closer, no one is actually asking for that. What people want is speed and intelligence, not systems that run off on their own. It’s easy to get carried away by the promise of autonomy, but that excitement often outruns the capability.

During the discussion, Cobus Greyling, Chief AI Evangelist at Kore.ai, cut through the noise with a simple point: even CEOs don’t operate with full autonomy. Decisions still move through context, checks, and approvals. Expecting AI to function without boundaries when humans themselves don’t is unrealistic. And this is where most projects slip. The “autonomous agent” narrative expands faster than teams can define what an agent should do, and that assumption window is exactly where months of work disappear.

The picture becomes clearer through the operator's lens. Shailesh Gavankar, who leads AI Strategy at Morgan Stanley, pointed out that most enterprise workflows don’t need full autonomy at all. They benefit from assisted decision-making: a loop between a user and an assistant, not a handoff to something running independently. Autonomy in the enterprise is not about removing people. It is about giving AI enough room to act while staying inside the boundaries of policy, data rules, and accountability. That is the version of autonomy that actually works today.
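One way to picture bounded autonomy is a simple approval loop. The sketch below is illustrative only: the `ProposedAction` type, the `AUTO_APPROVE_LIMIT` threshold and the `ask_human` callback are assumptions made for the example, not anything described by the speakers. The assistant acts on its own inside a policy boundary and hands the decision back to a person outside it.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    name: str
    amount: float


# Hypothetical policy boundary: at or below this amount the assistant may act alone.
AUTO_APPROVE_LIMIT = 100.0


def decide(action: ProposedAction, ask_human: Callable[[ProposedAction], bool]) -> str:
    """Execute within the boundary; otherwise defer to a human reviewer."""
    if action.amount <= AUTO_APPROVE_LIMIT:
        return "executed"
    return "executed" if ask_human(action) else "rejected"


# The lambdas stand in for a real reviewer's answer.
small = decide(ProposedAction("refund", 40.0), ask_human=lambda a: False)
large = decide(ProposedAction("refund", 900.0), ask_human=lambda a: False)
approved = decide(ProposedAction("refund", 900.0), ask_human=lambda a: True)
```

The human is only consulted when the action crosses the boundary, which keeps routine work fast while leaving accountability for larger decisions with a person.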

Pillar 3: Governance as an operating system

One of the more honest observations at Reimagine NYC was how quickly traditional governance breaks once agents enter the picture. The old approach of reviews, committees, and documentation cycles works when systems are predictable. It collapses the moment you introduce agents that can think, reason and act. You cannot govern that world with spreadsheets and approval queues.

This came through strongly when Cobus Greyling, Chief AI Evangelist at Kore.ai, pointed out that enterprises cannot manage agent behaviour through human oversight alone. As he put it, “You cannot govern this world with committees. The system itself has to enforce the rules.”

The conversations made it clear that governance now has to sit inside the platform, not around it. Identity, permissions, boundaries, and audit trails need to be native behaviours, not manual add-ons. Because once you have dozens of agents operating at the same time, the only governance that holds is the kind the system enforces automatically.

That is the shift leaders are starting to recognise. Governance is no longer a checkpoint; it is an architectural layer. When the rules live inside the platform, teams can move faster without compromising safety, and leaders can trust the system because compliance is happening in the background. This is what governance for the agent era looks like: simple for users, strong underneath, and able to keep up with intelligence that evolves week after week.
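As a rough sketch of what "rules living inside the platform" could mean, the example below checks permissions and writes an audit entry on every action, so neither step depends on a manual review. The `Platform` class, the agent identifiers and the action names are hypothetical and do not represent any particular product's API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class Platform:
    # Maps each agent identity to the set of actions it is allowed to perform.
    permissions: Dict[str, Set[str]]
    # Every attempt is recorded as (agent_id, action, outcome).
    audit_log: List[Tuple[str, str, str]] = field(default_factory=list)

    def perform(self, agent_id: str, action: str) -> bool:
        """Allow the action only if the agent holds the permission; always log."""
        allowed = action in self.permissions.get(agent_id, set())
        self.audit_log.append((agent_id, action, "allowed" if allowed else "denied"))
        return allowed


platform = Platform(permissions={"billing-agent": {"read_invoice"}})
ok = platform.perform("billing-agent", "read_invoice")
blocked = platform.perform("billing-agent", "delete_invoice")
unknown = platform.perform("unknown-agent", "read_invoice")
```

Denied attempts are logged alongside allowed ones, and an agent with no registered identity is refused by default, so the audit trail is produced as a side effect of normal operation.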

Read more: Explore AI Observability

Pillar 4: Scaling with sanity

Scaling became one of the most practical conversations at Reimagine NYC. The discussion focused on what actually breaks when AI moves from a controlled pilot to a live enterprise environment. It brought together both strategic and operational viewpoints, which helped surface the gaps that do not appear on a slide.

Raj Koneru, CEO and Founder of Kore.ai, spoke about scale as a capability that must be designed into the system from day one, while Yogesh Bhagat, VP Digital & Technology, CVS Health, added the reality of what happens when AI starts interacting with real workflows across a large organisation.

Yogesh put it simply. “Everything looks simple on paper, but scaling it inside an enterprise is a different story.” Pilots run with clean inputs and narrow conditions. Production exposes where identity begins to strain, where access becomes restrictive and where context becomes difficult to deliver at the right moment.

Raj explained why these cracks appear. Agents do not behave like traditional monolithic systems such as SAP or Salesforce. They move a process forward in small, context-aware steps. Scaling effectively means fixing identity, access and context first. Once those fundamentals hold, intelligence can expand without becoming unstable.

Together, they made the point clear. Scaling is not about adding more agents. It is about preparing the environment so humans and agents can work together without friction. Once that foundation is stable, scale becomes far more predictable.

Read more: Learn how enterprises scale AI

Pillar 5: Absorptive capacity as the new advantage

Absorptive capacity became a quiet but recurring theme throughout the discussions. As leaders compared notes on how fast the landscape was moving, the challenge became obvious. Organisations often take months to align on definitions or roles, yet the environment continues to evolve in the background. It came through clearly when Shailesh Gavankar (Morgan Stanley) mentioned how long it took just to settle on what an agent is. By the time alignment happens, the ecosystem is already in a new phase.

The need for stronger foundations surfaced again when Cobus Greyling, Chief AI Evangelist at Kore.ai, spoke about the limits of incremental fixes. His point was simple. A fragmented approach cannot absorb constant change. A platform has to take the load so that governance, identity and boundaries adjust automatically instead of through manual reviews.

From an operational standpoint, Yogesh Bhagat (CVS Health) emphasised where these pressures appear first. Identity frameworks built for humans strain the moment agents multiply, and context delivery becomes inconsistent once AI runs inside real workflows. These are not edge conditions. They are the everyday realities of enterprise-scale AI.

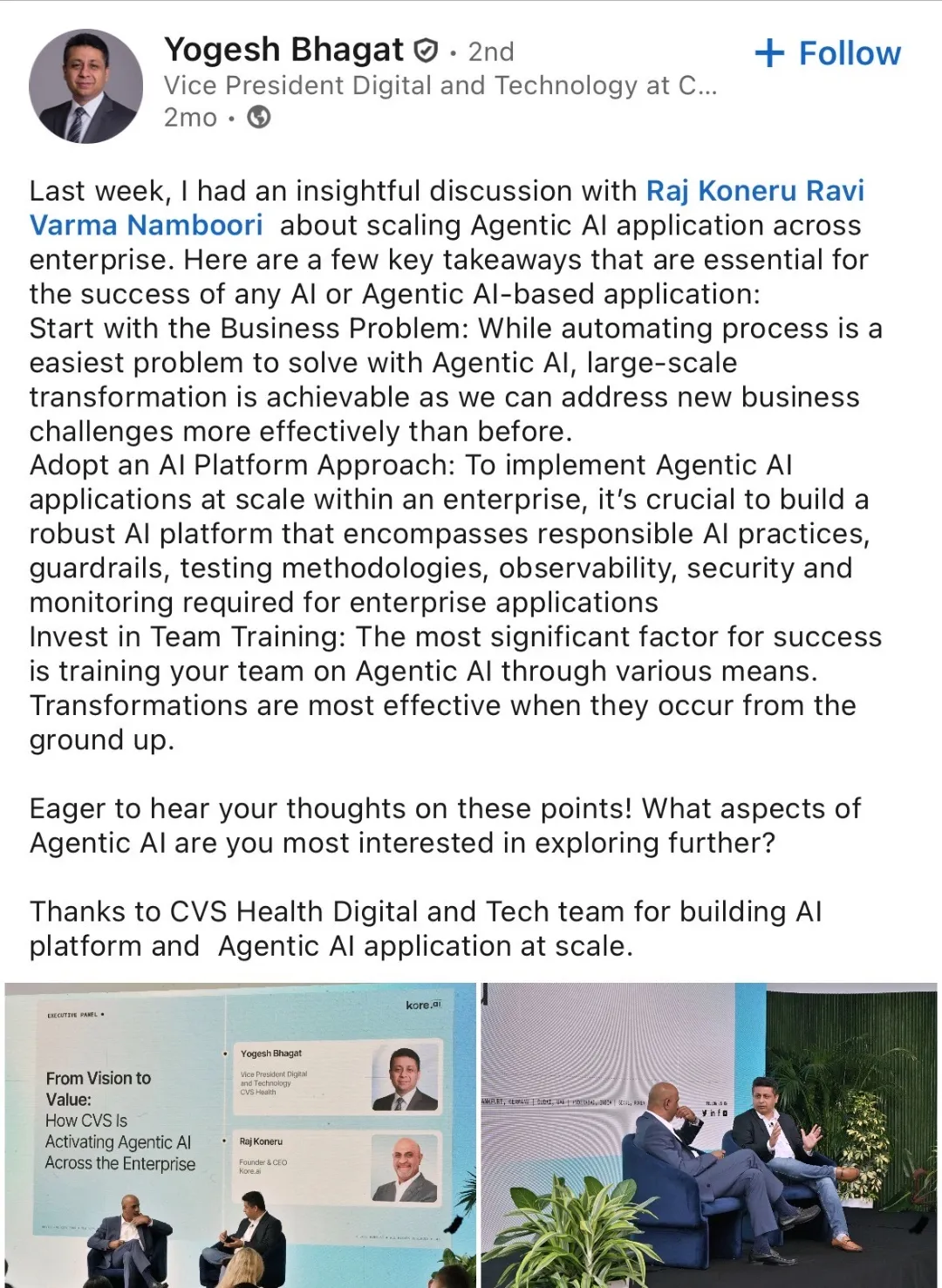

In his recent LinkedIn post, Yogesh shared how CVS is handling this work, and much of it applies to any large organisation. They start with a business problem that genuinely needs attention. They rely on an AI platform with guardrails, testing, observability and security already built in. And they invest early in training so teams know how to work with agents and can move the work forward with confidence.

Across these viewpoints, the conclusion was the same. The organisations that will stay steady are the ones whose systems can take in new models, new behaviours and new workflows without disruption. Absorptive capacity is no longer a technical preference. It is becoming the differentiator for the next phase of enterprise AI.

Takeaways from Reimagine NYC

What stood out at Reimagine NYC was the honesty in the room. No one claimed to have the pace of AI under control. People spoke from real projects, real constraints and real lessons learned. The shared understanding was straightforward. AI will keep moving quickly, and the only real anchor is the foundation an organisation builds. Readiness is no longer about predicting the next shift. It is about whether the system stays steady while everything around it keeps changing.

A practical next step is to look at your own environment through that same lens. Can it take on new models and behaviours without slowing the organisation down? That question helped many leaders identify where they were strong and where they needed more stability. If you are exploring these questions inside your own organisation, we are always open to sharing what we have learned and helping you think through the path forward.
