Introduction

As autonomous AI agents take on mission-critical workflows across the enterprise, organizations urgently need visibility into how these systems reason, make decisions, and interact with data and tools. Most agents still operate as opaque black boxes, creating hidden risks across security, compliance, performance, and governance. This blog outlines why AI observability is now essential for enterprise AI, how it enables transparency, traceability, and Responsible AI, and how Kore.ai provides the full-stack observability needed to monitor, govern, and scale AI agents with confidence.

AI agents are no longer on the horizon; they are already being integrated into the enterprise operating stack. They are handling IT tickets, processing invoices, executing transactions, and supporting employees and customers across every function. By 2027, Gartner predicts that 40 percent of enterprise workloads will be managed by autonomous AI agents. These systems are not single models running in isolation; they are orchestrated networks of large language models, APIs, vector databases, reasoning loops, and external tools. As they reason and act across distributed infrastructure, they generate millions of intermediate decisions that determine accuracy, security, and compliance. Yet in most enterprises, those decisions remain invisible.

The absence of agent monitoring is now one of the biggest technical and governance risks facing enterprise AI. When reasoning paths are not logged and correlated, organizations lose the ability to explain outcomes or detect anomalies before they scale. A subtle prompt change can trigger a different decision tree. A token-level hallucination can propagate through a workflow and surface as a compliance breach. Bias can enter through unvetted data retrieval or model drift. Tool orchestration failures can lead to financial or operational errors. Security gaps appear when agents invoke APIs or access sensitive systems without traceable controls. Without AI observability, there is no way to audit what happened, why it happened, or how to prevent it from happening again.

AI observability brings structure to this complexity. It captures reasoning traces, model activations, tool calls, data access events, latency metrics, and output evaluations in real time. These signals are correlated into execution graphs that show exactly how an agent perceives context, plans actions, and generates results. Semantic analysis layers detect drift, hallucinations, or guardrail violations, while governance layers tag every trace with policy, user, and model metadata for auditability. The result is a transparent system of record for autonomous behavior, one that allows enterprises to monitor cognition with the same rigor they apply to code, data, and infrastructure.

In this blog, we explore how observability transforms AI agents from opaque automation to explainable intelligence, and how Kore.ai is helping enterprises scale AI with confidence, accountability, and control.

What is AI observability?

AI observability is the discipline of making intelligent systems transparent, measurable, and controllable. It gives enterprises the ability to see inside the reasoning and decision-making processes of AI agents, revealing not just what actions they perform but how and why they perform them. Traditional observability focuses on monitoring infrastructure such as servers, APIs, and latency. AI observability extends this visibility to cognition itself, capturing how agents interpret context, plan actions, invoke tools, and produce outcomes across dynamic enterprise environments.

At a technical level, AI observability instruments every layer of an agent’s lifecycle. It collects signals such as prompts, reasoning traces, model activations, retrieval queries, API calls, and generated outputs. These events are correlated into structured reasoning graphs that illustrate exactly how the agent perceived context, selected tools, executed actions, and validated results. Modern observability systems apply semantic and statistical analysis to these traces to detect issues such as drift, bias, hallucinations, and tool misuse. They also integrate performance metrics like latency, throughput, and error rates, creating a unified view of both cognitive and operational health.
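To make the idea of correlating events into a reasoning graph concrete, here is a minimal Python sketch. The `TraceEvent` schema, its field names, and the `build_execution_graph` helper are illustrative assumptions, not the data model of any particular platform.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TraceEvent:
    """One recorded step in an agent run (hypothetical schema)."""
    event_id: str
    trace_id: str             # groups all events that belong to one request
    kind: str                 # e.g. "prompt", "retrieval", "tool_call", "output"
    parent_id: Optional[str]  # the step that led to this one, if any
    attributes: dict = field(default_factory=dict)


def build_execution_graph(events: list[TraceEvent]) -> dict[str, list[str]]:
    """Map each event_id to the ids of the events it directly triggered."""
    children: dict[str, list[str]] = defaultdict(list)
    for event in events:
        if event.parent_id is not None:
            children[event.parent_id].append(event.event_id)
    return dict(children)


# A made-up trace for a single IT helpdesk request.
events = [
    TraceEvent("e1", "t1", "prompt", None, {"text": "Reset my VPN password"}),
    TraceEvent("e2", "t1", "retrieval", "e1", {"query": "vpn password policy"}),
    TraceEvent("e3", "t1", "tool_call", "e1", {"tool": "reset_password"}),
    TraceEvent("e4", "t1", "output", "e3", {"text": "Password reset link sent."}),
]
graph = build_execution_graph(events)
```

Linking each event to its parent is what lets a flat event stream be replayed as a graph: the prompt leads to a retrieval and a tool call, and the tool call leads to the final output.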

Advanced AI observability frameworks go further by monitoring interactions across multiple agents and orchestration layers. They track how agents collaborate, route tasks, and exchange data to ensure coordination remains consistent and policy compliant. This continuous analysis surfaces anomalies before they affect business operations. It enables real-time evaluation of behavior, ensuring that each decision aligns with enterprise policy, security rules, and ethical standards.

Strategically, AI observability converts autonomous systems from black boxes into governed and auditable frameworks. It allows enterprises to validate that AI agents handle data responsibly, follow compliance requirements, and operate within approved workflows. Each trace can be enriched with metadata for model versioning, policy tagging, and access control, producing a full cognitive audit trail. This visibility strengthens enterprise trust, providing clear evidence of how decisions are made and ensuring that automation operates under complete governance.

In its most complete form, AI observability is both a technical framework and a leadership discipline. It integrates telemetry, analytics, and compliance into a single foundation that connects autonomy with accountability. For enterprises moving from experimentation to large-scale deployment, it ensures that intelligent systems remain explainable, reliable, and secure, forming the cornerstone of responsible AI at scale.

Why AI observability matters for enterprises

Enterprises are entering an era where AI is no longer a supportive layer but a decision-making core. AI agents now interact with data, systems, and people in ways that impact security, compliance, and customer trust. In this environment, AI observability is not just about tracking performance; it is about sustaining control over intelligence that learns, adapts, and acts autonomously.

1. Growing enterprise risks from poor AI visibility

Unlike deterministic applications, AI agents operate through probabilistic reasoning loops that evolve with data and context. A single interaction may involve multiple subsystems: a large language model for reasoning, a retrieval engine for context enrichment, a tool or API layer for action, and a feedback module for validation. Each layer generates telemetry, but without correlation, these signals remain fragmented. The absence of observability turns these interconnected systems into black boxes: complex, powerful, and opaque.

When enterprises cannot trace how an agent arrived at a decision, root cause analysis becomes guesswork. A degraded response could stem from an outdated prompt, an API timeout, a dataset shift, or a tool misfire. Without observability, engineering teams can’t isolate the failure; compliance teams can’t prove accountability; and security teams can’t verify data boundaries. The result is operational uncertainty at scale.

2. Hidden security, bias, and hallucination issues in unmonitored AI

Every AI agent is only as secure as the systems it touches. Without observability at the reasoning and execution layers, unauthorized access, prompt injections, or tool misuse may go unnoticed. For example, an agent with access to enterprise data lakes might surface sensitive records in its output because guardrails weren’t triggered. Similarly, hallucinations, fabricated outputs that sound confident but are false, can lead to reputational or legal exposure.

Bias compounds the challenge. Agents fine-tuned on unbalanced data or overfit to specific user patterns can generate outcomes that deviate from policy or fairness standards. AI observability enables early detection of these issues by continuously monitoring model activations, retrieval context, and response variance. It provides the evidence and alerts needed to remediate before harm reaches end users or regulators.

3. Performance decline driven by AI drift over time

AI agents rarely fail catastrophically at first; more often, they drift. Over time, underlying models are updated, data distributions change, and workflows evolve. This gradual drift erodes quality and reliability long before a major incident occurs. Observability pipelines that capture latency, throughput, token probabilities, and reasoning traces allow engineers to quantify and correct drift proactively.

Advanced observability systems use semantic drift detection, comparing real-world agent outputs against baseline responses using similarity scoring and evaluator models. When performance drops below a defined threshold, teams can automatically retrain or reconfigure models. This continuous evaluation ensures that agents remain aligned with enterprise standards for accuracy, tone, and compliance.
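The threshold check described above can be sketched in a few lines of Python. This is a simplified illustration: it uses bag-of-words cosine similarity from the standard library as a stand-in for the embedding-based scoring or evaluator models a production system would likely use. The function names and the 0.7 threshold are assumptions chosen for the example.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for a semantic embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def drift_alert(baseline: str, current: str, threshold: float = 0.7) -> bool:
    """Return True when the current output is too dissimilar to the baseline."""
    score = cosine_similarity(bag_of_words(baseline), bag_of_words(current))
    return score < threshold


baseline_answer = "Your refund was approved"
stable = drift_alert(baseline_answer, "Your refund was approved")
drifted = drift_alert(baseline_answer, "Please contact the billing department")
```

In practice the baseline would come from a curated evaluation set, and an alert would open a review or trigger a retraining job rather than just return a boolean.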

4. Decision traceability as the foundation of AI governance and compliance

Enterprises operate under increasing regulatory scrutiny. Regulations such as GDPR, HIPAA, and the EU AI Act require transparency into how AI systems process and use data. AI observability provides the audit trail to meet these standards. Every reasoning trace, model invocation, and data access event can be logged and tagged with compliance metadata. When auditors ask why an AI agent made a decision, observability enables organizations to replay the entire reasoning path.

At the same time, observability connects technical governance to strategic oversight. CIOs and compliance officers gain visibility into agent performance metrics, error rates, and decision trends through real-time dashboards. This fusion of technical telemetry and business intelligence turns observability into an enterprise command center for AI health, policy alignment, and trust.

5. Continuous optimization enabled by full-stack AI observability

The most advanced enterprises treat AI observability as a continuous learning loop. Insights from reasoning traces inform model fine-tuning, prompt optimization, and policy refinement. Each interaction becomes a data point that strengthens future performance. In this way, observability doesn’t just protect the enterprise; it accelerates innovation responsibly.

AI observability, therefore, is more than monitoring. It is the technical and governance architecture that ensures AI agents remain secure, reliable, compliant, and aligned with enterprise intent. In a world where AI decisions carry real business consequences, observability is what transforms intelligent systems from probabilistic experiments into accountable digital assets.

The core pillars of AI observability

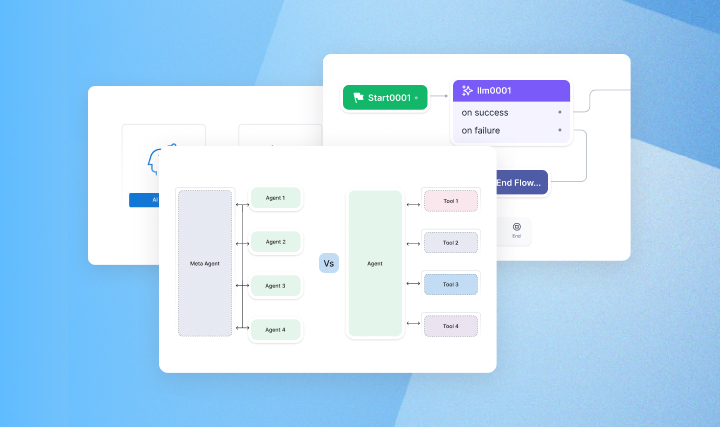

As enterprises operationalize large-scale AI agents and multi-model architectures, observability becomes the foundational layer for controlling, validating, and optimizing system behavior. AI stacks are no longer single-model deployments; they are complex, distributed reasoning systems involving LLMs, retrieval layers, tools, APIs, and orchestration engines. To govern these environments effectively, AI observability must deliver deep visibility across five core pillars: Cognition, Traceability, Performance, Security, and Governance. Together, these pillars provide the technical scaffolding required to make autonomous systems transparent, predictable, and fully accountable at enterprise scale.

1. Cognition/Reasoning

Cognition exposes the internal decision-making process of AI systems, providing insight into how an agent interprets context, constructs reasoning steps, and converges on an output. AI agents generate decisions through multi-step chains that blend prompt manipulation, latent-space inference, retrieval augmentation, and iterative planning. Without instrumentation at this level, enterprises cannot validate logic, diagnose anomalies, or ensure alignment with policies and expected behavior.

Cognition observability typically captures:

- Intermediate reasoning traces across multi-step decision paths

- Token-level probabilities, activation patterns, and semantic transitions

- Prompt evolution and contextual augmentation during agent thought loops

- The influence of retrieval results on reasoning quality

- Model-to-model and agent-to-agent negotiation behavior

This pillar transforms opaque AI reasoning into a measurable, inspectable process, essential for debugging, bias detection, risk evaluation, and high-risk workflow validation.

2. Traceability

Traceability reconstructs the full execution lineage of a decision from initial input to final action. In enterprise deployments, AI agents routinely interact with workflow engines, databases, APIs, microservices, and other agents. Without traceability, these distributed interactions form a black box, making it impossible to perform root cause analysis, compliance audits, or behavioral forensics.

Traceability observability highlights:

- The complete sequence of tool and API calls made by an agent

- Parameters, payloads, timings, and downstream dependencies

- How reasoning steps map to system actions within the execution graph

- Cross-agent communication and orchestration flow decisions

- Discrepancies between intended workflows and real execution paths

End-to-end traceability ensures that every autonomous action is explainable, reconstructable, and fully attributable, critical for regulated industries, operational forensics, and enterprise-grade AI reliability.
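One of the checks listed above, spotting discrepancies between the intended workflow and the real execution path, can be illustrated with a short Python sketch. The step names and the `find_unapproved_steps` helper are hypothetical, and the approved workflow is modeled simply as a set of allowed step-to-step transitions.

```python
def find_unapproved_steps(
    approved_transitions: set[tuple[str, str]],
    executed_path: list[str],
) -> list[tuple[str, str]]:
    """Return the observed step-to-step transitions that are not approved."""
    observed = zip(executed_path, executed_path[1:])
    return [step for step in observed if step not in approved_transitions]


# Hypothetical approved workflow for an invoice-processing agent.
APPROVED = {
    ("lookup_invoice", "validate_amount"),
    ("validate_amount", "request_approval"),
    ("request_approval", "issue_payment"),
}

# The agent skipped the approval step in this recorded run.
executed = ["lookup_invoice", "validate_amount", "issue_payment"]
violations = find_unapproved_steps(APPROVED, executed)
```

Here the check flags the jump from `validate_amount` straight to `issue_payment`, which is the kind of divergence an auditor would want surfaced with the full trace attached.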

3. Performance

Performance provides a correlated, system-wide view of how AI behaves under real-world load. In production, failures rarely stem from one component; they emerge from interactions among models, retrieval engines, tool endpoints, and orchestration logic. Performance observability quantifies these interactions to ensure throughput, reliability, and latency remain stable as usage scales.

Performance observability includes:

- Inference latency distributions, throughput patterns, and entropy signatures

- Retrieval relevance drift, vector search timing, and similarity variance

- Tool/API responsiveness under dynamic concurrency

- Workflow-level timing across multi-agent or multi-step pipelines

- Correlations between model complexity, token usage, and system reliability

This pillar enables engineering teams to identify bottlenecks early, optimize resource allocation, enforce SLAs, and maintain consistent behavioral integrity across massive operational loads.
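As a rough illustration of why latency distributions matter more than averages, the following Python sketch computes nearest-rank percentiles and checks them against an SLA. The sample values, the `latency_report` helper, and the 1000 ms p95 target are assumptions made up for the example.

```python
import math


def percentile(samples: list[float], p: int) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in the range 1..100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


def latency_report(samples_ms: list[float], sla_p95_ms: float) -> dict:
    """Summarize a latency distribution and flag a p95 SLA breach."""
    p95 = percentile(samples_ms, 95)
    return {
        "p50": percentile(samples_ms, 50),
        "p95": p95,
        "p99": percentile(samples_ms, 99),
        "sla_breached": p95 > sla_p95_ms,
    }


# Made-up end-to-end latencies (ms) for ten agent requests.
latencies_ms = [120, 135, 150, 180, 210, 240, 300, 420, 900, 2500]
report = latency_report(latencies_ms, sla_p95_ms=1000)
```

The median looks healthy in this sample, but the tail breaches the target, which is why per-stage percentiles tend to be more useful than a single average when enforcing SLAs.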

4. Security

Security observability protects the cognitive and operational surfaces where AI systems are most vulnerable. Autonomous agents expand the enterprise attack surface; they access data, trigger workflows, chain tools, and adapt behavior based on dynamic context. Identifying abnormal or malicious patterns requires monitoring both reasoning and execution.

Security observability detects:

- Prompt injection, adversarial patterns, and semantic anomalies

- Retrieval poisoning or unsafe external data influencing decisions

- Unauthorized tool or API invocation attempts

- Privilege drift or unauthorized access path creation

- Anomalous reasoning loops that indicate a compromised cognitive state

By correlating identity, policy, context, and behavior, security observability creates a real-time control plane that prevents AI agents from operating outside authorized boundaries.
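A small Python sketch shows how one of these signals, unauthorized tool invocation, might be derived from recorded tool calls. The agent identifiers, tool names, and per-agent allowlist are invented for illustration; a real deployment would pull permissions from its identity and policy systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    """A recorded tool invocation (hypothetical schema)."""
    agent_id: str
    tool: str


# Hypothetical allowlist: which tools each agent is authorized to invoke.
ALLOWED_TOOLS = {
    "it-helpdesk-agent": {"lookup_ticket", "reset_password"},
    "invoice-agent": {"lookup_invoice"},
}


def audit_tool_calls(
    calls: list[ToolCall],
    allowed: dict[str, set[str]] = ALLOWED_TOOLS,
) -> list[ToolCall]:
    """Return calls made by unknown agents or to tools outside the allowlist."""
    return [c for c in calls if c.tool not in allowed.get(c.agent_id, set())]


recorded = [
    ToolCall("it-helpdesk-agent", "reset_password"),
    ToolCall("invoice-agent", "issue_payment"),    # not on the allowlist
    ToolCall("unknown-agent", "lookup_ticket"),    # agent is not registered
]
flagged = audit_tool_calls(recorded)
```

This version only reports after the fact. The same check placed in front of the tool layer would block the call instead, which is closer to the control-plane behavior described above.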

5. Governance

Governance ensures continuous accountability across an AI system’s entire lifecycle. As models, prompts, embeddings, tools, and workflows evolve, enterprises require a versioned and explainable record of how changes impact system behavior. Lifecycle governance establishes the temporal backbone needed for compliance, stability, and responsible AI operations.

Governance observability captures:

- Version histories of agents/models, prompts, retrieval corpora, and workflows

- Behavioral diffs before and after updates or retraining

- The impact of embedding refreshes or data distribution shifts

- Configuration changes tied to drift, regressions, or anomalies

- Layered policy changes and their decision impact

Governance is the enterprise assurance layer that keeps autonomous systems predictable, reproducible, and regulatory compliant, even as they adapt and evolve.
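A behavioral diff can be as simple as replaying a fixed set of test cases against two versions and reporting what changed. The sketch below assumes outputs have already been collected into dictionaries keyed by test case name; the case names, answers, and `behavioral_diff` helper are hypothetical.

```python
def behavioral_diff(
    before: dict[str, str],
    after: dict[str, str],
) -> dict[str, tuple[str, str]]:
    """Return {case: (old_output, new_output)} for cases whose output changed."""
    shared_cases = before.keys() & after.keys()
    return {
        case: (before[case], after[case])
        for case in sorted(shared_cases)
        if before[case] != after[case]
    }


# Made-up outputs from the same regression set before and after a prompt revision.
outputs_v1 = {
    "refund_policy": "Refunds are issued within 14 days.",
    "pto_balance": "You have 12 days of PTO remaining.",
}
outputs_v2 = {
    "refund_policy": "Refunds are issued within 30 days.",
    "pto_balance": "You have 12 days of PTO remaining.",
}
changed = behavioral_diff(outputs_v1, outputs_v2)
```

Exact string comparison is the strictest possible test. For generative outputs, a similarity score or an evaluator model would usually replace the equality check so that harmless rewording is not flagged.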

Combined, Cognition, Traceability, Performance, Security, and Governance form a full-stack AI observability fabric that spans the entire AI ecosystem. This fabric allows enterprises to monitor how AI thinks, executes, scales, safeguards data, and evolves. It provides engineering teams with actionable intelligence, security teams with full-stack auditability, and business leaders with the confidence that autonomous AI systems can operate reliably and responsibly at enterprise scale.

How does AI observability make autonomous systems enterprise-ready?

AI observability is the architectural substrate that converts autonomous systems from probabilistic black boxes into governed, measurable, and operationally deterministic enterprise assets. Modern agents execute across heterogeneous runtimes, multi-model inference pipelines, retrieval-augmented memory stacks, tool orchestration layers, and distributed workflow engines. Within these environments, decisions emerge from complex interactions between models, data, and external systems. Without correlated visibility across these layers, enterprises cannot validate decision integrity, enforce guardrails, or maintain SLAs.

Observability transforms this landscape by delivering end-to-end telemetry on how agents reason, act, optimize, and evolve, enabled through mechanisms such as agent tracing, performance analytics, and event-level governance logs.

1. Transparent decision logic through deep AI reasoning visibility

AI decision pathways are formed through layered cognitive operations: semantic decomposition of the prompt, latent-space inference, context expansion from retrieval systems, iterative chain-of-thought planning, and policy-conditioned reasoning loops. These processes drive every downstream action but remain invisible in standard logging architectures. Agent tracing provides the forensic interface required to expose these internal cognitive pathways.

Through agent tracing, technical teams can:

- Reconstruct the entire inference and execution graph for any request

- Identify which LLMs, sub-models, or routing heuristics were activated

- Analyze token-level probability distributions to gauge model confidence at each reasoning step

- Attribute latency across inference, retrieval, tool invocation, and orchestration layers

- Inspect branching paths, retries, backoff strategies, and fallback policies

The output is a complete cognitive lineage, an inspectable reasoning artifact that makes AI logic explainable, debuggable, and auditable. This capability is indispensable for validating correctness, enforcing safety constraints, and diagnosing deviation in multi-step reasoning chains.

2. Full execution lineage for enterprise-grade accountability

Beyond reasoning, enterprises need complete visibility into how each cognitive step is mapped to concrete system actions. AI agents read data, call APIs, orchestrate business logic, and trigger downstream automations, all of which have compliance, security, and operational implications. Event monitoring provides this execution-level accountability by generating a tamper-resistant, timestamped ledger of every system modification or agent-driven action.

Event monitoring captures:

- Model updates, prompt revisions, embedding refreshes, and retraining cycles

- User actions (API key creation, credential changes, policy updates, privilege adjustments)

- Guardrail checks, violations, overrides, suppressions, and escalations

- Workflow or tool configuration updates

- Policy and permission shifts that influence agent behavior

By correlating these system events with cognitive and execution traces, observability produces a unified, audit-grade provenance chain. This is the foundation for meeting regulatory and audit frameworks such as GDPR, HIPAA, SOC 2, and the EU AI Act, which call for explainability, provenance, and accountability.
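One common way to make an event ledger tamper-evident is to chain entries together with cryptographic hashes, so that altering any past entry invalidates everything after it. The Python sketch below illustrates that general technique. It is not a description of how any specific platform stores events, and the entry layout and function names are assumptions.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, payload: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON form of the payload."""
    body = json.dumps(payload, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_event(ledger: list[dict], payload: dict) -> None:
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS_HASH
    ledger.append({
        "payload": payload,
        "prev_hash": prev_hash,
        "hash": _digest(prev_hash, payload),
    })


def verify(ledger: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in ledger:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


ledger: list[dict] = []
append_event(ledger, {"ts": "2025-01-01T09:00:00Z", "actor": "admin", "action": "prompt_revision"})
append_event(ledger, {"ts": "2025-01-01T09:05:00Z", "actor": "agent-7", "action": "guardrail_violation"})
intact = verify(ledger)

# Simulate someone rewriting history after the fact.
ledger[0]["payload"]["action"] = "no_change"
intact_after_tampering = verify(ledger)
```

A hash chain only detects tampering; it does not prevent it. Stronger guarantees typically come from periodically anchoring the latest hash in a separate, access-controlled system.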

3. Performance intelligence as a continuous optimization engine

AI performance is never static. In production, systems exhibit dynamic operational behavior as workload patterns evolve: input distributions shift, vector similarity scores drift, tool latency fluctuates, and reasoning pathways adapt. AI insights and analytics convert these fluctuations into a continuous performance optimization loop.

Performance analytics provide visibility into:

- Inference latency distributions, throughput variance, and entropy shifts

- Vector retrieval drift, relevance decay, and hit-rate instability

- Tool/API degradation, timeout frequency, and failure clustering

- Workflow-level success/failure signatures across multi-agent systems

- Token-to-cost mapping, resource utilization, and execution efficiency trends

These signals allow engineering teams to drive automated optimization pipelines: prompt re-balancing, model routing adjustments, tool reliability scoring, embedding refresh cycles, and even policy-driven workflow rewrites. Observability thus becomes not just a diagnostic layer but an adaptive performance regulator that stabilizes enterprise AI under evolving load conditions.

4. Early detection of silent failures in autonomous AI systems

Many AI failures do not present as explicit errors; they manifest as subtle deviations in reasoning, retrieval relevance, or execution flow. Without deep observability, these silent failures propagate unnoticed and become operational incidents, compliance breaches, or security vulnerabilities.

By correlating agent tracing, analytics, and system events, observability platforms can detect:

- Aberrant reasoning loops or unexpected chain-of-thought trajectories

- Workflow branches that diverge from the approved execution graph

- Hallucinatory outputs stemming from retrieval drift or prompt degradation

- Micro-latency spikes indicating failing tools or downstream pressure

- Unauthorized or anomalous policy-violating actions

Early detection allows enterprises to trigger automated rollbacks, enforce guardrail corrections, apply retrieval filters, or throttle agent autonomy, preventing minor issues from cascading into systemic failures.
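Silent failures such as latency spikes can often be caught with simple statistics before more elaborate models are needed. The Python sketch below flags samples that sit far above a rolling baseline. The window size, z-score threshold, and standard-deviation floor are illustrative assumptions that would need tuning against real telemetry.

```python
import statistics


def find_spikes(
    samples_ms: list[float],
    window: int = 5,
    z_threshold: float = 3.0,
    min_stdev_ms: float = 1.0,
) -> list[int]:
    """Return indexes of samples far above the mean of the preceding window."""
    spikes = []
    for i in range(window, len(samples_ms)):
        history = samples_ms[i - window:i]
        mean = statistics.fmean(history)
        # Floor the deviation so a perfectly flat history does not divide by zero.
        stdev = max(statistics.pstdev(history), min_stdev_ms)
        z_score = (samples_ms[i] - mean) / stdev
        if z_score > z_threshold:
            spikes.append(i)
    return spikes


# Made-up tool-call latencies (ms); one call is dramatically slower.
tool_latency_ms = [100, 102, 98, 101, 99, 100, 400, 101]
spike_indexes = find_spikes(tool_latency_ms)
```

A detected spike on its own is only a hint. Correlating it with the trace for that request is what reveals whether the cause was a failing tool, a retry storm, or downstream pressure.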

5. Governance-level confidence for scaling autonomous AI safely

The transformative value of AI observability emerges when correlated cognitive, operational, and governance telemetry is exposed in a unified control plane. This visibility gives leadership and engineering the real-time insight needed to expand AI responsibly and safely.

Observability empowers enterprises to:

- Deploy agents in regulated, high-risk, or mission-critical workflows

- Orchestrate multi-agent ecosystems with predictable, bounded behavior

- Maintain audit-ready transparency for every decision and action

- Enforce policy fidelity, security boundaries, and compliance controls

- Scale agent autonomy without proportionally increasing risk

This marks the shift from AI as an experimental automation layer to AI as a governed enterprise subsystem: reliable, explainable, compliant, and architecturally controllable.

AI observability: The control layer that makes responsible AI possible

Responsible AI in autonomous systems is ultimately a technical discipline, not a policy document. As agents reason, access data, and execute actions across distributed infrastructure, ethical and regulatory expectations translate into engineering requirements: measurable fairness, enforceable constraints, verifiable decision logic, and auditable system behavior. These requirements cannot be satisfied without a telemetry layer that exposes the system's behavior at runtime. This is the role of AI observability.

Observability provides the runtime instrumentation needed to evaluate responsible behavior under real operating conditions, conditions that differ significantly from controlled training or testing environments. By capturing granular signals across inference pathways, retrieval patterns, privilege boundaries, action sequences, and context-dependent outputs, observability enables technical teams to detect ethical drift, distributional bias, and unsafe behavioral divergence long before they propagate into production impact.

From a systems perspective, observability supports Responsible AI by supplying:

- Behavioral diagnostics that expose how decision functions evolve as input distributions shift.

- Constraint verification that confirms guardrails, safety checks, and policy rules executed as designed.

- Data governance telemetry that traces how sensitive information is accessed, transformed, and utilized in reasoning loops.

- Risk correlation signals that link anomalies across agents, models, and tools to emerging system-level vulnerabilities.

- Accountability artifacts that make decisions reproducible, defensible, and auditable across model versions and configuration states.

In effect, observability turns Responsible AI into an engineering control loop, a continuous, data-driven process that monitors, validates, and adjusts system behavior in real time. Without this instrumentation, responsibility becomes a static aspiration; with it, responsibility becomes a quantifiable and enforceable property of the AI system itself.
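Constraint verification, in its simplest form, means checking that every required guardrail actually ran and passed for a given request. The Python sketch below assumes trace events are plain dictionaries with `kind`, `name`, and `status` fields; those fields and the guardrail names are hypothetical.

```python
# Hypothetical set of guardrails that policy requires on every request.
REQUIRED_GUARDRAILS = {"pii_filter", "policy_check"}


def missing_guardrails(
    trace_events: list[dict],
    required: set[str] = REQUIRED_GUARDRAILS,
) -> set[str]:
    """Return required guardrails that did not run and pass in this trace."""
    passed = {
        event["name"]
        for event in trace_events
        if event["kind"] == "guardrail" and event["status"] == "passed"
    }
    return required - passed


# A made-up trace in which the policy check never executed.
trace = [
    {"kind": "prompt", "name": "user_input", "status": "ok"},
    {"kind": "guardrail", "name": "pii_filter", "status": "passed"},
    {"kind": "output", "name": "final_answer", "status": "ok"},
]
gaps = missing_guardrails(trace)
```

Running a check like this over every trace turns "guardrails are enabled" from a configuration claim into something that can be measured per request.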

How Kore.ai delivers enterprise-grade AI observability

Kore.ai embeds observability as a foundational architectural layer of its Enterprise AI Agent Platform, providing organizations with the telemetry, behavioral intelligence, and governance instrumentation required to operate autonomous systems with reliability and control. Instead of monitoring only endpoints or infrastructure, Kore.ai captures the full cognitive and operational footprint of every AI agent: how it reasons, what data it accesses, which tools it invokes, and how its environment evolves. The result is a complete operational view of agent behavior, crucial for scaling enterprise AI safely.

1. Deep reasoning visibility through agent-level tracing

At the heart of Kore.ai’s observability stack is agent-level tracing, a forensic view into the internal mechanics of AI reasoning.

The tracing engine records:

- Full inference paths and intermediate cognitive states

- Model routing decisions and retrieval strategies

- Tool/API calls, parameters, and downstream effects

- Branching logic, retries, fallbacks, and policy-conditioned constraints

- Token usage, complexity signatures, and latency breakdowns

These traces form a replayable execution graph, enabling engineers to validate decision integrity, compare reasoning paths, diagnose deviation patterns, and conduct compliance or incident investigations.

2. Performance & behavior analytics for early risk detection

Kore.ai applies advanced analytics on top of telemetry to identify risk signals long before they impact production.

The analytics engine surfaces:

- Latency distributions across inference, retrieval, and tool surfaces

- Semantic and retrieval drift trends

- Workflow failure clusters, timeouts, and error signatures

- Token-to-cost correlations and performance-efficiency trade-offs

- Behavioral variance across environments, models, or agent versions

These insights convert raw signals into actionable engineering intelligence, allowing teams to proactively address drift, degradation, and instability, critical for high-availability enterprise AI deployments.

3. Environment, configuration, and policy monitoring

Enterprise AI systems evolve continuously: models update, prompts change, guardrails shift, and workflows adapt. Kore.ai maintains lifecycle accountability by tracking every change that can influence agent behavior, including:

- Model and agent version updates

- Prompt revisions and orchestration logic adjustments

- Embedding refreshes, datastore changes, and retrieval updates

- Guardrail and policy modifications

- Tool configurations, routing rules, credentials, and permissions

This produces a temporal audit trail that connects configuration changes to behavioral outcomes, ensuring the system remains reproducible, explainable, and stable across development, staging, and production environments.

4. Security and access governance at runtime

Kore.ai extends observability into the security layer, monitoring the live attack surface of enterprise AI agents:

- Data access patterns and privilege boundaries

- Unauthorized API or tool invocation attempts

- Prompt injection signals and adversarial behavior

- Retrieval anomalies and unsafe external calls

- Guardrail enforcement events and policy violations

This enables organizations to maintain strict behavioral boundaries, ensuring AI agents operate only within approved scopes and cannot trigger unauthorized or unpredictable actions.

5. Responsible AI alignment through runtime governance

Kore.ai’s observability fabric serves as the execution backbone for Responsible AI in enterprise environments. It transforms principles, such as fairness, transparency, explainability, and accountability, into enforceable runtime controls by providing:

- Transparency through replayable reasoning and action lineage

- Accountability through configuration and event-based audit logs

- Fairness monitoring via output variance and behavioral drift analysis

- Safety enforcement via guardrail validation telemetry

- Privacy oversight via data access and usage monitoring

These capabilities align with the practices outlined in the Responsible AI Framework, turning Responsible AI into a continuous, measurable engineering discipline.

A unified observability fabric built for enterprise AI scale

By combining cognitive tracing, performance intelligence, lifecycle monitoring, security oversight, and governance metadata, Kore.ai delivers a full-stack AI observability system built specifically for enterprise AI agents. This unified approach provides engineering teams with deep operational visibility, governance teams with verifiable auditability, and business leaders with the confidence to scale autonomous systems into mission-critical workflows without increasing risk.

Kore.ai’s observability architecture ensures that Enterprise AI remains transparent, predictable, compliant, and fully under organizational control, even as autonomous systems grow in complexity and capability.

Conclusion: Observability is the control plane for the enterprise AI era

Enterprise AI is crossing a threshold. Autonomous agents are no longer experimental workflows; they are becoming the cognitive fabric of modern operations, making decisions that influence finance, customer experience, IT, HR, supply chain, and every mission-critical function. But as their intelligence grows, so does the complexity and risk hidden within their reasoning loops, retrieval patterns, and execution flows.

This is why AI observability is no longer optional. It is the structural layer that makes autonomy governable, explainable, and reliable at scale. By illuminating how agents think, act, and evolve, observability transforms opaque AI behavior into measurable system intelligence. It gives engineering teams the ability to diagnose failures and optimize performance, gives governance leaders the guardrails and traceability required for compliance, and gives business executives the confidence that autonomous systems can operate safely across enterprise workflows.

As global regulations tighten and AI becomes deeply embedded in operational infrastructure, observability becomes the difference between scaling AI responsibly and exposing the enterprise to invisible risk. The organizations that succeed will be the ones that treat observability as a core architectural requirement, not a post-deployment add-on.

The future of Enterprise AI belongs to platforms and teams that build with transparency, govern with precision, and monitor with depth. In this new era of autonomous agents, AI observability is not simply a tool; it is the control plane for trust, safety, and sustained intelligence at scale.
