Chain Of Natural Language Inference (CoNLI)

Hallucinations are categorised into three subcategories: Context-Free Hallucination, Ungrounded Hallucination, and Self-Conflicting Hallucination.

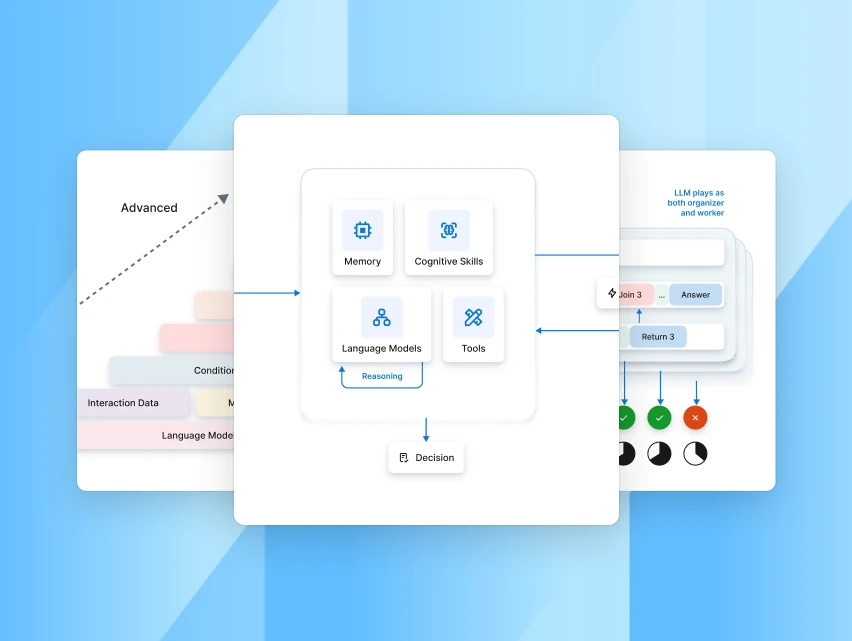