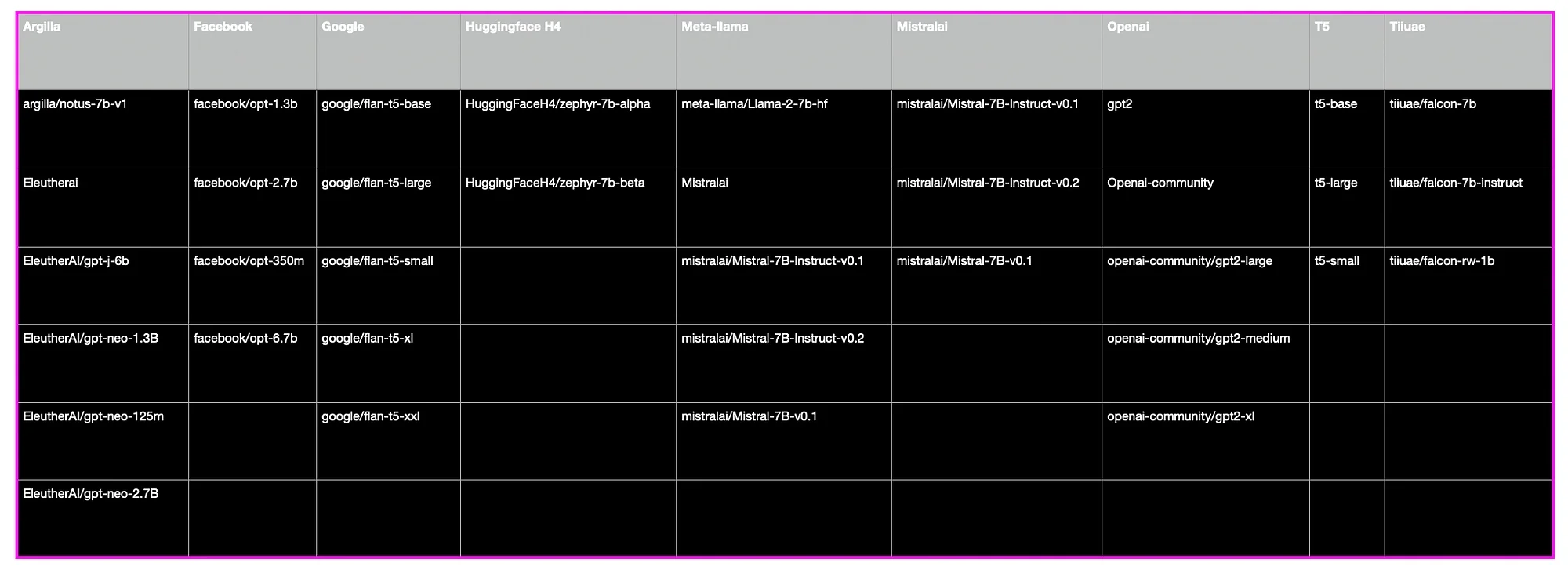

No-Code Deployment Of Open-Sourced Foundation Models

Open-sourced models from Argilla, EleutherAI, Facebook, Google, HuggingFace, Mistral AI, Meta T5, Tiiuae, and more...

Introduction

There is a whole host of open-sourced language models, both Large Language Models (LLMs) and Small Language Models (SLMs). Being able to host these models, and either expose them via an API or use them within process automation flows, is a significant enabler for building Generative AI applications.

The GALE AI productivity suite is a very good example of how models can be explored, managed, deployed and used within a no-code environment.

Advantages of open-sourced models

In principle, open-sourced models should be more accessible than commercial models. And by leveraging in-context learning (ICL), less capable models can be as effective as commercial models, if not more so. Open-source LLMs are freely available to anyone, which makes them accessible to a wide range of users, including researchers, developers, and organisations. Even so, putting them to use demands hosting the model and exposing it via a managed API.

Customisation and fine-tuning of the model are possible via GALE. Users have the freedom to customise and modify open-source LLMs according to their specific needs and preferences. This flexibility allows for the development of tailored solutions and applications that address unique use cases.
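To make the in-context learning point more concrete, here is a minimal sketch of a few-shot prompt: the task is demonstrated with a couple of worked examples inside the prompt itself, so a smaller model has something to imitate. The function name, the example reviews, and the `Review:` / `Sentiment:` layout are all illustrative assumptions, not something taken from GALE.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: instruction, worked examples, then the new input."""
    lines = [instruction.strip(), ""]
    for text, label in examples:
        # Each worked example shows the model the expected input/output pattern.
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final entry is left open so the model completes the label.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


# Hypothetical demonstration data.
examples = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
]

prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    examples,
    "Setup was quick and painless.",
)
print(prompt)
```

The resulting string can be sent to any hosted model as-is; how well a given model follows the pattern will vary and is worth testing per model.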

I would argue that part of transparency is acknowledging that model drift is a real threat to production implementations. This is the scenario where the underlying model changes over time, without any notice to the user. Together with model drift, there are also factors such as catastrophic forgetting, which has been documented in relation to OpenAI models. Having a privately hosted open-source model instance guards against these problems.

Open-source LLMs can facilitate rapid innovation by allowing users to leverage existing models, datasets, and tools as building blocks for new applications and research projects. This accelerates the pace of development and drives advancements in natural language processing technology.

Model orchestration

Using the Right Model for the Right Task: Task-Specific Models

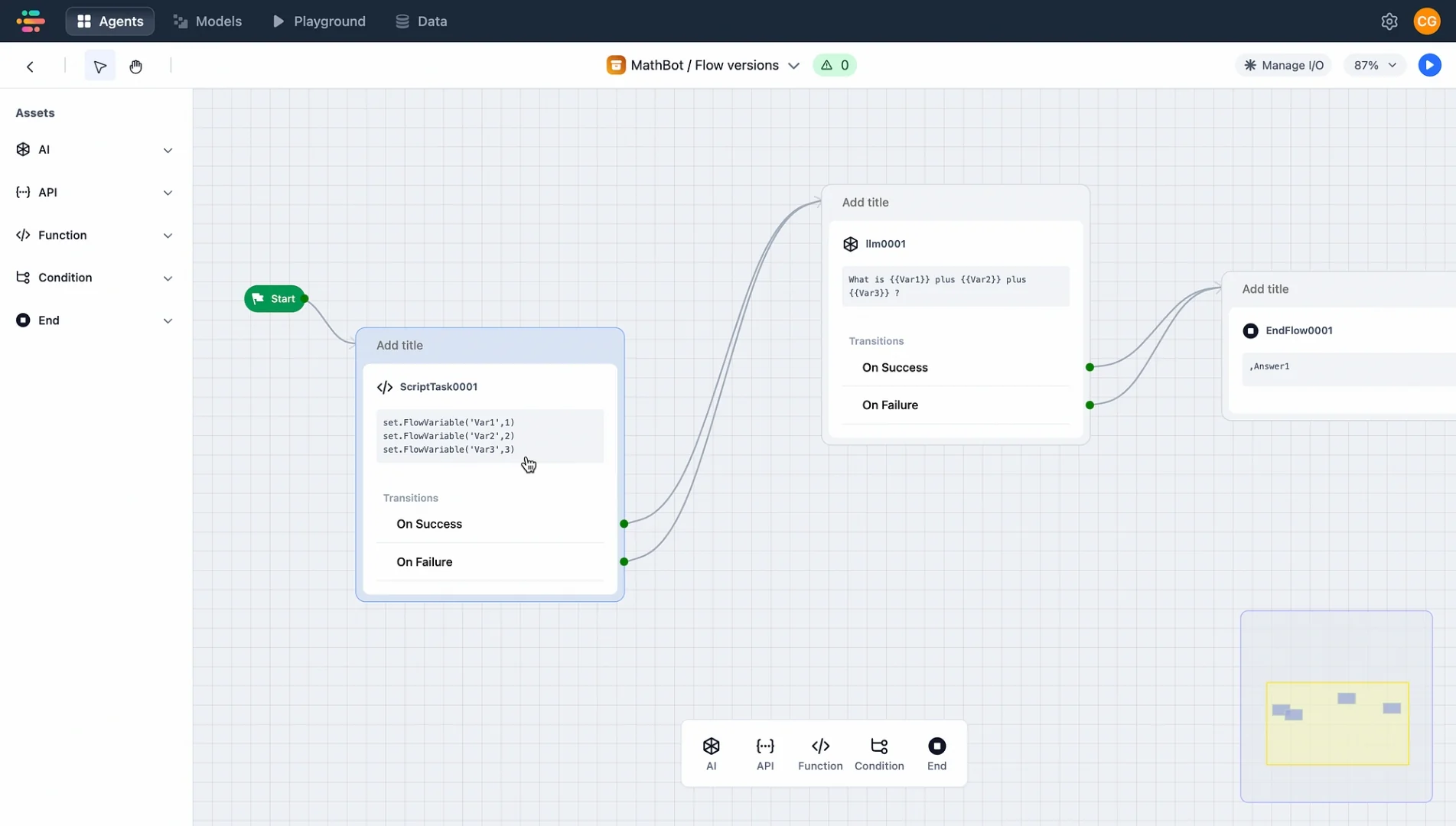

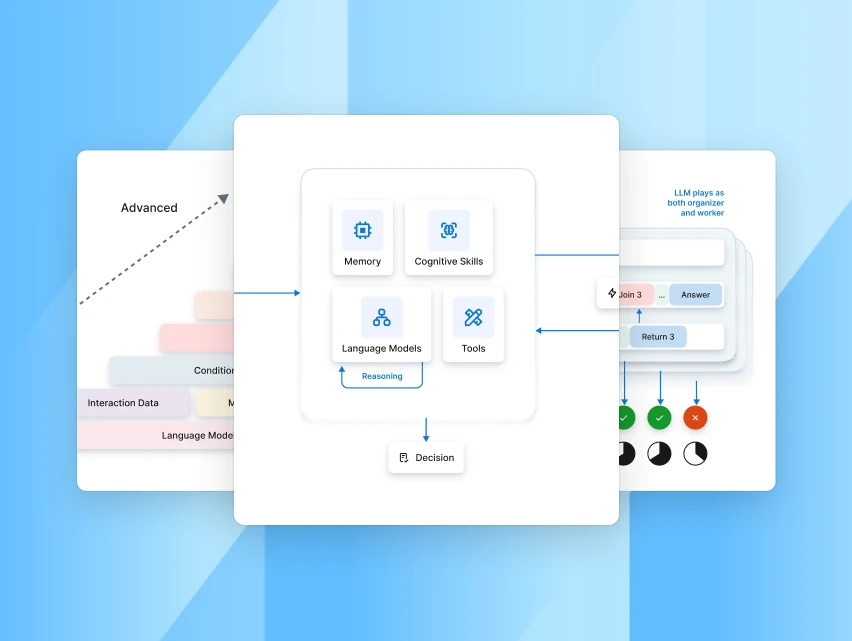

Different tasks in NLP, such as text classification, language translation, and sentiment analysis, may require specialised models optimised for their respective tasks. By selecting the appropriate model for each task, organisations can achieve better performance and accuracy. When choosing the right model for a task, factors such as model architecture, pre-training data, fine-tuning techniques, and computational resources must be considered.

Using and orchestrating multiple LLMs can improve performance by leveraging the strengths of different models at various stages of the application flow. Model orchestration methods combine predictions from multiple models to produce a more robust and accurate output, particularly in tasks where individual models may have limitations or biases.

Orchestration frameworks can dynamically select the most suitable model for each task based on factors such as task requirements, model performance, and resource availability. This adaptive approach helps the system handle a wide range of tasks and adapt to changing conditions over time. Orchestration can easily be achieved with a flow builder as seen below, where different models can be used based on different scenarios and conditions.

Dashboard screenshot featuring multiple items and data points for performance tracking.

In summary, large language model orchestration involves managing the deployment, scaling, and optimisation of LLMs, while using the right model for the right task involves selecting appropriate models based on task requirements and optimising performance through dynamic model selection and ensemble learning techniques.
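The dynamic model selection described above can be sketched in a few lines of Python. This is only an illustration of the routing idea, not how GALE's flow builder works internally: the model handlers are stubs, and apart from `t5-small` the model names (`classifier-model`, `general-llm`) are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass
class ModelRoute:
    name: str
    handler: Callable[[str], str]  # takes input text, returns model output


def make_stub(name: str) -> ModelRoute:
    # Stand-in for a real model call; it just tags the text with the model name.
    return ModelRoute(name, lambda text: f"[{name}] {text}")


# Task-specific routes; anything not listed falls back to a general model.
ROUTES: Dict[str, ModelRoute] = {
    "translation": make_stub("t5-small"),
    "classification": make_stub("classifier-model"),
}
DEFAULT_ROUTE = make_stub("general-llm")


def route(task: str, text: str) -> Tuple[str, str]:
    """Pick the model registered for the task and run it on the text."""
    chosen = ROUTES.get(task, DEFAULT_ROUTE)
    return chosen.name, chosen.handler(text)


print(route("translation", "Good morning"))
print(route("summarisation", "A long report"))
```

In a real flow the routing key could come from a condition node or a lightweight classifier, and each handler would call a deployed model endpoint instead of a stub.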

Accelerated Generative AI adoption

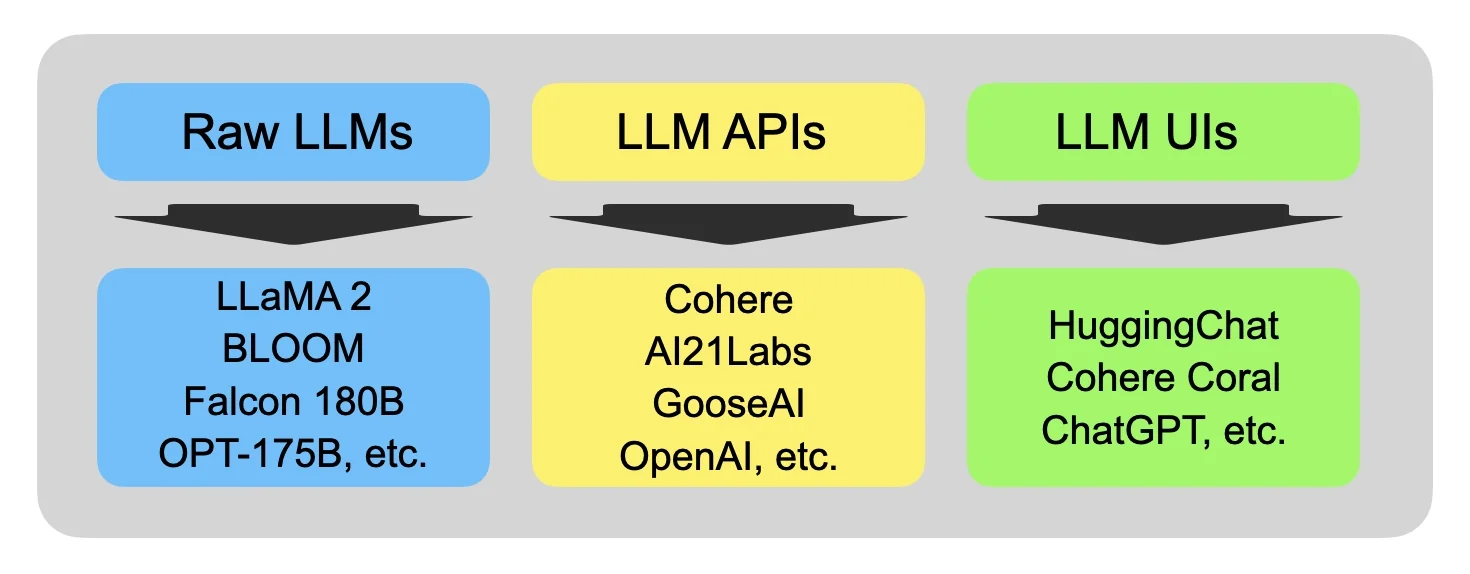

Large language models (LLMs) are accessible through the three options depicted in the accompanying image.

Various LLM-based User Interfaces, such as HuggingChat, Cohere Coral, and ChatGPT, offer conversational interfaces where the UI can learn user preferences in certain cases. Cohere Coral facilitates document and data uploads to serve as contextual references.

LLM APIs represent the most popular method for organisations and enterprises to leverage LLMs. The market offers numerous commercial offerings, as detailed in the accompanying image. While LLM APIs are the simplest means to develop Generative Apps, they present challenges including cost, data privacy, inference latency, rate limits, catastrophic forgetting, model drift, and more.

Several open-source raw models are available for free use, although implementing and operating them requires specialised knowledge. Additionally, hosting costs will increase with adoption.

Local and private hosting of SLMs which are fit for purpose solves most of these challenges.

Model access via GALE

Below is a matrix of open-sourced models which are available via the GALE productivity suite. The number of open-sourced models is sure to grow.

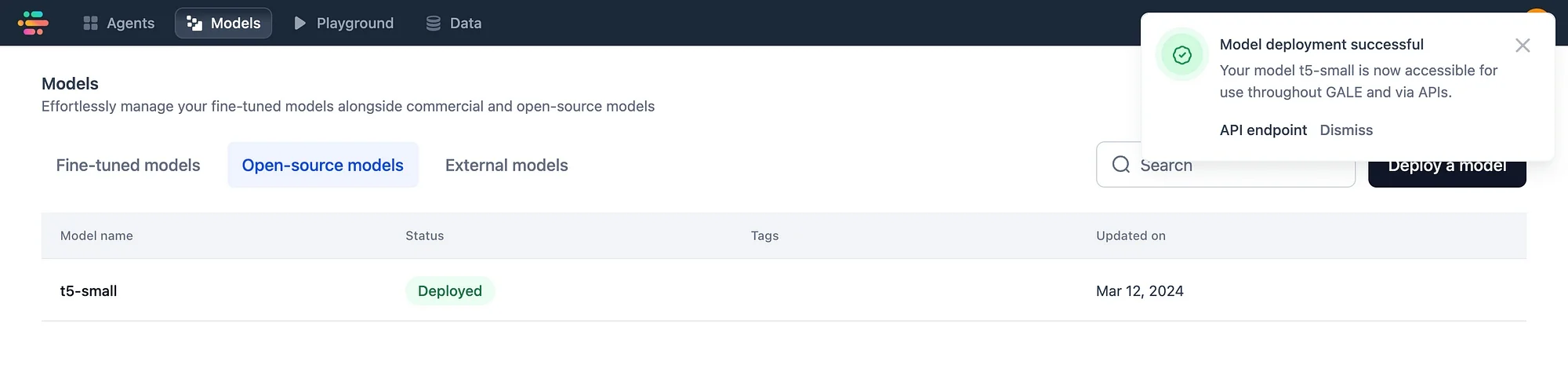

Considering the image below of GALE, there are three options available: fine-tuned models, open-source models, or adding external models. In this example, the open-source models option is selected. A list of available models within GALE is displayed, which can also be searched. In the example below I selected the t5-small model, which deployed within a few minutes. GALE notified me via email the moment the model was deployed. Models can be managed in terms of status: deployed, un-deployed, or deleted.

Once the model is deployed, as seen below, a few options are available. A model endpoint is immediately available, with different parameters that can be set. I could copy the Python code example shown below directly from the GALE user interface and paste it into a notebook.

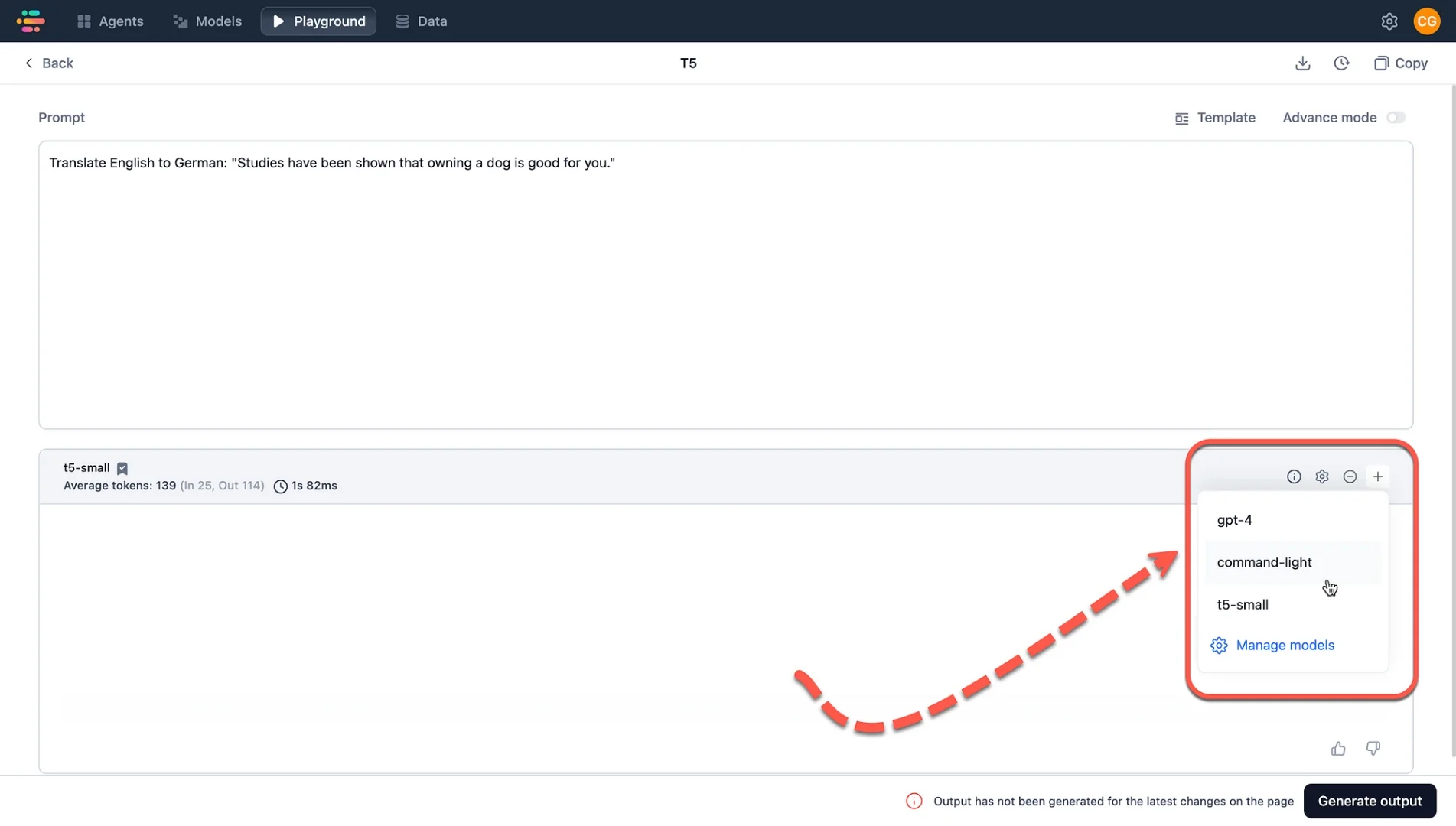
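The exact snippet that GALE generates is not reproduced here. As a rough sketch of what calling a deployed model endpoint from a notebook might look like, the example below builds an HTTP POST request with the standard library. The URL, the bearer-token header, and the payload fields (`prompt`, `max_tokens`, `temperature`) are all assumptions for illustration; the real values should be taken from the code shown in the GALE user interface.

```python
import json
import urllib.request

# Hypothetical values: replace with the endpoint and key from your own deployment.
ENDPOINT_URL = "https://example.invalid/models/t5-small/generate"
API_KEY = "YOUR_API_KEY"


def build_request(prompt, max_tokens=64, temperature=0.2):
    """Build (but do not send) a JSON POST request for a hosted model endpoint."""
    # Payload field names are assumed, not taken from the GALE API.
    payload = {
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        ENDPOINT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",
        },
        method="POST",
    )


request = build_request("translate English to German: Good morning")
print(request.get_method(), request.full_url)

# To send it against a real endpoint, uncomment:
# with urllib.request.urlopen(request, timeout=30) as response:
#     print(json.loads(response.read()))
```

Building the request separately from sending it keeps the sketch runnable without network access and makes the payload easy to inspect before any call is made.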

The deployed model is also accessible via the playground for experimentation. Multiple models can be compared in parallel, using the same input and prompt.
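The playground does this comparison without code, but the same idea can be sketched programmatically: send one prompt to several models concurrently and collect the outputs side by side. The two "models" below are stub functions with made-up names, standing in for calls to real deployed endpoints.

```python
from concurrent.futures import ThreadPoolExecutor


def compare_models(models, prompt):
    """Send the same prompt to every model concurrently; return {name: output}."""
    with ThreadPoolExecutor(max_workers=max(1, len(models))) as pool:
        # Submit one call per model so slow models do not block the others.
        futures = {name: pool.submit(fn, prompt) for name, fn in models.items()}
        return {name: future.result() for name, future in futures.items()}


# Stub models for illustration only; each would normally call a hosted endpoint.
models = {
    "model-a": lambda p: p.upper(),
    "model-b": lambda p: p[::-1],
}

results = compare_models(models, "hello")
for name, output in results.items():
    print(f"{name}: {output}")
```

Threads suit this case because each real model call would spend most of its time waiting on the network.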

Below, the models available to me are shown via the dropdown…

Conclusion

The importance of an AI productivity suite lies in its ability to empower individuals and organisations to achieve their goals faster, smarter, and with greater precision. By harnessing the power of AI to automate tasks, personalise experiences, and drive insights, a productivity suite becomes an indispensable asset in creating, deploying and managing generative AI applications.
