Blog

All Articles

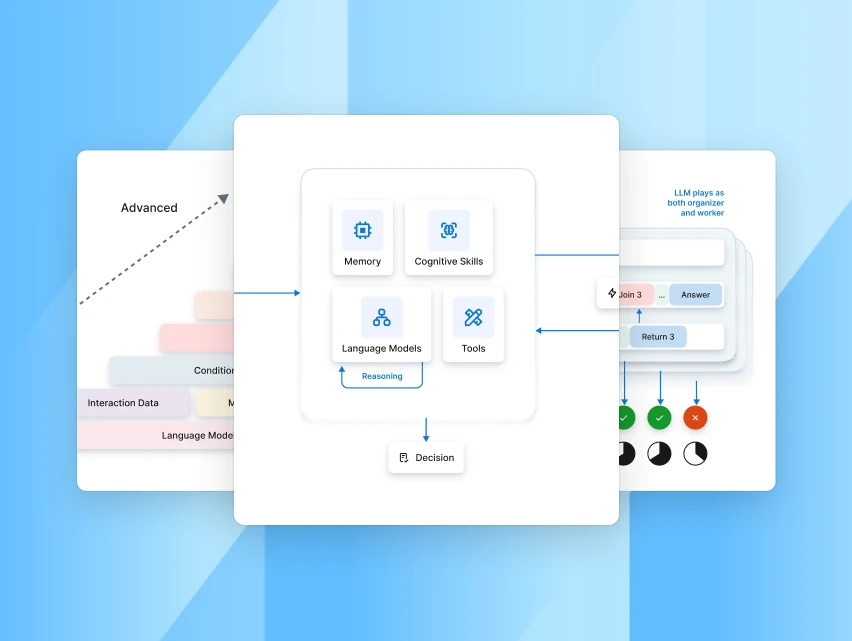

What is Agentic AI? Use Cases & How It Works (2026)

Agentic AI is an intelligent system that enables AI agents to make autonomous decisions. Discover how Agentic AI works, its use cases, and real-world examples.

Scaling Beyond Limits: How Leaders Reimagine with AI

At Reimagine Mumbai, leaders from BCG, Microsoft, Axis Bank, and Deloitte shared how AI agents are turning pilots into scalable agent ecosystems that transform work.

How generative AI is improving workplace productivity in 2026

Discover how generative AI is reshaping work, from automating tasks to enhancing decision-making. Learn 5 ways GenAI boosts workplace productivity.

Moving beyond answers to authentic dialogue

Discover how autonomous conversational AI and real-time voice intelligence transform service, resolve issues faster, and create natural customer experiences.
