Blog

All Articles

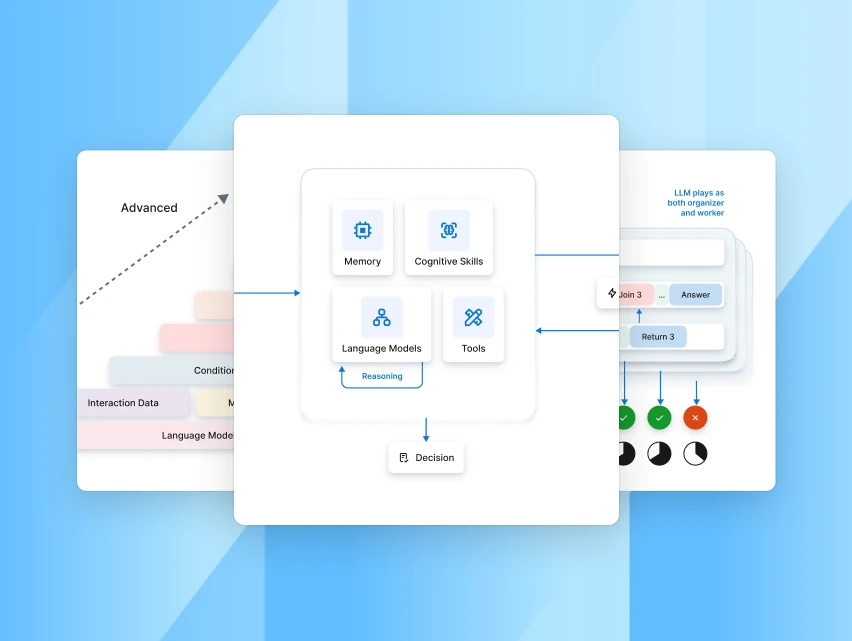

The Anatomy Of Chain-Of-Thought Prompting (CoT)

Dive into the anatomy of Chain-of-Thought (CoT) prompting — how it works, why it improves LLM reasoning, and tips for writing effective CoT prompts.

Contrastive Chain-Of-Thought Prompting

Contrastive Chain-of-Thought Prompting (CCoT) uses both positive & negative demonstrations to improve LLM reasoning.

OpenAI Structured JSON Output With Adherence

In the past, when using OpenAI’s JSON mode, there was no guarantee that the model output would match the predefined JSON schema. In my view, this made the feature unreliable in a production environment, where consistency is important…

The Chain-Of-X Phenomenon In LLM Prompting

Explore the ‘Chain-of-X’ phenomenon in LLM prompting, how CoT-style decomposition has evolved across reasoning, retrieval, verification, empathy & more.
