While AI agents wait to be called upon, Agentic AI sets its own goals and plans how to reach them.

The term AI agent isn’t new. It’s been around since 1998, when early systems were built to follow scripts and respond to pre-defined commands. They were fast, rule-bound systems that could execute tasks with precision, but with little sense of purpose or creativity.

Everything changed with the rise of generative AI. When large language models (LLMs) went mainstream in late 2022, these AI agents started to evolve. They could now reason, plan, and learn from feedback. They began using tools and solving problems with a degree of autonomy that seemed impossible just a few years earlier.

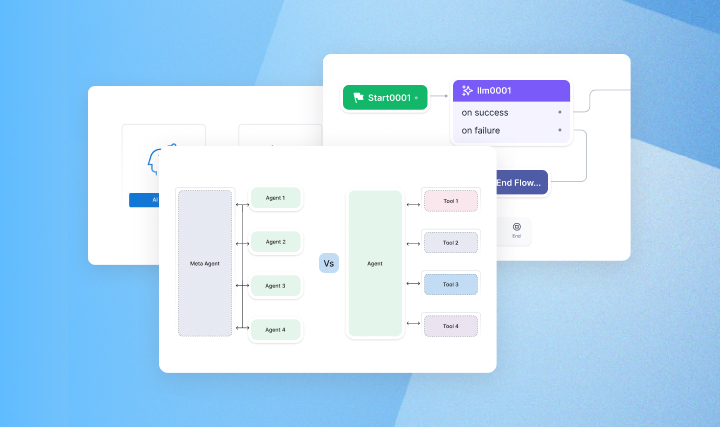

Today, these AI agents work as building blocks for Agentic AI. Unlike AI agents that act alone within fixed boundaries, agentic systems bring together multiple intelligent agents that collaborate, adapt, and make decisions in pursuit of shared goals.

The difference between AI agents and Agentic AI may sound subtle, but for enterprises, misunderstanding it can be costly. Companies rolling out advanced chatbots or task-driven agents might believe they’ve cracked AI transformation, while rivals, armed with agentic systems, are quietly scaling autonomy and strategic impact.

In this blog, we’ll help you understand the key differences between Agentic AI and AI agents and key use cases where they excel.

What enterprises need to know (The TL;DR)

Before diving deep into the differences between Agentic AI and AI agents, here are the key takeaways:

- AI agents ≠ Agentic AI. AI agents automate tasks, whereas Agentic AI drives outcomes by setting goals, planning steps, and adapting as it learns.

- Autonomy is the real differentiator. AI agents wait to be called. Agentic AI takes initiative and acts when needed, allowing enterprises to move from reactive to proactive self-optimising systems.

- AI agents and Agentic AI aren’t rivals; they’re layers of the same stack. Together, they create a loop where data turns into action and outcomes feed back into continuous learning.

- Governance matters more than ever. The more autonomy AI gets, the more governance it needs. It needs clear goals, audit trails, data quality, and human oversight.

What is an AI agent?

An AI agent is, at its core, a piece of software designed to act on behalf of a person or a system. It takes in information from its environment, processes it, and performs actions designed to reach a specific goal. Think of it as a digital worker that is skilled and efficient, but only within the boundaries it’s been given.

Despite the name, most AI agents aren’t truly independent. They don’t think for themselves or decide what matters most. They follow rules or patterns they’ve learned from data. A customer service chatbot, for instance, can handle a refund request perfectly well, but it won’t decide to redesign the entire customer journey to improve it.

That said, today’s AI agents are far from their early rule-based ancestors. Thanks to advances in machine learning and natural language processing, they can now understand intent, interpret nuance, and even learn from experience. Yet their scope remains fixed by design: they operate within explicitly defined boundaries and wait to be called upon rather than taking the initiative.

Characteristics of an AI agent

AI agents come in many forms, but these core characteristics shape how they operate and where they add the most value:

1. Autonomy

One of the main strengths of an AI agent is its ability to run with very little human supervision once it’s been set up. After deployment, it can process inputs, make decisions within a defined scope, and act on them in real time. That said, its autonomy has limits. An agent acts only according to the logic or models it has been given.

2. Task-specific

AI agents are specialists. Each one is built for a particular job, be it filtering emails, booking appointments, or extracting data. This focus allows them to perform those tasks with remarkable efficiency and precision. Because their goals are narrowly defined, they’re also easier to control and trust. But this same specialisation means they can struggle outside their comfort zone. For instance, an email filter won’t suddenly decide to help you prioritise your workload.

3. Reactive

AI agents are fundamentally reactive — they respond to inputs, events, or changes in their environment. When you send a message, update a record, or make a request, they act. Some modern agents go a step further by learning from these interactions and fine-tuning their performance, but these adjustments are incremental. They refine behaviour, not purpose.
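To make the reactive pattern concrete, here is a minimal sketch of an agent that does nothing until an event arrives and declines anything outside its registered scope. The `Event` and `ReactiveAgent` names, the event kinds, and the handler are illustrative assumptions, not part of any particular product.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Event:
    kind: str      # hypothetical event name, e.g. "refund_request"
    payload: dict


class ReactiveAgent:
    """Acts only when an event arrives; it never picks its own work."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[dict], str]] = {}

    def on(self, kind: str, handler: Callable[[dict], str]) -> None:
        """Register the one thing this agent knows how to do for an event kind."""
        self._handlers[kind] = handler

    def handle(self, event: Event) -> str:
        handler = self._handlers.get(event.kind)
        if handler is None:
            # Outside its defined scope: the agent declines rather than improvises.
            return "out_of_scope"
        return handler(event.payload)


agent = ReactiveAgent()
agent.on("refund_request", lambda p: f"refund issued for order {p['order_id']}")

print(agent.handle(Event("refund_request", {"order_id": "A123"})))
print(agent.handle(Event("redesign_customer_journey", {})))
```

The second call returns `out_of_scope`: the agent can refine how it handles refunds, but it has no mechanism for choosing a new purpose.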

Examples of AI agents

- Virtual assistants and chatbots: Think Siri, Alexa, or business chatbots that interpret voice or text commands and respond appropriately. They are always reactive, never proactive.

- RPA bots: In enterprises, robotic process automation (RPA) handles invoices, reports, and repetitive admin work. These bots perform flawlessly but lack the awareness to optimise the process itself.

- Spam filters: Email classification systems continuously refine their accuracy through feedback loops, but remain bound by their defined task.

- Algorithmic trading systems: Financial agents execute trades based on signals and pre-set conditions. They can learn patterns and react instantly, but still operate within strict strategic limits.

What is Agentic AI?

Agentic AI marks a genuine step forward from AI agents. Instead of simply executing instructions, agentic AI systems set their own goals, plan how to reach them, and adapt as circumstances change. They move from following directions to owning outcomes.

The word “agentic” suggests true agency — the ability to act independently, based on internal objectives rather than constant human prompting. Where a conventional AI agent waits for the next task, an agentic system understands the broader purpose and figures out how best to achieve it.

Take software testing, for instance. An AI agent might run tests when told to. An agentic system, however, identifies what needs testing, designs its own approach, allocates resources, runs diagnostics, interprets results, and refines its process to maintain long-term quality, all without waiting for instruction.

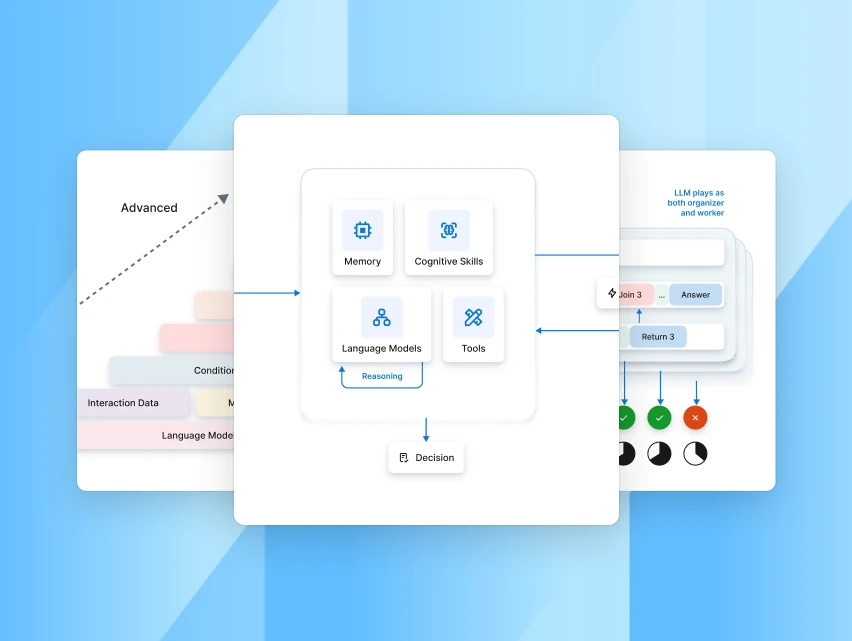

Characteristics of agentic AI

Agentic systems bring several capabilities together to achieve this kind of autonomy:

1. Goal formulation

They can break down high-level objectives into specific, actionable steps. Given a goal such as “improve application performance,” an agentic AI can determine what to analyse, when to test, and how to measure success.

2. Strategic planning

Beyond reacting in the moment, these systems think ahead. They balance short-term actions with longer-term strategies, allocating effort and resources intelligently over time.

3. Context awareness

Agentic AI recognises when its environment changes, whether that’s new data, shifting conditions, or unexpected results, and adjusts its plans accordingly.

4. Orchestrated collaboration

Unlike AI agents that work in silos, agentic systems thrive when they work collectively. Orchestration ensures that multiple agents, each with their own capabilities, can operate together without duplication, conflict, or drift from the overall objective. This principle creates cohesive system behaviour even when thousands of agents operate in parallel.

5. Learning and adaptation capabilities

Agentic systems are designed for continuous learning. They update their understanding in real time, refine strategies, improve predictions, and adapt to new inputs as they appear. This capability allows agentic systems to grow more effective the longer they operate.

6. Outcome ownership

Above all, agentic AI takes responsibility for the result. It doesn’t stop when a plan fails; it rethinks, replans, and tries again until the goal is achieved.
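The capabilities above can be sketched as a single control loop: plan, act, check the outcome, and replan if the goal has not been met. This is a simplified illustration under stated assumptions, not a production design. The `pursue_goal` function, the latency figures, and the two tuning steps are all hypothetical.

```python
from typing import Callable, List, Optional

Step = Callable[[], bool]  # a step returns True on success


def pursue_goal(
    make_plan: Callable[[int], List[Step]],
    goal_met: Callable[[], bool],
    max_attempts: int = 3,
) -> Optional[int]:
    """Plan, act, check the outcome, and replan until the goal is met.

    Returns the attempt number that succeeded, or None if the budget ran out.
    """
    for attempt in range(1, max_attempts + 1):
        plan = make_plan(attempt)      # goal formulation and planning
        for step in plan:
            if not step():             # a failed step ends this plan early
                break
        if goal_met():                 # judge the result, not the individual steps
            return attempt
    return None                        # budget exhausted: escalate to a human


# Hypothetical goal: bring response latency down to 400 ms or less.
state = {"latency_ms": 1000}


def tune_cache() -> bool:
    state["latency_ms"] -= 200
    return True


def add_index() -> bool:
    state["latency_ms"] -= 300
    return True


def make_plan(attempt: int) -> List[Step]:
    # The first attempt tries the cheap fix; later attempts add a second step.
    return [tune_cache] if attempt == 1 else [tune_cache, add_index]


result = pursue_goal(make_plan, lambda: state["latency_ms"] <= 400)
print(result, state["latency_ms"])
```

The first plan falls short, so the loop replans rather than stopping. The `max_attempts` cap is a deliberate design choice: outcome ownership still needs a point at which the system hands over to a person.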

👉 Want to learn more about agentic AI? Watch this video on how to navigate the Agentic AI landscape.

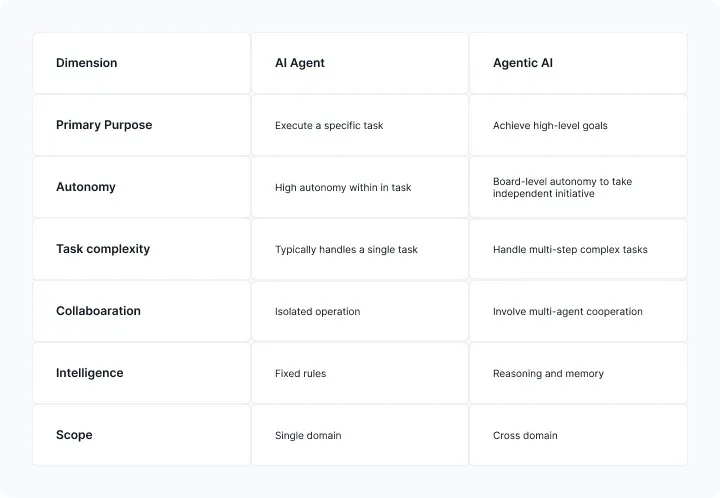

Agentic AI vs AI agent: Key differences leaders should know

While Agentic AI and AI agents are layers of the same stack, they serve fundamentally different purposes.

1. Purpose: Task execution vs goal achievement

AI Agents

AI agents are designed with a specific purpose in mind: to complete well-defined tasks within clear boundaries. They’re efficient, dependable, and excellent at doing what they’re told. A customer service chatbot handles enquiries, while a scheduling assistant books meetings. Each is built for precision.

Their success is measured by how well they perform those individual tasks, how quickly they respond, how accurately they process information, or how satisfied a user feels after an interaction. What they don’t do is question whether the task itself is the right one, or whether there’s a better way to reach the underlying goal.

Agentic AI

Agentic AI operates with a broader sense of purpose. Instead of merely carrying out instructions, it’s designed to achieve results. When given a goal like “improve customer experience,” agentic AI starts analysing patterns, identifies potential issues before they escalate, redesigns workflows, and even suggests changes to the product to reduce future complaints.

Agentic AI continuously evaluates whether its actions are moving the needle in the right direction and adjusts its approach accordingly. For instance, an agentic testing system doesn’t simply execute test cases; it ensures software quality as a whole, shifting priorities, refining strategies, and reallocating effort where it matters most.

In short, AI agents are task executors, whereas agentic AI is a problem-solver.

2. Autonomy: Task-specific vs independent initiative

AI Agents

Most AI agents are, by nature, reactive. They sit quietly until they’re called upon, spring into action when prompted, and then return to standby. Even the most advanced AI agents still rely on a user’s question or command to get started. They can process what they’re told and respond intelligently, but they don’t decide when to act.

This reliance on input creates clear limits. The agent can’t spot opportunities on its own or intervene before being asked. It won’t say, “I’ve noticed an issue and here’s how to fix it,” because that requires initiative, something it doesn’t have. In short, AI agents can act autonomously within a task, but they can’t choose which task to take on.

Agentic AI

Agentic AI, on the other hand, operates with true autonomy. It independently monitors its surroundings, recognises changes, and decides when to step in. It can identify problems, assess their significance, and take the first move without human prompting.

Take the example of customer support. An AI agent might respond to tickets as they come in, whereas an agentic system monitors customer sentiment in real time, spots early signs of frustration, and proactively reaches out with solutions before a complaint is even raised.
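One way to picture this kind of proactive trigger is a rolling average over recent sentiment scores that fires an outreach action once the trend turns negative. The sketch below assumes scores in the range -1 to 1 from some upstream sentiment model. The `SentimentWatch` class, the window size, and the threshold are illustrative choices only.

```python
from collections import deque
from typing import Deque, Optional


class SentimentWatch:
    """Tracks a rolling average of sentiment scores for one customer."""

    def __init__(self, window: int = 3, threshold: float = -0.3) -> None:
        self.scores: Deque[float] = deque(maxlen=window)
        self.threshold = threshold

    def observe(self, score: float) -> Optional[str]:
        """Record a score in [-1, 1]; return an action when the trend turns negative."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        average = sum(self.scores) / len(self.scores)
        if window_full and average < self.threshold:
            # No ticket has been raised yet; the system acts on the trend itself.
            return "proactive_outreach"
        return None


watch = SentimentWatch()
actions = [watch.observe(s) for s in (0.2, -0.4, -0.5, -0.6)]
print(actions)
```

Only the fourth observation triggers outreach, because the monitor waits for a full window before judging the trend. A single bad message is not treated as a pattern.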

3. Task complexity: single task vs complex, multi-step tasks

AI agents

AI agents are designed to handle specific tasks. An AI agent can answer questions, process transactions, schedule meetings, or pull data, but no single agent can do everything. And when a task involves several steps, dependencies, or contextual shifts, AI agents begin to struggle. They can complete individual actions but not coordinate them end-to-end.

Agentic AI

Agentic AI, by contrast, thrives on multi-step reasoning and dynamic decision-making, even when the path forward isn’t fully defined. Instead of just completing one action at a time, it maps out a sequence of actions needed to reach an overarching goal.

Take, for example, an agentic marketing system. Rather than merely sending emails when prompted, it could design an entire campaign by identifying target segments, crafting personalised messages, running A/B tests, analysing engagement, and fine-tuning the strategy as results come in.

4. Collaboration: Isolated vs multi-agent cooperation

AI Agents

Most AI agents operate on their own, following a fixed script or working within a controlled framework. When multiple agents do interact, their collaboration is usually choreographed by an orchestrator that decides who does what and when. They don’t naturally share information or adjust to one another’s strengths; they simply pass the baton from one stage to the next.

This kind of structure works well for predictable, repetitive processes, but it leaves little room for flexibility or initiative. Each agent remains focused on its own slice of the problem, without awareness of the bigger picture.

Agentic AI

Agentic AI, on the other hand, can communicate and coordinate with others, be they humans, machines, or data sources, to get the job done. It can adapt dynamically, share context, divide responsibilities, and adjust its actions based on what others are doing.

Imagine an agentic AI system working within a product development team. It might coordinate with developers to identify testing priorities, collaborate with analytics tools to gather performance data, and update documentation as code evolves, all while keeping everyone aligned on shared goals.

5. Intelligence: Rules vs Reasoning

AI agents

Most AI agents operate using relatively shallow intelligence. They rely on pre-set rules or pattern recognition to produce the right response at the right time. A chatbot, for example, can generate perfectly polished sentences but doesn’t actually understand what it’s saying. This works well for straightforward tasks but falls short when things get complicated.

Agentic AI

Agentic AI operates on a deeper level. It combines reasoning, memory, and strategic thinking to make sense of context. It remembers what it’s done before, evaluates situations, learns from experience, and uses that knowledge to choose the best course of action based on changing conditions.

For instance, if an agentic system encounters an error during a process, it doesn’t simply flag it for review. It analyses the root cause, identifies patterns across similar issues, and suggests the most effective way to fix or prevent the problem.

6. Scope of execution: Single domain vs cross domain

AI agents

Most AI agents operate within a single domain. For instance, a customer support bot will handle conversations within a company’s helpdesk platform but won’t step outside to coordinate with the billing system or product analytics. Similarly, a finance automation tool might process invoices in an ERP system but won’t update customer data in the CRM or notify operations when a payment fails.

This narrow scope is part of their design. Expanding beyond that familiar territory usually requires heavy re-engineering and can quickly degrade performance.

Agentic AI

Agentic AI, by contrast, takes a broader, more connected view. It can move freely between systems, combining insights from different platforms to achieve a broader goal. If resolving a customer issue means checking CRM data, analysing usage patterns, and triggering a support ticket, it can orchestrate all those steps seamlessly.

Key applications and use cases: Agentic AI vs AI agents

Both AI agents and agentic AI have found their place in today’s digital enterprises. However, they serve fundamentally different purposes. While AI agents specialise in precision and efficiency within defined limits, agentic AI thrives in fluid, complex environments where goals shift, systems overlap, and decisions require context.

AI agent use cases

1. Internal knowledge search

AI agents are already well embedded in enterprise operations and play a vital role in internal knowledge management. Employees can ask natural-language questions like, “Show me last year’s sales deck” or “Summarise the new HR policy”, and the agent instantly retrieves and condenses the most relevant documents.

Similarly, in customer support, these agents connect with CRMs and ticketing systems to handle enquiries and track orders. A customer asking “Where’s my order?” gets a fast, accurate response because the agent fetches data from shipping records and policy databases in real time.

2. Email filtering

Email overload is a universal frustration. AI agents built into tools like Outlook or Gmail now act as intelligent filters that sort, classify, and prioritise messages. They flag what’s urgent, extract tasks, and even draft suggested replies. Over time, they adapt to user habits and preferences, fine-tuning how they tag or group messages.

3. Smart scheduling and coordination

Scheduling is another space where AI agents have become indispensable. Integrated with calendar systems such as Google Calendar or Outlook, they identify meeting slots, manage conflicts, and adjust to shifting schedules.

An executive might simply say, “Book a 45-minute follow-up with the design team next week,” and the agent takes care of everything, from checking diaries and accounting for time zones to proposing the best time for everyone.

4. Reporting

In enterprises, AI agents drive tools like Copilot in Power BI and Tableau Pulse, where non-technical users can type questions such as “Compare Q3 and Q4 revenue in the UK” and instantly receive charts or summaries. These systems bridge the gap between data and decision-making, turning natural language into meaningful insight.

Agentic AI use cases

While traditional AI agents shine at executing defined tasks, agentic AI systems excel in complexity. Here’s how that difference plays out in practice.

1. Business workflow automation

Agentic AI is redefining how business processes are run. Unlike robotic process automation (RPA) bots that follow scripts, agentic systems actively manage and optimise workflows end-to-end. They can restructure processes for efficiency, adapt to context, and coordinate seamlessly across departments and tools.

Take customer onboarding, for example. An agentic system might automatically collect documentation, verify data, notify compliance teams, and flag bottlenecks in real time, all while learning from each case to streamline future operations. Similarly, in supply chain management, the same system could rebalance inventory, reroute shipments, or adjust logistics in response to delays or demand shifts.

2. AI co-workers

Agentic AI has given rise to personal AI co-workers. Unlike digital assistants that wait for instructions, these systems take ownership of workstreams. They can manage projects, coordinate with human colleagues, make informed decisions within defined authority, and escalate only when necessary.

In a marketing or product team, for instance, an agentic AI might oversee campaign rollouts, analysing customer data, briefing designers, tracking metrics, and adjusting strategies as results come in.

3. Autonomous financial management

In finance, agentic AI extends well beyond automated trading. It enables end-to-end financial intelligence by continuously analysing markets, rebalancing portfolios, and managing risk in response to shifting conditions. An agentic portfolio manager might identify new investment opportunities, coordinate with multiple financial institutions, and adjust exposure in real time when economic indicators change. It can even account for evolving regulations and compliance constraints, making sure that decision-making remains both agile and responsible.

4. Healthcare treatment orchestration

In healthcare, agentic AI is moving from diagnostics to full treatment coordination. Instead of simply identifying conditions, it manages entire patient journeys, from scheduling procedures and monitoring vitals to adjusting care plans and ensuring compliance with medical protocols.

For example, in a hospital setting, an agentic system could track patient recovery in real time, identify anomalies, coordinate with specialists, and update treatment recommendations based on progress.

5. IT operations

In enterprise IT, agentic AI is proving invaluable for rapid, autonomous response. When a potential security threat is detected, agents specialising in threat analysis, compliance, and response simulation can collaborate instantly to assess risks and propose mitigation actions.

Rather than waiting for human analysts to interpret every alert, the system filters noise, correlates incidents across networks, and prioritises real threats. Over time, it learns from outcomes, refining its detection and response strategies to improve accuracy and speed.

Challenges of AI agents and Agentic AI

As AI systems evolve from single-task executors to multi-agent collaborators, new complexities arise. While both AI agents and agentic AI promise efficiency and autonomy, each comes with its own set of challenges.

AI agents challenges

Despite their impressive capabilities, AI agents do not amount to truly autonomous intelligence. Their design, built largely around large language models (LLMs) and predefined workflows, gives them power, but also exposes several fundamental weaknesses:

1. Lack of real understanding

Most AI agents are great at recognising patterns but poor at understanding cause and effect. They know what tends to happen, but not why. In practice, that means they can’t reliably answer “what if” questions or adapt intelligently when circumstances shift.

2. Inherited weaknesses from LLMs

AI agents inherit both the brilliance and the flaws of the language models that power them. They can sound persuasive while being completely wrong, producing hallucinations. Their responses are also highly sensitive to phrasing, making them inconsistent across interactions. This brittleness limits their reliability, particularly in domains like finance, law, or healthcare, where precision is critical.

3. Incomplete autonomy and proactivity

While marketed as autonomous, most AI agents are still highly dependent on human input. They wait to be called, follow prescribed steps, and rarely take initiative. They lack the ability to set their own goals, self-correct when things go wrong, or reprioritise based on changing conditions. Even their interactions with other agents or humans tend to be scripted, and there’s little to no continuity or collaboration across time.

4. Weak long-term planning and recovery

AI agents also struggle with long-horizon reasoning. They can plan a few steps ahead but tend to lose track of context across complex, multi-stage processes. If something unexpected happens, say an API fails or a task produces ambiguous data, they often stall or repeat the same actions endlessly. Most agents also lack persistent memory, which makes them brittle in dynamic or high-stakes environments where consistency and foresight are essential.
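A common guard against the "repeat endlessly" failure mode is a bounded retry with backoff that gives up loudly once the budget is spent, so the failure can be escalated instead of looping. The sketch below is one simple way to do that. `run_with_retries`, `StepFailed`, and the flaky API are hypothetical names for illustration.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class StepFailed(Exception):
    """Raised when a step still fails after all retries."""


def run_with_retries(
    step: Callable[[], T],
    max_retries: int = 3,
    base_delay: float = 0.0,
) -> T:
    """Retry a flaky step a bounded number of times, then give up loudly."""
    last_error: Optional[Exception] = None
    for attempt in range(max_retries):
        try:
            return step()
        except Exception as exc:  # broad catch is deliberate in this sketch
            last_error = exc
            time.sleep(base_delay * (2 ** attempt))  # exponential backoff
    raise StepFailed(f"gave up after {max_retries} attempts") from last_error


# Hypothetical API that fails twice before recovering.
calls = {"n": 0}


def flaky_api() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary outage")
    return "ok"


result = run_with_retries(flaky_api)
print(result, calls["n"])
```

The important part is the explicit `StepFailed` at the end: a surrounding system can catch it and replan or hand over to a human, rather than leaving the agent stuck.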

5. Reliability and safety concerns

Trust remains a serious challenge with AI agents. When an AI agent generates a flawed plan or makes an incorrect assumption, it’s difficult to trace why. Their reasoning processes are largely opaque, and their outputs can change unpredictably under new conditions. Without causal grounding or formal verification methods, they cannot yet be relied upon in safety-critical settings such as infrastructure management or autonomous operations.

Agentic AI challenges

Though Agentic AI is a significant step forward, with this sophistication comes an entirely new set of challenges around coordination and control.

1. Unpredictable emergent behaviour

When intelligent agents interact, they sometimes produce results that no one designed or expected. These emergent behaviours can be helpful, revealing new solutions, but they can also be unstable or unsafe. In complex systems, this unpredictability may manifest as feedback loops, contradictory decisions, or unexpected escalations. Ensuring that such behaviour stays within safe and explainable boundaries remains one of the toughest challenges for developers of Agentic AI.

2. Coordination and communication bottlenecks

Multi-agent collaboration depends on clear communication, yet most current systems rely on natural-language exchanges that are prone to ambiguity and drift. Without a shared understanding of goals, context, or intent, agents can duplicate work, misinterpret tasks, or conflict over shared resources. Without structured coordination protocols, even small misunderstandings can lead to inefficiency or outright failure, particularly as the number of agents and tasks grows.
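As a rough illustration of what a structured coordination protocol could look like, the sketch below replaces free-form text with a typed message and a shared task board that rejects duplicate claims. The schema, the `Intent` values, and the `TaskBoard` class are assumptions made for this example, not an established standard.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Set


class Intent(Enum):
    REQUEST = "request"
    RESULT = "result"
    ERROR = "error"


@dataclass(frozen=True)
class AgentMessage:
    """A typed message: every field is explicit, so nothing is left to interpretation."""
    sender: str
    recipient: str
    intent: Intent
    task_id: str
    body: Dict[str, str] = field(default_factory=dict)


class TaskBoard:
    """Tracks claimed tasks so two agents do not duplicate work."""

    def __init__(self) -> None:
        self._claimed: Set[str] = set()

    def claim(self, message: AgentMessage) -> bool:
        if message.intent is not Intent.REQUEST:
            raise ValueError("only REQUEST messages can claim a task")
        if message.task_id in self._claimed:
            return False               # someone already owns this task
        self._claimed.add(message.task_id)
        return True


board = TaskBoard()
first = AgentMessage("planner", "tester", Intent.REQUEST, "T-1", {"action": "run_tests"})
duplicate = AgentMessage("planner", "analyst", Intent.REQUEST, "T-1", {"action": "run_tests"})

print(board.claim(first), board.claim(duplicate))
```

The second claim is refused because the task ID is already taken. With natural-language exchanges alone, both agents might have started the same work.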

3. Scaling and debugging complexity

As Agentic AI systems grow larger, tracing what went wrong becomes increasingly difficult. Each agent operates with its own logic, memory, and toolset, meaning that a single error may be buried under layers of reasoning and communication. Debugging requires unpacking long chains of interdependent actions — a process far more intricate than troubleshooting a standalone model. Moreover, adding more agents doesn’t always mean better results; without careful orchestration, it can increase noise, redundancy, and confusion.

4. Security and vulnerability risks

More agents mean more points of failure. If one agent in a network is compromised, through a prompt injection, data poisoning, or a faulty integration, the entire system can be affected. Malicious inputs or corrupted outputs can spread across shared memory or communication channels, leading to cascading errors. Without strong access control, authentication, and isolation between agents, these systems are vulnerable to exploitation or manipulation.
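Access control between agents can be as simple as a deny-by-default gateway that checks every tool call against an allowlist. The following is a minimal sketch of that idea. The `ToolGateway` class, agent IDs, and tool names are hypothetical, and a real deployment would also need authentication and isolation, which this example does not cover.

```python
from typing import Callable, Dict, FrozenSet, Iterable


class ToolGateway:
    """Single choke point through which every agent must call its tools."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., str]] = {}
        self._grants: Dict[str, FrozenSet[str]] = {}

    def register_tool(self, name: str, fn: Callable[..., str]) -> None:
        self._tools[name] = fn

    def grant(self, agent_id: str, tool_names: Iterable[str]) -> None:
        self._grants[agent_id] = frozenset(tool_names)

    def call(self, agent_id: str, tool_name: str, **kwargs: str) -> str:
        # Deny by default: an agent with no grant can call nothing.
        if tool_name not in self._grants.get(agent_id, frozenset()):
            raise PermissionError(f"{agent_id} may not call {tool_name}")
        return self._tools[tool_name](**kwargs)


gateway = ToolGateway()
gateway.register_tool("read_ticket", lambda ticket_id: f"ticket {ticket_id}: open")
gateway.register_tool("issue_refund", lambda order_id: f"refunded {order_id}")
gateway.grant("support_agent", ["read_ticket"])

print(gateway.call("support_agent", "read_ticket", ticket_id="42"))
try:
    gateway.call("support_agent", "issue_refund", order_id="A123")
except PermissionError as err:
    print(f"blocked: {err}")
```

If the support agent is compromised through a prompt injection, the damage is limited to what it was already allowed to do. It cannot reach the refund tool.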

5. Ethical and governance concerns

The distributed nature of Agentic AI raises difficult ethical and governance questions. When multiple agents contribute to a decision, who is accountable if something goes wrong? Biases can also multiply across agents, reinforcing one another and creating systemic inequities that are hard to detect. Over long periods, value drift — where agents optimise for goals that diverge from human intent — becomes a serious risk. Without robust oversight, transparent logging, and shared ethical frameworks, maintaining trust in these systems will be challenging.
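Transparent logging is easier to trust when the log itself is tamper-evident. One possible approach, sketched here under simple assumptions, is an append-only log in which each entry stores a hash of the previous one, so any later edit breaks the chain. The `AuditLog` class and the recorded actions are illustrative only.

```python
import hashlib
import json
from typing import Dict, List


class AuditLog:
    """Append-only log where each entry commits to the one before it."""

    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    @staticmethod
    def _digest(prev_hash: str, agent: str, action: str) -> str:
        payload = json.dumps([prev_hash, agent, action])
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def record(self, agent: str, action: str) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        self.entries.append({
            "agent": agent,
            "action": action,
            "prev": prev_hash,
            "hash": self._digest(prev_hash, agent, action),
        })

    def verify(self) -> bool:
        """Recompute the chain; any edited entry makes this return False."""
        prev_hash = ""
        for entry in self.entries:
            expected = self._digest(prev_hash, entry["agent"], entry["action"])
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.record("planner", "decomposed goal into 3 tasks")
log.record("tester", "ran regression suite")
intact = log.verify()

log.entries[0]["action"] = "something else"   # simulate tampering
tampered_ok = log.verify()
print(intact, tampered_ok)
```

A log like this answers the question of which agent did what and in which order, and it shows when the record has been altered. It does not settle who is accountable, which remains a governance decision.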

👉 Watch this webinar to learn how to move beyond AI islands and build enterprise-wide AI agents.

Where do AI agents and Agentic AI complement each other?

While AI agents and Agentic AI operate at different levels of intelligence, they’re not rivals; they’re partners. While AI agents handle the precision work that keeps operations running smoothly, Agentic AI orchestrates, adapts, and drives strategy. Together, they form a powerful ecosystem that blends reliability with autonomy.

AI agents deliver consistency. Agentic AI brings context. When combined, they bridge the gap between automation and true intelligence. The same collaboration plays out in practice:

- In customer experience, AI agents can respond instantly to customer questions, retrieve data from CRM systems, and process refunds, while agentic AI steps in to coordinate the bigger picture, such as detecting emerging issues, adjusting response strategies, and deciding when to involve a human agent.

- In operations and supply chain, AI agents handle the routine tasks, such as updating orders or logging deliveries, whereas Agentic AI oversees the flow of information across departments, spotting bottlenecks or forecasting delays before they happen.

- In IT and security, AI agents monitor systems, detect anomalies, and run diagnostics. Agentic AI analyses the context of each incident, determines its priority, and orchestrates a coordinated response by pulling in the right tools and teams automatically.

- In product development and research, AI agents collect insights, summarise findings, and automate repetitive analysis. Meanwhile, Agentic AI coordinates entire research efforts, such as assigning tasks, combining results, and drawing strategic conclusions.

Conclusion

AI agents showed the world how machines could automate. Agentic AI is now showing how they can collaborate. What makes this new generation of AI truly transformative isn’t its ability to act, but its ability to own outcomes. When Agentic AI's autonomy and collaboration are combined with the precision and reliability of AI agents, the result is a system that both thinks and delivers.

But unlocking that potential takes more than technology. It requires vision, trust, and the right foundation. That’s where Kore.ai comes in. Kore.ai offers a unified platform that empowers enterprises to design, deploy, and scale both AI agents and Agentic AI with confidence.

- Build smarter workflows: Automate with precision and orchestrate with intelligence using enterprise-grade tools.

- Simplify complexity: Gain visibility and control through governance, orchestration, and integration frameworks built for scale.

- Deploy anywhere: Operate seamlessly across cloud, on-premises, or hybrid environments with the reliability global organisations demand.

The future of AI isn’t about choosing between automation and autonomy; it’s about combining them.

Ready to see how AI agents and Agentic AI can transform your enterprise? Request a custom demo and discover how Kore.ai can help you turn intent into impact.

Not ready yet? Head to our resources section to explore where you can apply Agentic AI and AI agents in your business.

FAQs

Q1. What is the difference between an AI agent and Agentic AI?

An AI agent performs specific, predefined tasks, like answering queries, scheduling meetings, or filtering emails, based on given rules or models. Agentic AI, by contrast, sets its own goals, plans how to achieve them, adapts to new conditions, and coordinates multiple agents or tools to deliver outcomes. AI agents are the building blocks of agentic systems, which also include elements like an orchestrator, planner, memory, and access to enterprise data.

Q2. Can an AI agent be upgraded into an agentic AI system?

Yes, in some cases. An existing AI agent can serve as a component (or “player”) within an agentic AI architecture, but upgrading it means adding orchestration, goal decomposition, persistent memory, and multi-agent coordination. You can’t simply flip a switch; you need the right platform and governance.

Q3. What’s the cost difference between deploying AI agents and building agentic AI?

AI agents tend to have lower upfront costs, fewer moving parts, and quicker time-to-value. Agentic AI typically involves more infrastructure (memory layers, orchestration engines, tool integrations), higher run-time demands, and more complex architecture, so the investment and risk are greater.

Q4. How do you measure success or ROI for AI agents versus agentic AI?

For AI agents, metrics usually focus on “do the tasks correctly, quickly, at scale”. For agentic AI, metrics shift towards business outcomes: did the system improve customer retention, reduce cost per incident, optimise end-to-end workflows, or drive new capabilities? It’s less about tasks and more about outcomes.

Q5. What kind of governance or oversight do agentic AI systems require that agents don’t?

Agentic systems often require richer governance: orchestration logs, inter-agent coordination monitoring, causal reasoning audits, emergent behaviour review, memory traceability, and cross-agent accountability. With AI agents, you may focus on task logs and performance; with agentic AI, you need system-level transparency and controls.

Q6. How do you decide whether to start with AI agents or aim for agentic AI now?

If your problem is high-volume, predictable, and rule-based, then AI agents make sense.

If your task requires goal setting, adaptation across domains, multi-step workflows, and proactive intervention, then agentic AI may be the right target.

Often, the pragmatic path deploys agents first, builds trust, and then evolves into agentic systems when ready.
