The Framework for Responsible AI

Navigating Ethical Waters

Key Takeaways

- Anchor Core Principles: Build ethical, trustworthy AI systems by prioritizing transparency, inclusiveness, and ongoing monitoring.

- Establish Robust Governance: Define clear policies, conduct risk assessments, and assign dedicated roles to ensure compliance and ethical AI practices.

- Adhere to Compliance Needs: Proactively address tightening regulations with strong governance and data protection to mitigate legal risks.

- Ensure Data Integrity: Use high-quality, unbiased data to deliver fair and accurate AI outcomes across all user groups.

- Test and Monitor Consistently: Conduct regular testing and continuous monitoring to align AI with ethical standards and performance goals.

- Scale Responsibly: Focus on seamless integration and scalability to expand your AI while adhering to responsible practices.

- Optimize Tools Effectively: Leverage advanced platform features like retrieval mechanisms and feedback loops to enhance transparency and ethical behavior.

- Prioritize Long-Term Impact: Foster trust and drive lasting value by embedding Responsible AI into your core strategy.

1. Introduction

It’s 2024. Enterprises are on a relentless quest to redefine customer and employee experiences. Among the technological breakthroughs commanding center stage, Conversational AI emerges as a transformative catalyst, enabling organizations to engage with users in unprecedented, natural, and efficient ways. Recent leaps in generative AI models, exemplified by ChatGPT, have unveiled a universe of possibilities for Conversational AI.

As we peer into the near future, a profound shift awaits the customer service realm. According to Gartner’s projections, by 2025, up to 80% of call center agent tasks will be automated, a substantial leap from the 40% automation rate observed in 2022. This transformative forecast underscores the escalating significance of Conversational AI in reshaping the landscape of customer service operations.

Furthermore, the generative AI market stands at the threshold of remarkable expansion. Bloomberg Intelligence (BI) forecasts growth to $1.3 trillion over the next decade, up from a $40 billion valuation in 2022. Additionally, Gartner predicts that by 2025 GenAI will be embedded in 80% of conversational AI offerings, up from 20% in 2023. This growth signifies a paradigm shift in how businesses harness AI-driven conversational capabilities to revolutionize their operations, engage customers, and enhance efficiency.

Nonetheless, amidst the exhilaration of progress, it’s paramount to confront the challenges that accompany these technological leaps. The advancements have ushered in a new era fraught with risks like misinformation, brand reputation issues (misrepresentation of brand), improper responses, deepfakes (manipulated content), biases (discriminatory AI algorithms), IP infringement (intellectual property theft), and more. These emerging hazards underscore the immediate urgency for the conscientious and responsible adoption of AI. With AI technologies becoming increasingly pervasive, ethical and responsible usage takes precedence. The interplay between trustworthy, responsible AI practices and AI maturity is strikingly evident, with a resounding 85% of IT professionals recognizing that consumers are more inclined to choose a company that is transparent about its AI model development, management, and usage (Source: IBM Global AI Adoption Index 2022).

Key challenges and impacts

By 2026, over half of governments worldwide are expected to mandate responsible AI through regulations and policies aimed at safeguarding data privacy and promoting ethical AI practices, according to Gartner. However, integrating responsible AI poses critical challenges:

- Navigating government regulations

- Understanding compliance norms

- Addressing implications

- Assessing infrastructure readiness

- Managing audit costs

The need for a responsible AI Framework

The adoption of responsible AI raises significant questions and concerns among customers regarding bias, transparency, and ethical guidelines. To address these challenges and build public trust, collaborative efforts are crucial. Establishing standardized tools and methodologies for responsible AI adoption is essential to maximize societal benefits while mitigating potential harm. Through transparent processes and policies, organizations can promote ethical AI development and utilization, fostering long-term viability and sustainability.

At the forefront of AI innovation, we have gained unique insights into responsible AI through collaborations with global enterprises. Recognizing the importance of addressing ethical considerations, we have developed a comprehensive Responsible AI Framework. This framework encompasses ethical guidelines, strategies for regulatory compliance, and methods for addressing customer concerns. In our whitepaper, we invite you to explore these principles and practices, empowering organizations to leverage AI responsibly and ethically.

2. Core Principles of Responsible AI

In an era defined by rapid advancements in Artificial Intelligence (AI), it is imperative that we define and adhere to a set of foundational principles that guide responsible AI development and deployment. As AI technologies become increasingly integrated into our daily lives, organizations must embrace ethical considerations and responsible practices to ensure these innovations benefit society while mitigating potential risks. This section introduces the core principles that underpin responsible AI, providing a comprehensive framework for organizations and developers committed to navigating the intricate landscape of AI with integrity and foresight.

Principle One: Transparency

Transparency is the foundational principle of responsible AI, serving as a linchpin for building trust between AI systems and users. It involves open and honest communication about AI capabilities, limitations, and the nature of AI-generated responses. Inspectability and observability fuel this transparency, enabling users to delve into the inner workings of AI systems. By providing clear insights into their interactions with AI, organizations can build trust and ensure ethical use. According to the Cisco 2023 Data Privacy Benchmark Study, 65% of customers feel that the use of AI by organizations has eroded trust.

Embracing transparency in AI practices is essential to regain and reinforce that trust, ensuring that AI serves as a force for good in our evolving digital landscape. This principle entails:

- Explaining model capabilities and limitations: Organizations must provide detailed documentation and descriptions of their AI models, elucidating what they are designed to do and, equally importantly, what they are not capable of accomplishing. Transparency about model limitations fosters realistic expectations among users.

Example: An AI-powered communication tool designed for enterprise-level use may prove highly effective in aiding users with everyday queries and streamlining information retrieval from company databases. It’s essential, though, to transparently outline the tool’s capabilities and limitations, clearly defining what tasks it is designed to handle and where its functionalities may have constraints. This helps set realistic expectations for users and ensures a more informed and satisfactory user experience.

- Distinguishing AI responses from human responses: It is crucial to clearly demarcate AI-generated responses from human-generated ones. This differentiation prevents users from misconstruing AI as human intelligence, thereby mitigating the risk of deception.

Example: In a financial advisory chatbot, AI-generated responses can be explicitly marked as “AI Response” or accompanied by an AI icon.

- Explainable responses: Taking transparency to the next level involves providing explanatory responses. This entails offering insights into the rationale behind AI-generated answers, particularly in complex scenarios.

Example: A medical AI not only provides recommendations but also explains that its response is based on the latest medical research, empowering users with a deeper understanding of the decision-making process.
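The labeling and explanation practices described above can be illustrated with a minimal sketch. It assumes a hypothetical `Message` record and `render` helper; the label strings and the `rationale` field are illustrative choices, not features of any particular platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    """A single chat turn. All field names here are hypothetical."""
    text: str
    source: str          # "ai" or "human"
    rationale: str = ""  # optional short explanation of the answer's basis


def render(message: Message) -> str:
    """Prefix each message with a visible label so users can tell who wrote it."""
    label = "[AI Response]" if message.source == "ai" else "[Human Agent]"
    rendered = f"{label} {message.text}"
    # Attach the explanation only to AI answers that carry one.
    if message.source == "ai" and message.rationale:
        rendered += f"\n(Why this answer: {message.rationale})"
    return rendered


print(render(Message("Rates rose this quarter.", "ai", "based on the cited bulletin")))
```

Keeping the label in the rendering layer, rather than in the model output, means the marker cannot be omitted or altered by the model itself.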

Principle Two: Inclusiveness

Inclusiveness isn’t merely a principle; it embodies a fundamental commitment to crafting AI systems that transcend biases, toxicity, and discrimination. It serves as an ethical imperative, driving AI towards being a force for good and ensuring fairness and equity across diverse user demographics.

- Data diversity and governance: At the core of inclusiveness lies the bedrock of data. According to a Gartner report, data plays a pivotal role in constructing AI systems. AI models heavily depend on unbiased datasets for ethical and fair performance. Organizations must be meticulous in ensuring that their datasets are not only diverse but also free from biases, reflecting the true spectrum of potential use cases and the diversity of end users. The emphasis should be on the quality of data, specifically its neutrality, to prevent AI systems from inheriting or exacerbating existing biases. Failure to prioritize unbiased data undermines the ethical foundation of AI systems and increases the risk of producing biased outcomes.

An illustrative example highlighting the significance of unbiased data can be found in Amazon’s attempt to incorporate AI into its recruitment process. The reliance on historical data primarily from white male candidates led to significant bias in favor of this demographic. Despite subsequent attempts to rectify the tool’s bias, the risk of the system creating new discriminatory patterns remained, ultimately leading to the discontinuation of the tool in 2018. This incident underscores the critical importance of prioritizing unbiased data to foster fairness and inclusiveness in AI systems.

- Model governance: Inclusiveness extends to the development phase, where governance frameworks are pivotal. These frameworks encompass policies dictating the ethical use of AI models and the prioritization of appropriate metrics. When optimizing an AI model for accuracy, it becomes vital to contemplate the impact on underrepresented user groups. A commitment to such considerations ensures that the AI model’s impact is equitable and unbiased.

- Testing with diverse users: Inclusive AI development entails testing with a diverse user base at scale. This proactive approach anticipates the interactions of various user groups with the AI system, unveiling disparities in performance and discovering novel use cases. Through exhaustive testing across diverse demographics, organizations refine their AI models, fostering inclusivity and equitability.

- Continuous monitoring and retraining: Post-deployment, inclusiveness remains at the forefront. AI models require ongoing maintenance and retraining to sustain performance levels. A dedicated team must vigilantly monitor the model’s behavior, ensuring it aligns with intended objectives. Any deviations or issues should prompt immediate corrective action through retraining on new data.

- Open feedback loops: Implementing open feedback loops ensures continuous improvement in AI systems. By embracing a “human-in-the-loop” approach, organizations leverage human expertise to enhance AI performance. Incorporating concepts like “Continuous Improvement” and techniques such as Reinforcement Learning with Human Feedback (RLHF) creates a collaborative environment, allowing the AI to adapt and improve based on user experiences. This multifaceted approach proves instrumental in promptly identifying and rectifying issues, contributing to the refinement and optimization of AI systems.

For instance, consider how Uber uses AI to manage its army of drivers (around 3.5 million). Uber does not rely on human managers to decide whether a driver is better than others, which would surely unleash that manager’s preferences, biases, and subjective views, which are unreliable indicators of employees’ performance. Instead, its algorithms measure the driver’s number of trips, revenues, profits, accident claims, and passenger ratings. Granted, some drivers may be rated unfairly (too high or too low) because of factors unrelated to their actual performance, such as their gender, race, or social class, but in the grand scheme of things, the level of noise and bias will be marginal compared to the typical performance rating given to an employee by their boss.

Inclusiveness in AI isn’t an abstract concept; it’s a mission-critical endeavor. To excel, AI must serve everyone equitably, shunning biases and exclusion. Embracing the principle of inclusiveness empowers organizations to unlock AI’s full potential for fostering fairness, equity, and ethical interactions, as evident in Uber’s equitable driver management system.

Principle Three: Factual integrity

This principle serves as the cornerstone, ensuring that AI systems are unwavering beacons of truth and reliability. Here’s an in-depth look at what this entails:

- Anchoring responses in precision: Positioning AI responses with precision is akin to establishing your AI as an unwavering lighthouse in the expansive sea of information. Essential to fulfilling this pivotal role is training the AI using high-quality, reliable data sources. This foundational step ensures that every piece of information provided is not merely good but impeccably accurate, instilling trust in its users.

In industry terms, Large Language Models (LLMs) are strong in-context learners. Supplying contextual references during inference helps these models ground their responses and improves accuracy, although it does not guarantee correctness. Imagine a financial AI using an LLM to deliver real-time stock market updates: each detail is anchored in the market data supplied at inference time rather than in the model’s training memory alone, which makes the output more dependable.

Also, harnessing Kore.ai’s XO GPT Module with Small Language Models (SLMs) exemplifies precision in AI response anchoring. Our SLMs are finely tuned with less than 10 billion parameters, ensuring rapid and accurate responses. The XO GPT Module excels with features like conversation summarization and query rephrasing, enhancing clarity and agility in communication. This empowers businesses to deploy AI solutions that not only meet but exceed expectations, driven by secure and efficient conversational AI technology integrated within Kore.ai’s robust platform.

- Safeguarding against misinformation: AI should be a guardian of truth, resolutely opposing the spread of falsehoods. Responsible AI systems actively work against the propagation of false or misleading information. If, by any chance, they encounter inaccuracies, they swiftly rectify them, upholding the sanctity of factual integrity.

Example: Consider an AI tasked with generating news articles. Its mission is crystal clear: to uphold the highest standards of factual accuracy, ensuring that it never produces fake news. Instead, it stands as an unwavering defender of truthful reporting, setting the gold standard for journalistic integrity. By embracing the principle of factual integrity, organizations not only enhance the reliability of their AI systems but also contribute to a more trustworthy and fact-based AI landscape.

Principle Four: Understanding limits

Acknowledging the limitations of AI is essential for its responsible and effective deployment. While AI systems offer extraordinary capabilities, they are not without flaws. Organizations must recognize the boundaries within which their AI models operate to manage risks and achieve sustainable success.

Key considerations include:

- Recognize AI’s fallibility: Understand that AI systems, despite their power, are not error-proof. This recognition helps set realistic expectations for their performance and reliability.

- Commit to continuous evaluation: Establish processes to regularly assess AI models, systematically identifying their strengths, weaknesses, and areas for refinement. This ongoing evaluation ensures AI systems evolve alongside changing requirements.

- Adapt to evolving challenges: Continuously fine-tune AI systems to address emerging challenges and meet new use case demands. This adaptability is vital for maintaining relevance and effectiveness.

- Conduct use case assessments: For instance, a language translation AI should engage in routine evaluations to improve accuracy across various languages, ensuring inclusivity and reducing biases in translation.

- Mitigate potential risks: By understanding and addressing limitations, organizations can proactively prevent potential issues that could harm trust, functionality, or outcomes.

- Enhance strategic value: Embracing this principle allows organizations to maximize AI’s benefits while safeguarding against risks, ensuring AI remains a reliable and valuable asset over time.

By following these guidelines, organizations can responsibly navigate the evolving AI landscape, leveraging its capabilities while respecting its boundaries.

Principle Five: Governance

In an era where data protection regulations like GDPR are continuously evolving, the need for effective governance in the AI landscape is paramount. The Cisco 2022 Consumer Privacy Survey highlights disparities in GDPR awareness across countries, with Spain at 28% and the UK at 54%, while India stands out with an impressive 71% awareness of the draft Digital Personal Data Protection Bill (DPDPB). These disparities underscore the intricate global interplay between data protection and AI governance.

Furthermore, the Gartner Market Guide for AI Trust, Risk, and Security Management 2023 predicts that global companies may face AI deployment bans from regulators due to noncompliance with data protection or AI governance legislation by 2027. This prediction highlights the urgent imperative for organizations to establish robust governance practices in the realm of AI.

Effective governance in AI comprises several interconnected components:

- Clear policies and controls: Organizations must develop and rigorously enforce guidelines and policies for AI system development and deployment. These policies should encompass ethical considerations, user privacy, and data security. For example, an AI-powered customer service chatbot should strictly adhere to data privacy policies, ensuring responsible and ethical handling of user data.

- Comprehensive records: Maintaining meticulous records of AI model usage is vital for accountability and transparency. These records should encompass details about data sources, parameters, and decisions made during development. In sectors like medical AI, these records aid in tracing the origins of errors and demonstrating accountability to regulatory bodies.

- Regulatory compliance: Keeping pace with evolving data protection and AI governance laws is essential. Organizations must adapt their governance practices to align with shifting regulations. A proactive approach not only ensures compliance but also mitigates the risk of potential AI deployment bans.

- Ethical considerations: Beyond legal compliance, ethical considerations hold great significance in AI governance. Upholding high ethical standards in AI development and usage is essential for fostering trust among users and stakeholders.

- Transparency and accountability: Transparency in AI decision-making processes and accountability for AI-driven outcomes are pivotal. This transparency not only builds trust but also ensures the responsible deployment of AI.

As the regulatory environment continues to evolve, organizations that prioritize these interconnected components can navigate the intricate landscape with confidence, ensuring not only legal compliance but also the ethical and responsible utilization of AI technologies.

Principle Six: Testing rigor

In the context of responsible AI, rigorous testing is the bedrock upon which ethical and effective AI deployment is built. It plays a pivotal role in identifying and mitigating biases, inaccuracies, and gaps within AI systems. In this section, we will delve into the core principles and concrete practices for achieving robust AI testing.

Comprehensive testing for biases, inaccuracies, and gaps

Effective AI testing hinges on a steadfast commitment to recognizing and rectifying biases, inaccuracies, and gaps that may exist within AI systems. This commitment spans two crucial phases:

- Pre-launch testing: Prior to deploying an AI system, organizations should undertake thorough testing to proactively unearth any latent biases, inaccuracies, or gaps. This preemptive approach allows for adjustments and fine-tuning, mitigating potential issues before they impact end-users.

Example: Consider a language translation AI designed for diverse users. Pre-launch testing would involve evaluating the AI to ensure it offers equitable translations, avoiding favoritism towards one dialect over another. This pre-launch assessment is essential for eliminating linguistic bias.

- Post-launch testing: Recognizing the dynamic nature of AI systems, post-launch testing assumes equal importance. AI behavior can evolve over time due to changing data inputs and usage patterns. Therefore, continuous monitoring and testing are vital to detect and rectify any biases, inaccuracies, or gaps that may emerge during real-world use.

Practical testing guidelines:

- Diverse data sets: During testing, employ diverse and representative data sets that mimic real-world scenarios. This approach aids in uncovering biases that may arise from underrepresented or skewed data sources. For example, testing facial recognition AI with a broad dataset ensures it performs accurately across various demographics.

- Ethical guidelines: Establish well-defined ethical guidelines for testing, precisely articulating what constitutes bias, inaccuracy, or a gap within the context of your AI system. Ensure these guidelines align with your organization’s broader ethical values and standards. For instance, set clear boundaries on AI behavior to avoid promoting harmful content.

- Continuous evaluation: Implement a framework for ongoing evaluation and retesting. Regularly review and update testing protocols to adapt to the evolving behavior of AI systems and emerging ethical considerations. For instance, continually assess recommendation algorithms for content platforms to avoid inadvertently promoting misinformation.

- Interdisciplinary collaboration: Form interdisciplinary teams comprising domain experts, ethicists, data scientists, and AI engineers. Collaboration ensures a well-rounded perspective on testing, guaranteeing a thorough evaluation. For example, a team of professionals from different backgrounds can assess AI in healthcare to ensure it adheres to medical ethics and patient privacy regulations.

- User feedback integration: Encourage users to provide feedback on AI system performance. User input can be a valuable source of insights for identifying and rectifying biases, inaccuracies, or gaps. For example, soliciting feedback from users of a voice recognition AI helps identify and address issues related to dialect recognition.

By placing a strong emphasis on comprehensive testing in both pre-launch and post-launch phases, your organization can uncover and mitigate biases, inaccuracies, and gaps. This ensures that AI systems operate ethically, equitably, and effectively in real-world scenarios. The adoption of practical guidelines and an unwavering commitment to continuous evaluation fosters trust and confidence in AI systems among users and stakeholders.
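One simple way to surface the performance disparities discussed in this section is to compare accuracy across user groups on a labeled test set. The sketch below is illustrative only: the function names and the `(group, predicted, expected)` record layout are assumptions, and accuracy is just one of several fairness metrics an organization might choose.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group.

    records: iterable of (group, predicted, expected) tuples (hypothetical layout).
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, expected in records:
        total[group] += 1
        if predicted == expected:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def max_accuracy_gap(records):
    """Difference between the best-served and worst-served group."""
    scores = accuracy_by_group(records)
    return max(scores.values()) - min(scores.values()) if scores else 0.0


test_records = [
    ("dialect_a", "yes", "yes"),
    ("dialect_a", "no", "yes"),
    ("dialect_b", "yes", "yes"),
    ("dialect_b", "no", "no"),
]
print(accuracy_by_group(test_records))
print(max_accuracy_gap(test_records))
```

A gap above an agreed threshold can serve as a release gate before launch and as an alert condition after launch.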

Principle Seven: Monitoring

The concept of continuous monitoring serves as the ultimate safeguard in the realm of responsible AI. It encompasses a proactive approach to ensure that AI systems remain aligned with ethical and functional standards. In this section, we will explore the practicalities of continuous monitoring in greater detail.

- Ongoing performance assessment: To uphold responsible AI, organizations must commit to the ongoing evaluation of AI model performance. This involves regular and systematic checks to determine how well the AI is functioning, identify any deviations from expected behavior, and gauge its impact on end-users.

Example: Continuous monitoring could involve tracking the accuracy and fairness of an AI-driven content recommendation system. Regular assessments would help pinpoint any anomalies or deviations from desired performance metrics.

- User feedback incorporation: Soliciting feedback from users is an invaluable aspect of continuous monitoring. It provides a real-world perspective on the AI’s impact and helps organizations understand user experiences and concerns. This feedback loop is essential for identifying areas where improvements or adjustments are needed.

Example: For an AI chatbot used in customer support, actively gathering and analyzing user feedback can uncover patterns of frustration or dissatisfaction. This feedback can then inform adjustments to improve the chatbot’s effectiveness and user satisfaction.

- Iterative improvement: Continuous monitoring is an ongoing process that involves a cycle of assessment, feedback collection, analysis, and refinement. This iterative approach ensures that AI systems evolve to meet changing user needs and ethical standards.

Example: A language translation AI that serves a global user base would continually evaluate its performance by monitoring user feedback and making regular updates to enhance translation accuracy and inclusivity.

This practice ensures that AI systems remain aligned with ethical considerations and user expectations over time. In the subsequent sections of this whitepaper, we will delve further into each of these facets, offering practical insights and real-world examples to illustrate their application across a variety of AI contexts.

3. Recommended Practices

Responsible AI underpins trust, compliance, and sustainable practices. Building on our ethical foundation, let’s delve into actionable steps empowering organizations to integrate responsible AI into conversational AI initiatives—from model inception to deployment and beyond. These strategies foster a culture of adoption, ensuring transparent communication, risk mitigation, and improved user interactions.

One of the cornerstones of responsible AI is the data upon which models are trained. Organizations must meticulously curate high-quality, diverse, and representative datasets. Bias in training data can lead to biased AI behavior. Therefore, it’s imperative to eliminate or mitigate bias from the outset. For instance, when training a conversational AI model for customer support, ensure that the training data encompasses a wide array of user demographics to prevent any unintentional biases.

Rigorous testing is the bedrock of responsible AI. Organizations should subject their conversational AI models to extensive testing under diverse and real-world scenarios. This includes testing the model’s responses across various user demographics and contexts to identify and rectify any biases, inaccuracies, or gaps.

Example: A travel agency deploying a conversational AI for booking vacations should conduct testing with users from different countries and backgrounds to ensure that the AI provides equitable and culturally sensitive responses.

To fortify the reliability of AI response systems, the integration of retrieval mechanisms emerges as an important consideration. These mechanisms play a crucial role in validating AI-generated responses by cross-referencing them with trusted sources or comprehensive databases, thus guaranteeing the precision and currency of the information dispensed. In essence, retrieval mechanisms function as a protective barrier, effectively mitigating the potential for misinformation.
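To make the cross-referencing idea concrete, here is a deliberately simplified sketch that checks whether an AI-generated answer is supported by any passage from a trusted source. The function names, the `threshold` value, and the word-overlap scoring are all assumptions made for illustration; a production system would more likely use a search index or embedding similarity.

```python
import re


def tokens(text):
    """Lowercased word and number tokens of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_support(answer, trusted_passages):
    """Return (passage, score); score is the share of answer tokens found in the passage."""
    answer_tokens = tokens(answer)
    if not answer_tokens or not trusted_passages:
        return None, 0.0
    scored = [
        (passage, len(answer_tokens & tokens(passage)) / len(answer_tokens))
        for passage in trusted_passages
    ]
    return max(scored, key=lambda pair: pair[1])


def is_supported(answer, trusted_passages, threshold=0.6):
    """True when some trusted passage covers enough of the answer (threshold is illustrative)."""
    _, score = best_support(answer, trusted_passages)
    return score >= threshold


passages = [
    "Refunds are issued within 14 days of purchase.",
    "Shipping takes 3 to 5 business days.",
]
print(is_supported("Refunds are issued within 14 days.", passages))
print(is_supported("We offer a lifetime warranty.", passages))
```

Word overlap cannot detect negation or subtle contradictions, so this stands in for the retrieval step only; it shows where a cross-check sits in the flow, not how strong that check needs to be.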

No single AI model is perfect. Organizations should consider using an ensemble of models, each with its strengths and limitations, to provide more comprehensive and accurate responses. By orchestrating multiple models, you can leverage the strengths of each while mitigating their respective weaknesses.

Example: An e-commerce platform can combine a sentiment analysis model, a recommendation engine, and a natural language understanding model to provide personalized and context-aware product recommendations, improving the overall user experience.

Transparency is a fundamental principle of responsible AI. Ensure that your conversational AI system is designed to provide explanations for its responses when requested. Users should have visibility into how and why the AI arrived at a particular answer. This transparency fosters trust and empowers users with insights.

Example: A healthcare AI chatbot can explain the basis for its medical advice by referencing the latest research and clinical guidelines, enhancing user confidence in the recommendations provided.

Inclusiveness and fairness are pivotal. Implement safeguards to identify and mitigate harmful and biased interactions. Develop algorithms that can detect and handle harmful content, ensuring that users are protected from toxic conversations.

Example: A social media platform’s AI moderation system should be equipped to detect hate speech, harassment, and harmful content, taking swift action to remove or warn against such interactions.

Set up validation guardrails to thoroughly review AI-generated responses before they are presented to users. These guardrails act as a safety net, rigorously checking responses for potential biases, inaccuracies, or any harmful content, guaranteeing that only pertinent and trustworthy information is delivered.

For instance, in the realm of financial advisory services, validation guardrails can be employed to scrutinize AI-generated investment advice. This ensures that the advice provided is not only financially sound but also free from any misleading or detrimental content.
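A validation guardrail of this kind can be sketched as a set of checks that run before a response reaches the user. Everything in the example below is an assumption made for illustration: the `GuardrailResult` type, the blocked phrases, the digit pattern, and the length limit would all be defined by an organization's own policies.

```python
import re
from dataclasses import dataclass, field


@dataclass
class GuardrailResult:
    """Outcome of a guardrail check (hypothetical type)."""
    allowed: bool
    reasons: list = field(default_factory=list)


# Illustrative policy values only; real lists come from compliance teams.
BLOCKED_TERMS = {"guaranteed returns", "risk-free"}
LONG_DIGIT_RUN = re.compile(r"\b\d{12,19}\b")  # crude account/card number pattern


def check_response(text, max_chars=1200):
    """Collect every policy violation found in an AI-generated response."""
    reasons = []
    lowered = text.lower()
    for term in sorted(BLOCKED_TERMS):
        if term in lowered:
            reasons.append(f"blocked term: {term}")
    if LONG_DIGIT_RUN.search(text):
        reasons.append("possible account or card number")
    if len(text) > max_chars:
        reasons.append("response too long")
    return GuardrailResult(allowed=not reasons, reasons=reasons)


print(check_response("This fund offers guaranteed returns."))
```

Returning the list of reasons, rather than a bare pass or fail, gives reviewers and audit logs the detail they need to understand why a response was held back.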

Maintaining detailed logs of user interactions with AI is crucial, serving as more than just conversation records. These logs provide the backbone for auditing and ensuring accountability within the AI system. Acting as diagnostic tools, they offer a robust debug facility, allowing for deep analysis of AI behavior and efficient issue triaging to ensure peak performance and regulatory compliance. Additionally, these logs possess auto-detection capabilities to highlight potential problems and trails of concern, actively aiding in assessing performance and maintaining optimal AI functionality.
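As a rough illustration of logs that support both auditing and automatic problem detection, the sketch below builds a structured log entry and attaches flags when a simple rule fires. The field names, the flag names, and the 0.5 confidence cutoff are hypothetical and do not describe any specific platform's log format.

```python
import json
from datetime import datetime, timezone


def make_log_entry(session_id, user_text, ai_text, confidence, low_confidence_below=0.5):
    """Build one audit record and flag entries that deserve review."""
    flags = []
    if confidence < low_confidence_below:
        flags.append("low_confidence")
    if not ai_text.strip():
        flags.append("empty_response")
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "session_id": session_id,
        "user_text": user_text,
        "ai_text": ai_text,
        "confidence": confidence,
        "flags": flags,
    }


def to_json_line(entry):
    """Serialize an entry as a single JSON line, suitable for append-only log files."""
    return json.dumps(entry, sort_keys=True)


entry = make_log_entry("session-1", "What is my balance?", "", 0.2)
print(to_json_line(entry))
```

Because each entry is self-describing, flagged records can be filtered later for triage without re-running the conversation.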

Responsible AI doesn’t end at deployment. Continuously monitor the performance of your AI models and actively solicit user feedback. Regular assessments help detect and rectify issues promptly, ensuring that AI systems evolve to meet changing user needs and ethical standards. In this endeavor, the Kore.ai XO Platform offers robust support for ongoing evaluation. Kore.ai XO Platform’s Live Feedback capability empowers you to proactively collect user feedback during their interactions with the virtual assistant.

When issues or limitations are identified, organizations should be prepared to swiftly roll out targeted model updates. Having mechanisms in place for rapid updates ensures that problems are addressed in a timely manner, reducing the potential impact of AI shortcomings.

Example: A weather forecasting AI should promptly update its model when new data becomes available to improve the accuracy of its predictions.

Develop and implement risk mitigation controls to proactively manage potential risks associated with AI. These controls can include automated triggers for human intervention when the AI encounters uncertain or high-risk situations.

Example: An autonomous vehicle AI should have safety mechanisms that enable it to hand control back to a human driver in situations of uncertainty or extreme conditions.
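
An automated trigger for human intervention can be sketched in Python as follows. This is a minimal illustration, assuming the model exposes a confidence score and that each response is tagged with a topic. The `route_response` function, the 0.75 threshold, and the topic names are hypothetical and not part of any platform API.

```python
from dataclasses import dataclass

# Hypothetical values for illustration; tune them for your own use case.
CONFIDENCE_THRESHOLD = 0.75
HIGH_RISK_TOPICS = {"medical", "legal", "investment"}


@dataclass
class ModelOutput:
    text: str
    confidence: float  # assumed to be in the range [0.0, 1.0]
    topic: str


def route_response(output: ModelOutput) -> str:
    """Return "human" when the output should be escalated, else "ai"."""
    if output.topic in HIGH_RISK_TOPICS:
        return "human"  # high-risk topics always get human review
    if output.confidence < CONFIDENCE_THRESHOLD:
        return "human"  # the model is too uncertain to answer alone
    return "ai"


print(route_response(ModelOutput("Your order shipped.", 0.93, "orders")))
print(route_response(ModelOutput("Buy this stock.", 0.98, "investment")))
```

Checking the topic before the confidence score means a high-risk request is escalated even when the model is very confident, which is usually the safer default.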

AI models benefit from fresh data. Regularly update your models with new, relevant data to ensure their ongoing accuracy and relevance. Data staleness can lead to outdated responses and reduced user satisfaction.

Example: An AI-powered financial advisor should periodically refresh its knowledge base with the latest financial market data and economic trends to provide up-to-date investment advice.

Download the Responsible AI Checklist.

Take the first step towards leading your organization, responsibly.

4. Governance Considerations

In the pursuit of deploying responsible AI systems, governance considerations occupy a central role. Effective governance not only safeguards against unintended consequences but also cultivates trust among users and stakeholders. In a Cisco survey, respondents were asked whether governments, organizations, or individuals should have the primary role in protecting personal data. More than half (51%) said national or local government should play the primary role. Further, Gartner predicts that by 2025, regulations will necessitate a focus on AI ethics, transparency, and privacy, which will stimulate, rather than stifle, trust, growth, and better functioning of AI around the world. These findings reflect broader societal expectations around data protection and should inform governance strategies for data handling and privacy within AI systems.

In this section, we delve into the key facets of governing responsible AI systems, offering insights and best practices for organizations to navigate this critical terrain successfully.

Responsible AI systems begin with the formulation of clear and comprehensive usage policies. These policies serve as the foundation upon which ethical and responsible AI practices are built. They articulate the boundaries within which AI systems operate, defining acceptable use cases and delineating the scope of system capabilities. Importantly, these policies should be rooted in a thorough understanding of model evaluations, ensuring that the AI system aligns with ethical standards and societal norms.

Identifying potential harms and devising effective mitigation strategies is an indispensable part of responsible AI governance. Model risk assessments are the systematic evaluation of AI models to proactively identify vulnerabilities, biases, or unintended consequences that may arise during deployment. Organizations should develop a rigorous framework for conducting these assessments, which may include techniques such as bias detection, fairness analysis, and robustness testing.
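
As one illustration of fairness analysis, the sketch below computes a demographic parity gap: the difference between the highest and lowest rate of positive outcomes across user groups. This is only one of several possible fairness metrics, and the source does not prescribe a specific one. The function names and the sample data are hypothetical.

```python
from collections import defaultdict


def positive_rates(records):
    """Return the share of positive outcomes for each group.

    `records` is an iterable of (group, outcome) pairs, where outcome is 0 or 1.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Return the difference between the highest and lowest positive rate."""
    rates = positive_rates(records)
    return max(rates.values()) - min(rates.values())


# Made-up sample: group A is approved 3 times out of 4, group B once out of 4.
sample = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]
gap = demographic_parity_gap(sample)
print(f"Demographic parity gap: {gap:.2f}")
```

A gap near zero suggests the groups receive positive outcomes at similar rates. What counts as an acceptable gap is a policy decision that the risk assessment framework would need to define.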

The deployment of AI model updates should be subjected to approval workflows that incorporate human oversight. This ensures that model updates comply with established governance guidelines and ethical considerations. Human oversight is essential in evaluating the impact of model changes, validating their alignment with organizational objectives, and preventing the introduction of unintended biases or risks.

Transparency is the cornerstone of trust in AI systems. Organizations should commit to disclosing detailed transparency reports that shed light on model performance and usage data. These reports provide insights into the decision-making processes of AI models, the data sources used, and the performance metrics achieved. By openly sharing this information, organizations demonstrate their commitment to accountability, ethical behavior, and continuous improvement.

To facilitate the continuous refinement of AI systems, organizations should establish robust user reporting mechanisms. These mechanisms, integral to a collaborative approach in system enhancement, serve as a vital component in the iterative improvement process. By incorporating accessible and user-friendly reporting tools, organizations can encourage users to submit feedback regarding problematic AI responses or experiences.

To ensure effective governance in AI systems, organizations must establish dedicated roles responsible for oversight. These roles, including AI ethics officers, compliance officers, and data stewards, play a crucial role in monitoring AI behavior, ensuring policy compliance, and proactively addressing ethical concerns. By appointing these roles, organizations demonstrate their commitment to ethical AI practices and accountability.

Enterprises are emerging as key players in the responsible deployment of AI. While government regulations are crucial, the rapid pace of technological advancement requires enterprises to take the lead in ensuring transparency and fairness. Generative AI presents vast opportunities for innovation and economic growth, with predictions that 80% of enterprises will soon deploy these models. Although governments strive to regulate AI, the evolving tech landscape demands that enterprises implement robust safety measures, transparency, and fairness. By adopting open-source models and self-regulation, enterprises can democratize AI access and foster trust. Responsible AI practices will become a key competitive advantage, with Gartner predicting significant improvements in adoption and business outcomes for those prioritizing AI safety.

“Self-regulation and responsible use of AI are the surest ways to success and growth in the AI era. We believe responsible AI frameworks allow enterprises to harness the power of AI while ensuring fairness, transparency, integrity, inclusivity and accountability. The ability to communicate fairness and transparency of their AI offerings will become a key competitive differentiator for businesses because that’s what their customers expect from them.”

Raj Koneru

CEO and Founder, Kore.ai

Who’s responsible for Responsible AI?

Businesses must lead while the wheels of government turn

Read the Forbes blog.

5. Technology Capabilities

In this section, we provide an in-depth overview of Kore.ai XO platform capabilities, specifically tailored for the implementation of responsible AI. These capabilities are pivotal in ensuring that AI systems not only meet regulatory requirements but also adhere to ethical standards, enhance user experiences, and foster trust and accountability.

Conversational design tools

Kore.ai XO Platform offers a suite of conversational design tools. These tools empower organizations to craft AI interactions that are not only efficient and effective but also aligned with responsible AI principles. They facilitate the creation of user-friendly conversational interfaces that prioritize clarity and ethical considerations. They also enable users to build APIs and GenAI apps using prompt templates, prompt pipelines, and chaining.

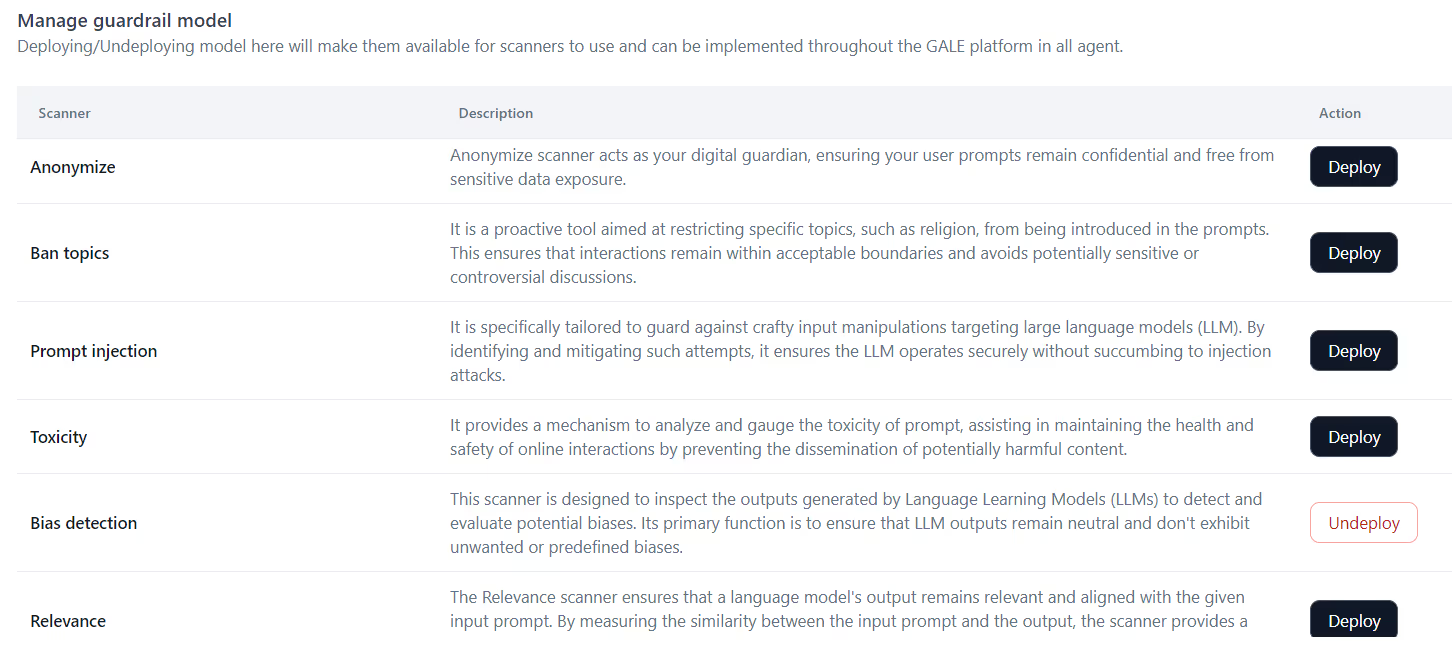

Guardrails and validation

Effective guardrails and validation mechanisms are crucial for the responsible deployment of AI. The Kore.ai XO Platform provides robust tools to set and enforce these guardrails, ensuring AI responses adhere to predefined ethical standards. Validation processes further ensure the accuracy and ethical integrity of responses, adding an essential layer of protection against unintended consequences.

Before interacting with large language models (LLMs), any personally identifiable information (PII) or sensitive data is anonymized to protect user privacy.

Smaller models are employed to screen both user input and AI responses, identifying and filtering out any harmful content.

The platform supports defining fallback behaviors for instances where a guardrail blocks an AI response, ensuring seamless user experience.
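
The guardrail steps above can be sketched in Python. This is a simplified illustration, not the platform's implementation: the regular expressions cover only basic email and phone formats, the keyword check is a hypothetical stand-in for the smaller screening models, and all names and messages are assumptions.

```python
import re

# Simplified patterns for illustration; real PII detection needs broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

# Hypothetical stand-in for a screening model that flags harmful content.
BLOCKED_TERMS = {"guaranteed returns"}

FALLBACK_MESSAGE = "I can't help with that. Let me connect you with an agent."


def anonymize(text: str) -> str:
    """Replace each detected PII value with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def apply_guardrail(response: str) -> str:
    """Return the fallback message when the response contains a blocked term."""
    lowered = response.lower()
    if any(term in lowered for term in BLOCKED_TERMS):
        return FALLBACK_MESSAGE
    return response


print(anonymize("Mail jane.doe@example.com or call 555-123-4567"))
print(apply_guardrail("This fund offers guaranteed returns."))
```

Anonymizing before the LLM call and screening after it keeps the two concerns separate, so either check can be replaced without touching the other.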

Retrieval mechanisms

Efficient retrieval mechanisms, such as RAG (Retrieval Augmented Generation) and ICL (In-Context Learning), are essential for accessing and managing data within AI systems. Kore.ai XO Platform offers robust retrieval mechanisms that facilitate the secure and ethical handling of user data. This ensures that user information is accessed only when necessary and in compliance with data protection regulations.

Testing and fine-tuning

Continuous testing and fine-tuning are imperative to maintain AI system performance. Kore.ai XO Platform equips organizations with the tools to rigorously test AI models, assess their performance, and fine-tune them as needed. This iterative process ensures that AI systems evolve to meet changing ethical standards and user expectations. Data is integral to fine-tuning. At Kore.ai, we consider four dimensions of data:

- Data Discovery

- Data Design

- Data Development

- Data Delivery

Mastering AI agent testing with Kore.ai

A step-by-step guide to fine tuning AI agents

Interaction logs and audit trails

Transparency and accountability are bolstered through comprehensive interaction logs and audit trails. Kore.ai XO Platform captures and maintains detailed records of AI interactions, providing organizations with a transparent view of system behavior. Audit trails serve as a valuable resource for tracking system performance and addressing any ethical concerns or anomalies.
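
A single audit entry might look like the following Python sketch. The field names and the `build_audit_record` helper are hypothetical and do not reflect the platform's actual log schema. The choice to store a hash of the user's message instead of the raw text is also an assumption, made here to limit personal data in the log.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_audit_record(session_id, user_text, bot_text, guardrail_triggered):
    """Build one JSON-serializable audit entry for a single conversation turn."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "session_id": session_id,
        # Store a hash of the user's message rather than the raw text.
        "user_text_sha256": hashlib.sha256(user_text.encode("utf-8")).hexdigest(),
        "bot_text": bot_text,
        "guardrail_triggered": guardrail_triggered,
    }


record = build_audit_record(
    "s-001", "What is my balance?", "Please log in first.", False
)
print(json.dumps(record, indent=2))
```

Writing one structured record per turn makes it straightforward to filter for turns where a guardrail fired or to reconstruct a session during an audit.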

Regression testing

Regression testing safeguards against unintended changes in AI behavior. Kore.ai XO Platform facilitates regression testing to ensure that model updates do not introduce biases or other ethical issues. This process helps maintain the ethical integrity of AI systems throughout their lifecycle.
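
A regression check can be sketched in Python as a comparison against a set of approved "golden" answers. This is a minimal illustration under stated assumptions: `candidate_model` is a hypothetical lookup table standing in for a deployed model, and exact string matching is used for simplicity, whereas generative models usually need a tolerance-based or semantic comparison.

```python
def run_regression(model, golden_cases):
    """Return the cases where the model's answer no longer matches the baseline."""
    failures = []
    for prompt, expected in golden_cases:
        actual = model(prompt)
        if actual != expected:
            failures.append(
                {"prompt": prompt, "expected": expected, "actual": actual}
            )
    return failures


# Hypothetical stand-in for an updated model: a simple lookup table.
def candidate_model(prompt):
    answers = {
        "reset password": "Use the 'Forgot password' link.",
        "store hours": "9am to 5pm.",
    }
    return answers.get(prompt, "Sorry, I don't know.")


# Approved baseline answers; the second one differs from the candidate model.
golden = [
    ("reset password", "Use the 'Forgot password' link."),
    ("store hours", "9am to 6pm."),
]

failures = run_regression(candidate_model, golden)
for failure in failures:
    print(failure)
```

Running a check like this before each model update gives reviewers a concrete list of changed behaviors to approve or reject.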

Feedback collection

User feedback is a critical element in responsible AI. Kore.ai XO Platform incorporates mechanisms for collecting user feedback on AI interactions. This user-centric approach enables organizations to identify and rectify any issues promptly, improving system performance and aligning with user expectations.

Control mechanisms

Control mechanisms are essential for maintaining the ethical and responsible use of AI. Kore.ai XO Platform provides granular control over AI system behavior, allowing organizations to enforce ethical guidelines and respond to evolving regulatory requirements effectively.

Citations and custom tags

Transparency in AI decision-making is enhanced through citations and custom tags. Kore.ai XO Platform enables organizations to attribute AI responses to specific data sources and apply custom tags for additional context. This enhances accountability and builds trust among users.

Custom dashboards

Custom dashboards offer insights and oversight of AI system performance. Kore.ai XO Platform provides customizable dashboards that allow organizations to monitor key metrics and track adherence to responsible AI practices. These dashboards serve as valuable tools for governance and compliance efforts.

Kore.ai XO Platform capabilities are meticulously designed to empower organizations in implementing responsible AI. These features ensure that AI systems not only adhere to ethical standards but also offer exceptional user experiences while maintaining transparency, accountability, and compliance with regulatory frameworks.

6. Charting the course for responsible AI

IDC projects a staggering growth in the global AI market, with forecasts exceeding $500 billion by 2024, a remarkable 50% increase from 2021. Within the domain of conversational AI, responsible practices extend beyond regulatory mandates, forming the cornerstone of trust-building and user-centric experiences. These practices underscore a commitment to fairness, transparency, and ethical conduct, ensuring AI systems provide reliable and accurate responses vital for cultivating trust in today’s digital landscape.

Kore.ai’s responsible AI framework and platform capabilities equip organizations with robust tools to embed ethical considerations seamlessly into their conversational AI initiatives. By leveraging these capabilities, organizations can ensure meticulous data curation, rigorous model testing, ongoing transparency, and continuous monitoring and adaptation of AI systems. The result is not just compliance with regulations but also enhanced user trust and exceptional experiences, setting the stage for transformative changes and pioneering a future where AI is a trusted ally, delivering precise responses and unparalleled user interactions.
