What is generative AI?

It helps to start with a few key definitions and concepts before looking at how generative AI works; these basics provide a foundation for the more complex aspects of the technology. Generative AI (GenAI) is artificial intelligence that creates new content, such as text, images, and music, by learning patterns from existing data. Businesses use GenAI to automate tasks, generate content, and support decision-making across industries.

Basic concepts and terminology

AI’s historical context and evolution

Over the past 20 years, AI has evolved from basic machine learning to sophisticated generative AI. Advances in big data, neural networks, and transformer models such as BERT and GPT-3 have driven much of this progress. Automatic speech recognition (ASR), built on the same advances in neural networks and natural language processing (NLP), has also played a role: better speech recognition has improved AI systems’ ability to understand and process human language.

Significant dates and turning points

How generative AI works

Introduction to generative AI technology

Generative AI is a subset of artificial intelligence that focuses on creating new content, such as text, images, and music, by learning from existing data. It uses advanced machine learning techniques, like deep learning and neural networks, to generate outputs that mimic human creativity and intelligence.

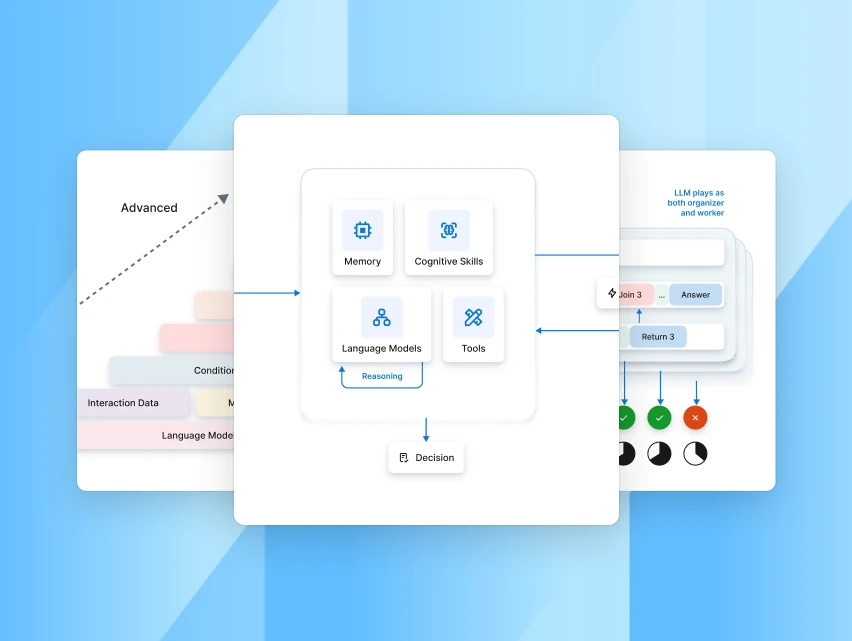
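To make "learning from existing data" concrete, here is a deliberately tiny sketch: a character-level bigram model that counts which character follows which in a training string, then samples new text from those counts. This is a toy illustration of our own, not how production systems such as GPT-3 work (they use deep neural networks trained on vastly larger datasets); the names `train_bigram` and `generate` are hypothetical.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter]:
    """'Training': count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for current, nxt in zip(text, text[1:]):
        counts[current][nxt] += 1
    return dict(counts)


def generate(model: dict[str, Counter], start: str, length: int, seed: int = 0) -> str:
    """Sample up to `length` new characters, each conditioned only on the previous one."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length):
        followers = model.get(out[-1])
        if not followers:  # character never seen with a successor: stop early
            break
        chars = list(followers)
        weights = [followers[c] for c in chars]
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)


corpus = "the cat sat on the mat. the dog sat on the log."
model = train_bigram(corpus)
print(generate(model, start="t", length=40))
```

Every character pair the sketch emits was observed in the training text, but the sequence as a whole is newly sampled; generative models scale this idea up with far richer context than one previous character.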

Key components and architecture

1. Data collection and preparation

- Large datasets are gathered and pre-processed to ensure quality and relevance.

- Data is cleaned, annotated, and transformed into formats suitable for training AI models.
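A minimal sketch of the cleaning and transformation step for text data, assuming a simple pipeline of our own: normalize whitespace and case, drop empty and duplicate records, then map words to integer IDs that a model can consume. Real pipelines add steps such as quality filtering, annotation, and subword tokenization; `clean_records`, `build_vocab`, and `encode` are hypothetical names.

```python
import re


def clean_records(records: list[str]) -> list[str]:
    """Normalize whitespace and case, then drop empty and duplicate records."""
    seen: set[str] = set()
    cleaned: list[str] = []
    for record in records:
        text = re.sub(r"\s+", " ", record).strip().lower()
        if text and text not in seen:  # keep only the first copy of each record
            seen.add(text)
            cleaned.append(text)
    return cleaned


def build_vocab(records: list[str]) -> dict[str, int]:
    """Assign an integer ID to every distinct word; ID 0 is reserved for unknown words."""
    vocab = {"<unk>": 0}
    for record in records:
        for word in record.split():
            vocab.setdefault(word, len(vocab))
    return vocab


def encode(record: str, vocab: dict[str, int]) -> list[int]:
    """Turn a cleaned record into the list of token IDs used for training."""
    return [vocab.get(word, 0) for word in record.split()]


raw = ["  Hello   world ", "hello world", "", "Generative AI\tlearns from data"]
data = clean_records(raw)
vocab = build_vocab(data)
print(data)                              # ['hello world', 'generative ai learns from data']
print([encode(r, vocab) for r in data])  # [[1, 2], [3, 4, 5, 6, 7]]
```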

2. Model training

- Deep learning models, especially neural networks, are trained on the prepared data.

- During training, the model's parameters are adjusted to minimize errors, measured by a loss function, and so improve accuracy.

- Transfer learning takes a model pre-trained on one task and fine-tunes it on a new, related task, so it can reuse the features and knowledge it has already learned. This reduces training time and improves performance, especially when data for the new task is limited.
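The training and transfer-learning ideas above can be illustrated with a toy model of our own: a one-feature linear model y ≈ w·x + b fitted by gradient descent on mean squared error, first on a larger "pre-training" dataset and then fine-tuned on a small related one, starting from the pre-trained parameters instead of from zero. Deep generative models follow the same loop (measure the error, move the parameters against its gradient) with millions or billions of parameters; the datasets, learning rate, and step counts here are arbitrary assumptions.

```python
def train(data, w=0.0, b=0.0, lr=0.01, steps=2000):
    """Fit y = w*x + b by gradient descent on mean squared error."""
    n = len(data)
    for _ in range(steps):
        # Gradients of MSE = mean((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(data, w, b):
    """Mean squared error of the model on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


# "Pre-training": plenty of data from the relationship y = 2x + 1.
source = [(x / 10, 2 * (x / 10) + 1) for x in range(-50, 51)]
w_pre, b_pre = train(source)

# New, related task (y = 2x + 1.5) with only three examples.
target = [(0.0, 1.5), (1.0, 3.5), (2.0, 5.5)]

# Same small training budget: start from scratch vs. from the pre-trained weights.
w_scratch, b_scratch = train(target, steps=20)
w_ft, b_ft = train(target, w=w_pre, b=b_pre, steps=20)
print("from scratch:", mse(target, w_scratch, b_scratch))
print("fine-tuned:  ", mse(target, w_ft, b_ft))
```

In this toy setup the fine-tuned model ends with a much lower error after the same 20 steps, because its starting parameters already encode most of the relationship.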

3. Neural networks

- Convolutional Neural Networks (CNNs): Designed for grid-like data such as images; in generative AI they commonly serve as building blocks of image-generation models.

- Recurrent Neural Networks (RNNs): Used for sequential data like speech and text.

- Transformers: State-of-the-art models for text generation that use self-attention to weigh how each token relates to the others in its context.
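The self-attention used by Transformers can be written in a few lines. The sketch below is a single-head, pure-Python version of scaled dot-product attention, softmax(QKᵀ/√d)·V. For brevity it reuses the input vectors directly as queries, keys, and values and omits the learned projection matrices, multiple heads, and masking that real models use.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: list[list[float]]) -> tuple[list[list[float]], list[list[float]]]:
    """Single-head scaled dot-product self-attention.

    Simplification: token vectors serve directly as queries, keys and values
    (no learned projections). Returns (outputs, attention weights).
    """
    d = len(x[0])
    weights = []
    outputs = []
    for q in x:
        # Similarity of this token's query to every token's key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # Output = attention-weighted average of the value vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, x)) for i in range(d)])
    return outputs, weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy 2-d token embeddings
out, attn = self_attention(tokens)
for row in attn:
    print([round(a, 3) for a in row])
```

Each row of attention weights sums to 1. The token `[1.0, 0.0]` attends equally to itself and to `[1.0, 1.0]`, and less to the orthogonal `[0.0, 1.0]`; this weighting is how context from related tokens flows into each output.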

4. Inference and generation

- Once trained, the models can generate new content based on input data or prompts.

- For example, GPT-3 can generate human-like text based on an initial sentence or keyword.
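Generation from a prompt is typically autoregressive: the model predicts a probability distribution over the next token, one token is chosen, appended to the text, and the loop repeats. The sketch below assumes a hypothetical `next_token_probs` function (a hard-coded lookup table standing in for a trained model) and shows temperature sampling, a common decoding setting; it is not GPT-3's actual API.

```python
import math
import random

# Hypothetical stand-in for a trained model: next-word probabilities given the last word.
TOY_MODEL = {
    "generative": {"ai": 0.9, "models": 0.1},
    "ai": {"creates": 0.6, "learns": 0.4},
    "creates": {"text": 0.5, "images": 0.3, "music": 0.2},
    "learns": {"patterns": 1.0},
}


def next_token_probs(tokens: list[str]) -> dict[str, float]:
    return TOY_MODEL.get(tokens[-1], {})


def sample(probs: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Rescale probabilities by temperature (p ** (1/T), renormalized), then draw one token."""
    if temperature == 0:  # greedy decoding: always take the most likely token
        return max(probs, key=probs.get)
    logits = {t: math.log(p) / temperature for t, p in probs.items()}
    m = max(logits.values())
    weights = {t: math.exp(lg - m) for t, lg in logits.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]


def generate(prompt: str, max_new_tokens: int = 5, temperature: float = 1.0, seed: int = 0) -> str:
    rng = random.Random(seed)
    tokens = prompt.lower().split()
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)
        if not probs:  # the toy model has no continuation for this word
            break
        tokens.append(sample(probs, temperature, rng))
    return " ".join(tokens)


print(generate("Generative", temperature=0))    # generative ai creates text
print(generate("Generative", temperature=1.0))  # sampled: depends on the seed
```

Lower temperatures concentrate probability on the likeliest tokens (more predictable text); higher temperatures flatten the distribution (more varied text).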

5. Evaluation and refinement

- Generated content is evaluated for quality, coherence, and relevance.

- Models are continuously refined and updated based on feedback and new data.
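One common automatic check for language models is perplexity on held-out text: the exponential of the average negative log-probability the model assigns to each actual next token (lower is better). The sketch below computes it with a hypothetical next-word probability table and an assumed small floor probability for unseen word pairs; in practice such metrics are combined with human review of coherence and relevance.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """Perplexity = exp(-mean(log p)) over the probabilities assigned to the true tokens."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


# Hypothetical next-word model: P(next | previous).
MODEL = {
    ("the", "cat"): 0.5, ("the", "dog"): 0.5,
    ("cat", "sat"): 0.9, ("dog", "sat"): 0.8,
}
FLOOR = 1e-6  # assumed tiny probability for pairs the model never saw


def score_sentence(sentence: str) -> float:
    words = sentence.split()
    probs = [MODEL.get(pair, FLOOR) for pair in zip(words, words[1:])]
    return perplexity(probs)


print(score_sentence("the cat sat"))  # low: the model expects this text
print(score_sentence("the sat cat"))  # high: an unlikely word order
```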

6. Deployment

- Trained models are deployed into production environments where they can be accessed via APIs or integrated into applications.

- Continuous monitoring tracks the model's performance in production and allows for real-time adjustments.
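Serving and monitoring can be sketched with the Python standard library alone. This is an illustration under stated assumptions, not a production setup: a hypothetical `fake_model` stands in for a trained model, `http.server` exposes it as a JSON endpoint, and a small `LatencyMonitor` records how long each request takes so a health check could flag when the average drifts above a threshold. The request/response shape (`{"prompt": ...}` in, `{"output": ...}` out) and the 0.5-second threshold are made up for the example.

```python
import json
import threading
import time
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a trained generative model."""
    return prompt.upper()


class LatencyMonitor:
    """Keeps recent request latencies and flags when their average is too high."""

    def __init__(self, window: int = 100, threshold_s: float = 0.5):
        self.window = window
        self.threshold_s = threshold_s
        self.latencies: list[float] = []
        self.lock = threading.Lock()

    def record(self, seconds: float) -> None:
        with self.lock:
            self.latencies.append(seconds)
            self.latencies = self.latencies[-self.window:]  # keep only the recent window

    def is_degraded(self) -> bool:
        with self.lock:
            if not self.latencies:
                return False
            return sum(self.latencies) / len(self.latencies) > self.threshold_s


MONITOR = LatencyMonitor()


class GenerateHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        start = time.perf_counter()
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps({"output": fake_model(payload.get("prompt", ""))}).encode()
        MONITOR.record(time.perf_counter() - start)  # processing latency, before replying
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def start_server(port: int = 0) -> ThreadingHTTPServer:
    """Start the API in a background thread; port 0 lets the OS pick a free port."""
    server = ThreadingHTTPServer(("127.0.0.1", port), GenerateHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def call_api(server: ThreadingHTTPServer, prompt: str) -> dict:
    """Minimal client: POST a prompt to the running server and decode the JSON reply."""
    url = f"http://127.0.0.1:{server.server_address[1]}/"
    req = urllib.request.Request(url, data=json.dumps({"prompt": prompt}).encode(),
                                 headers={"Content-Type": "application/json"}, method="POST")
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.loads(resp.read())
```

For example, `server = start_server()` followed by `call_api(server, "hello")` returns `{"output": "HELLO"}`; a scheduled job could then poll `MONITOR.is_degraded()` and alert or roll back when it turns true.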
