AI Glossary

Stay up to date with industry terms

The AI industry moves fast, and so do the terms used to describe it. This glossary helps you stay up to date with the evolving market language.

Large Language Model (LLM)

A Large Language Model (LLM) is an advanced AI model trained on massive text datasets to understand, process, and generate human language. It can analyze queries, summarize information, complete tasks, and support reasoning across a wide range of language-driven applications. Its strength lies in handling complexity, adapting to different contexts, and delivering coherent, context-aware output at scale.

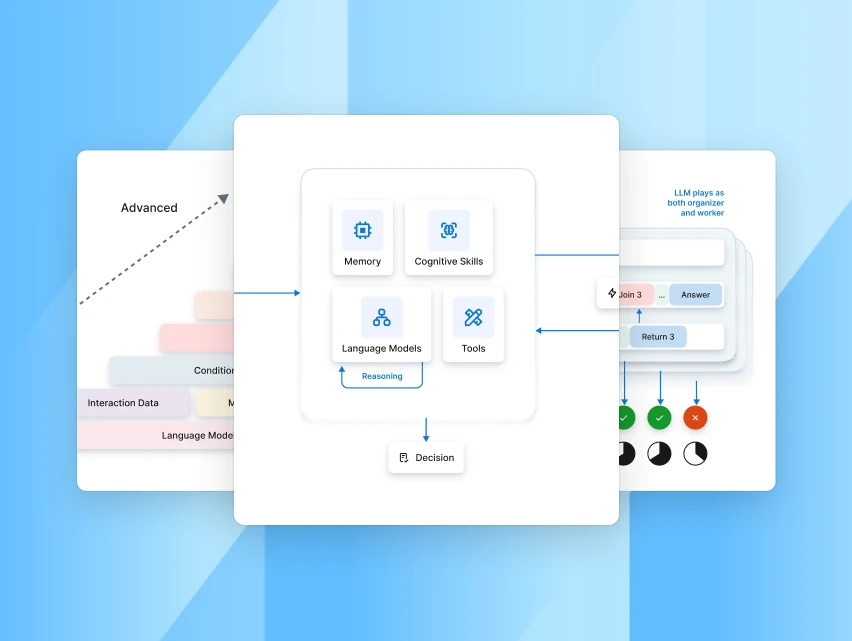

LLM Orchestration

LLM Orchestration is the process of managing how large language models interact with tools, memory, APIs, and other agents. It ensures the LLM isn’t just generating text, but working as part of a larger system that can reason, retrieve, act, and adapt across workflows.

Long-Term Memory

Long-term memory allows AI agents to remember information across interactions, such as user preferences, past actions, or previous answers. It helps make responses more personalized, consistent, and goal-aware over time.

Low-Code

Low-code platforms let users build AI-powered applications or automations using visual interfaces, with little traditional coding. They help business users and non-engineers create workflows, bots, or integrations quickly and safely.

Low-Rank Adaptation (LoRA)

LoRA is a technique for fine-tuning large models efficiently, without retraining all of their weights. It keeps the original weights frozen and trains a small set of additional low-rank matrices, which makes updates lighter, cheaper, and easier to deploy. That makes it well suited to customizing foundation models in enterprise settings.
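
To give a rough sense of the savings, the sketch below compares how many values are trained when a single weight matrix is fully fine-tuned versus adapted with a low-rank update. The matrix size (4096 x 4096) and rank (8) are illustrative assumptions, not figures from any particular model.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Values updated when the whole d x k weight matrix is retrained."""
    return d * k


def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Values trained by a rank-r update made of two small matrices (d x r and r x k)."""
    return r * (d + k)


# Illustrative sizes only (assumed for this example).
d, k, r = 4096, 4096, 8
full = full_finetune_params(d, k)
lora = lora_trainable_params(d, k, r)
print(f"full fine-tune: {full:,} values")
print(f"rank-{r} update: {lora:,} values ({lora / full:.2%} of full)")
```

The lower the rank, the fewer values are trained, at the cost of a less expressive update.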

Memory

Memory allows AI systems to retain and reuse information over time, like past interactions, user preferences, or task history. It helps the AI stay context-aware, make better decisions, and maintain continuity across conversations or workflows.

ModelOps

ModelOps is the practice of managing the full lifecycle of AI models, from training and testing to deployment, monitoring, and retirement. It’s essential for keeping models secure, updated, and aligned with business needs over time.

Model Router

A Model Router decides which AI model to use for a specific task. Based on factors like prompt type, confidence score, or domain, it directs requests to the best-fitting model, ensuring the system stays efficient, accurate, and scalable.
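
As a minimal sketch of the idea, the router below picks a model from simple rules on domain, confidence score, and prompt length. The model names, thresholds, and the `route_request` function are hypothetical and chosen only for illustration; a production router may use learned classifiers instead of fixed rules.

```python
from dataclasses import dataclass


@dataclass
class Route:
    model: str   # hypothetical model name
    reason: str  # why this model was chosen


def route_request(prompt: str, domain: str, confidence: float) -> Route:
    """Pick a model using simple, assumed rules."""
    if domain == "legal":
        return Route("legal-tuned-model", "domain match")
    # Low confidence or a very long prompt goes to the larger model.
    if confidence < 0.5 or len(prompt.split()) > 200:
        return Route("large-general-model", "low confidence or long prompt")
    return Route("small-fast-model", "default")


print(route_request("Reset my password", "support", 0.9))
```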

Multi-Agent Orchestration

Multi-agent orchestration is the coordination of multiple specialized AI agents working together to complete complex tasks. Each agent focuses on its part (retrieving data, executing actions, or reasoning), and the orchestration layer ensures everything flows smoothly.

Multi-Agent Systems

A multi-agent system is a setup where several autonomous AI agents collaborate, communicate, and share context to solve a broader goal. It’s like a digital team: each agent has its own role, and all of them work towards the same objective.

Multimodal AI

Multimodal AI refers to systems that can understand and process more than one type of input, like text, images, audio, or video. It enables richer, more flexible interactions across a wider range of tasks and channels.

Multi-Vector Search

Multi-vector search improves retrieval by using more than one semantic representation to find relevant information. It helps surface better results by capturing different meanings or perspectives behind a single query.

Natural Language Generation (NLG)

NLG is the process of turning structured data or internal knowledge into clear, human-sounding language. Whether it’s summarizing a report or answering a user question, NLG helps AI systems respond naturally and intelligently in real time.

Natural Language Processing (NLP)

Natural Language Processing is the broad field of AI that helps machines understand, interpret, and work with human language. It covers everything from analyzing text to extracting meaning, enabling systems to handle unstructured input like messages, emails, or voice commands.

Natural Language Understanding (NLU)

NLU is a subset of NLP focused on interpreting the meaning and intent behind what someone says or types. It helps AI systems figure out what the user wants, even if the phrasing is vague or unstructured, which is critical for driving accurate responses and actions.

No-Code

No-code platforms let users build AI applications, workflows, or automations without writing any code. Instead of programming, users work through visual interfaces, like drag-and-drop tools or form-based logic. This makes it possible for business teams to launch and manage AI solutions quickly, without needing deep technical skills.

Omni-Channel

Omni-channel means providing a seamless AI experience across multiple channels, like chat, voice, email, web, or mobile apps, while keeping context and continuity intact. It ensures that users get consistent support and can pick up where they left off, no matter how or where they interact.

Ontology

An ontology is a structured way of organizing knowledge. It defines the relationships between concepts, entities, and categories within a specific domain. In AI systems, it helps the agent understand how things are connected, so it can reason more accurately and respond in a context-aware way.

Open-Source LLMs

Open-source LLMs are large language models that are freely available for anyone to use, customize, or deploy. They offer flexibility and transparency, making them a strong option for enterprises that want control over model behavior, cost, or deployment environment.

Parameters

Parameters are the internal values that a language model learns during training. They control how the model interprets language, forms associations, and generates responses. In simple terms, more parameters generally mean the model can capture more complexity, but also requires more computation.

Pre-Trained Model

A pre-trained model is an AI model that’s already been trained on large datasets and can be fine-tuned or used directly for specific tasks. It saves time and resources by offering a solid foundation that can be adapted quickly to new use cases.

Probabilistic Model

A probabilistic model makes decisions or predictions based on the likelihood of different outcomes. Instead of producing one “correct” answer, it weighs possibilities and selects the most likely one, making it useful for language, reasoning, and uncertain scenarios.

Prompt Chaining

Prompt chaining is the technique of linking multiple prompts together, using the output of one as the input to the next, to guide the model through multi-step reasoning or tasks. It helps break down complex problems into manageable steps for more reliable outcomes.
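
The sketch below shows the mechanics in a few lines: each prompt template receives the previous step's output. The `run_chain` helper, the templates, and the `fake_llm` stand-in are all hypothetical; in practice `llm` would be a call to a real model.

```python
from typing import Callable, List


def run_chain(templates: List[str], first_input: str, llm: Callable[[str], str]) -> str:
    """Feed each step's output into the next prompt template."""
    text = first_input
    for template in templates:
        prompt = template.format(input=text)
        text = llm(prompt)
    return text


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call: wraps the prompt so the chaining is visible."""
    return f"<{prompt}>"


steps = ["Summarize: {input}", "Translate to French: {input}"]
print(run_chain(steps, "quarterly report", fake_llm))
```

With the stand-in model, the printed result shows the first step's output nested inside the second step's prompt.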

Prompt Engineering

Prompt engineering is the art of crafting instructions that guide an AI model’s behavior. The way a prompt is written can shape the tone, format, and accuracy of the response, making it a powerful tool for improving results without retraining the model.

Prompt Pipelines

Prompt pipelines are structured sequences of prompts, logic, and decision steps that together drive a larger task. Think of them as reusable flows where each step builds on the last, helping AI systems complete end-to-end actions more reliably.

Query Optimization

Query optimization involves refining a query to make it more efficient, precise, or context-aware so the AI retrieves the best possible answers faster. This could include rephrasing, ranking priorities, or eliminating unnecessary noise in the input before processing it.

Reasoning

Reasoning is the AI’s ability to think through a problem, break it into steps, and make informed decisions. It’s what separates reactive bots from intelligent agents that can handle ambiguity, follow goals, and adapt in real time.

Reinforcement Learning

Reinforcement Learning is a method where AI learns by trial and error, getting rewarded for good outcomes and penalized for bad ones. It’s useful for training agents to improve over time in dynamic or goal-driven environments.

Reinforcement Learning from Human Feedback (RLHF)

RLHF combines reinforcement learning with human guidance. Instead of just learning from rules, the AI improves by watching how humans rate or correct its outputs, leading to responses that better match expectations and values.

Responsible AI

Responsible AI means building and deploying AI systems that are ethical, transparent, fair, and aligned with human values. It covers things like avoiding bias, respecting privacy, and making sure decisions can be explained and trusted.

Retrieval-Augmented Generation (RAG)

RAG is an AI technique that brings together three steps (retrieval, augmentation, and generation) to produce more accurate and context-aware responses. First, it retrieves relevant information from trusted external sources, such as documents or databases. Then it augments the input by combining the user’s query with the retrieved data, giving the model richer context to work with. Finally, it generates a response based on that combined input. This approach reduces hallucinations, keeps answers grounded in real knowledge, and makes the system more reliable, especially for enterprise use cases where accuracy and traceability are essential.
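
The three steps can be sketched as below. This is a toy illustration under stated assumptions: retrieval uses simple word overlap rather than a real search index, the sample documents are made up, and `echo_llm` is a placeholder for an actual model call.

```python
import re
from typing import Callable, List


def words(text: str) -> set:
    """Lower-cased word set, used here as a crude relevance signal."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Step 1: pick the documents that share the most words with the query."""
    query_words = words(query)
    ranked = sorted(documents, key=lambda doc: len(query_words & words(doc)), reverse=True)
    return ranked[:top_k]


def augment(query: str, passages: List[str]) -> str:
    """Step 2: combine the user's query with the retrieved passages."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


def rag_answer(query: str, documents: List[str], llm: Callable[[str], str], top_k: int = 1) -> str:
    """Step 3: generate a response from the combined input."""
    return llm(augment(query, retrieve(query, documents, top_k)))


# Made-up documents for illustration.
DOCS = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
]


def echo_llm(prompt: str) -> str:
    """Placeholder model: returns the prompt so the augmented input can be inspected."""
    return prompt


print(rag_answer("How long do refunds take?", DOCS, echo_llm))
```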

Robotic Process Automation (RPA)

RPA automates repetitive tasks using bots that mimic human actions, like clicking buttons or copying data between systems. While powerful for rule-based tasks, it lacks the reasoning and flexibility of agentic AI, which can adapt to changing goals and context.

Role-Based Access Control (RBAC)

RBAC restricts access to features or data based on a user’s role, like admin, agent, or end user. It’s essential in enterprise AI platforms for protecting sensitive information and enforcing security policies across teams.
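
A minimal sketch of the idea: each role maps to a set of permissions, and every request is checked against that set. The role names follow the examples above; the permission names and the `is_allowed` helper are hypothetical.

```python
# Hypothetical permission sets for each role.
ROLE_PERMISSIONS = {
    "admin": {"view_conversations", "edit_bots", "manage_users"},
    "agent": {"view_conversations"},
    "end_user": set(),
}


def is_allowed(role: str, permission: str) -> bool:
    """Return True only if the role explicitly grants the permission."""
    return permission in ROLE_PERMISSIONS.get(role, set())


print(is_allowed("agent", "view_conversations"))
print(is_allowed("agent", "manage_users"))
```

Unknown roles get an empty permission set, so access is denied by default.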

Scaffolding

Scaffolding is a technique where a complex task is broken into smaller steps that the AI can reason through, often using intermediate prompts, models, or agents. It’s helpful for multi-step reasoning, planning, and decision-making.

Search and Data AI (Kore.ai)

Search and Data AI is Kore.ai’s intelligent framework for enterprise knowledge discovery. It brings together agentic RAG, semantic understanding, multi-source connectors, and hybrid vector search to turn scattered internal data, like documents, databases, FAQs, or web content, into accurate, context-rich answers. The system intelligently ingests and indexes information, applies semantic and keyword searches, and wraps it all in conversational AI that can ask follow‑ups, maintain context across the session, and smoothly switch to a live agent when needed.

Semantic Search

Semantic search goes beyond keywords to understand the meaning behind a query. It helps AI systems find relevant content, even if the wording doesn’t exactly match, by looking at intent, context, and relationships between concepts.

Sentiment Analysis

Sentiment analysis helps AI understand emotions behind text, whether it’s positive, negative, or neutral. It’s useful in support, marketing, and feedback systems to assess customer tone and urgency.

Sequence Modeling

Sequence modeling is the process of analyzing or predicting patterns in ordered data, like sentences, clickstreams, or time-series events. It’s essential for tasks where the order of information affects the outcome, such as language processing or behavior prediction.

Short-Term Memory

Short-term memory stores recent inputs, decisions, or conversational context that an agent uses during an active session. It helps the system stay coherent and relevant within a task, without mixing it up with long-term data or unrelated past interactions.

Small Language Models (SLMs)

Small Language Models are compact AI models trained for specific tasks or domains. They’re faster, more cost-effective, and easier to control than massive models, making them ideal for use cases that require speed, privacy, or domain precision.

Software Development Kit (SDK)

An SDK is a collection of tools, libraries, and documentation that helps developers build or extend AI applications. It provides everything needed to integrate with APIs, build custom features, or embed AI into enterprise workflows.

Sparse Retrieval

Sparse retrieval relies on traditional keyword matching methods to retrieve content. It’s fast and effective for exact matches, but often less flexible than semantic search when queries are vague or varied.

Supervised Learning

Supervised learning is when an AI model is trained using labeled data: examples where the input and correct output are known. It’s widely used for tasks like classification, prediction, and intent recognition.

Synthetic Data Generation

Synthetic data generation involves creating artificial data, like text, images, or records, to train or test AI models. It’s especially useful when real data is limited, sensitive, or needs to be balanced for fairness.

Temperature

Temperature is a setting that controls how “creative” the AI gets. A low temperature keeps responses focused and predictable. A higher one makes answers more diverse, but sometimes less accurate. It’s like adjusting how bold the AI is allowed to be.
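
In many implementations, temperature works by dividing the model's raw scores by the temperature value before they are turned into probabilities. The sketch below assumes that approach; the scores are made up, and `softmax_with_temperature` is an illustrative helper rather than any specific library's API.

```python
import math
from typing import List


def softmax_with_temperature(scores: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    highest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.0]  # made-up scores for three candidate words
print("low temperature :", softmax_with_temperature(scores, 0.5))
print("high temperature:", softmax_with_temperature(scores, 2.0))
```

At the lower temperature the top-scoring option takes most of the probability; at the higher one the options are closer together, so sampling produces more varied output.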

Testing

Testing is how we make sure AI works as expected before it goes live. It includes checking accuracy, behavior, and edge cases, so there are no surprises when customers or teams start using it.

Tokens

Tokens are the chunks of text that an AI model reads or writes, like words or parts of words. The more tokens you give the model, the more context it has to work with. But there’s always a limit, so choosing what goes in really matters.
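
One common way to respect the limit is to keep only the most recent messages that fit. The sketch below assumes that strategy and counts whitespace-separated words as a crude stand-in for tokens; real tokenizers split text into subword units, so actual counts will differ.

```python
from typing import List


def count_tokens(text: str) -> int:
    """Crude stand-in: counts words, not real subword tokens."""
    return len(text.split())


def fit_to_budget(messages: List[str], max_tokens: int) -> List[str]:
    """Keep the most recent messages whose combined size fits the budget."""
    kept: List[str] = []
    used = 0
    for message in reversed(messages):  # newest first
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = ["hello there", "how can I reset my password", "thanks"]
print(fit_to_budget(history, max_tokens=7))
```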

Taxonomy

A taxonomy is just a fancy word for a well-organized list of categories. It helps the AI organize things in a meaningful way, like types of customer issues, product categories, or departments, so it knows how to respond and route information correctly.

Toxicity

Toxicity is when AI says something harmful, offensive, or inappropriate. It’s not always intentional; the model may simply be repeating patterns it has seen. That’s why filters and safeguards are used to catch and prevent it from showing up in responses.

Training Data

Training data is what an AI system learns from. It could be text, documents, conversations, anything that teaches the model how language works and what to expect. The better the training data, the smarter and more accurate the AI becomes.

Transfer Learning

Transfer learning is when an AI takes what it learned from one task and uses it for another. It’s like reusing knowledge: it saves time and effort and helps the model get smarter, faster.

Transformer

The transformer is a type of AI model architecture that made today’s powerful language models possible. It helps the AI understand how words relate to each other in a sentence so it can generate responses that make sense.

Transparency

Transparency means you can see and understand how an AI system came to its answers. It helps build trust, especially in business settings where decisions need to be explained, tracked, and improved over time.

Unstructured Data

Unstructured data is information that doesn’t follow a fixed format, like emails, chat logs, PDFs, images, or audio files. It’s messy but rich with insights, and AI systems are designed to make sense of it by extracting meaning, context, and intent.

Unsupervised Learning

Unsupervised learning is when AI is trained on data without labels. It learns by spotting patterns, clusters, or relationships on its own, which is useful for organizing data or discovering hidden insights without manual setup.

Vector Databases

A vector database is where embeddings (numerical representations of content) are stored and searched. Instead of matching text directly, it compares how similar the ideas behind different pieces of content are, making search more accurate, especially for open-ended queries.

Vector Search

Vector search finds information based on meaning, not just keywords. It compares “embeddings”, numerical representations of content, to return the most relevant results, even when the user’s words don’t exactly match the document.
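
A minimal sketch of the comparison step, assuming cosine similarity as the closeness measure (a common choice, though not the only one). The three-number embeddings and document names are made up for illustration; real embeddings come from a model and have hundreds or thousands of dimensions.

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Similarity of two vectors by direction; 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def vector_search(query_vec: List[float], index: Dict[str, List[float]], top_k: int = 1) -> List[str]:
    """Return the ids of the documents whose embeddings are closest to the query."""
    ranked = sorted(index.items(), key=lambda item: cosine_similarity(query_vec, item[1]), reverse=True)
    return [doc_id for doc_id, _ in ranked[:top_k]]


# Made-up embeddings for illustration only.
INDEX = {
    "password reset guide": [0.9, 0.1, 0.0],
    "billing FAQ": [0.1, 0.9, 0.1],
}
query_embedding = [0.8, 0.2, 0.1]  # imagined embedding of "I can't log in"
print(vector_search(query_embedding, INDEX))
```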

Zero-Shot Learning

Zero-shot learning lets an AI handle tasks it hasn’t been explicitly trained on just by understanding the instructions. It’s like giving the model a prompt and having it figure things out without needing examples or retraining.
